Artificial intelligence is rapidly reshaping the enterprise security landscape. From predictive analytics to generative assistants, AI now sits inside nearly every workflow that once belonged only to humans. For CIOs, CISOs, and information security leaders, especially in regulated industries and the public sector, this shift has created both an opportunity and a dilemma: how do you innovate with AI at speed while maintaining the same rigorous trust boundaries you’ve built around users, devices, and data?

The answer lies in the convergence of AI Governance and Zero Trust Architecture (ZTA). Together, they form the foundation of Zero Trust Governance, a unified model for securing, auditing, and proving accountability in the age of intelligent systems.

Security and tech leaders often face two conflicting pressures: move fast to harness AI, and stay safe to meet regulatory and mission demands. The fear of missing out drives organizations to deploy AI without guardrails, and the resulting fear of compliance failure or a data breach can then stall innovation.

Zero Trust Governance breaks this cycle. It allows organizations to move fast and prove control, turning fear into confidence and compliance into a competitive differentiator.

The Problem: AI Broke the Old Trust Model

Zero Trust was designed to protect distributed users and cloud workloads under the principle of “never trust, always verify.” But AI has changed the equation.

Modern AI systems are not passive applications—they’re active decision-makers and actors. They process sensitive data, initiate transactions, generate content, and even make autonomous recommendations that can alter business outcomes. Yet most organizations still treat them as “software,” not as privileged entities that require identity, access control, and monitoring.

But unlike humans in security or IT roles, who are trained to question, escalate, and validate, AI systems are typically trained to be agreeable. This “sycophantic” tendency means that an AI system will often comply with a user’s prompt or instruction without considering whether the action violates policy, exceeds privileges, or introduces risk. Where a human administrator might challenge an improper request, an AI system is likely to execute it obediently unless governance and Zero Trust controls intervene.

Without governance, this creates several vulnerabilities:

- Shadow AI: Unapproved models, APIs, or third-party AI tools appear in the environment with no oversight.

- Opaque Decisioning: You can verify data inputs but not how a model reaches its conclusions.

- Privilege Sprawl: Models and pipelines frequently operate with excessive permissions or unrestricted data access. A common example is when generative AI or retrieval-augmented generation (RAG) systems are not connected to governance or access control systems. In these cases, user permissions often do not align with the data permissions being queried, creating exposure and breaking Zero Trust assumptions.

- Autonomous Agents: Many agentic AI systems now operate with partial or full autonomy. Even when a human is in the loop, these agents frequently request credentials or permissions on demand, which conflicts with Zero Trust principles that require pre-approval and continuous verification. The alternative, granting blanket permissions upfront, breaks the core assumption of least privilege and can create significant security exposure.

- Compliance Gaps: Security teams can’t map AI use to regulations and standards such as the EU AI Act, HIPAA, FedRAMP, StateRAMP, DoD Impact Levels (IL), or CMMC, leaving them exposed at audit time.

These aren’t hypothetical problems; they’re already driving incidents across sectors.
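To make the privilege-sprawl risk in RAG systems concrete, here is a minimal Python sketch of permission-aware retrieval. It assumes each indexed document carries a list of groups allowed to read it and that the caller’s group memberships are known at query time; the `Document` class, the `retrieve_for_user` function, and the keyword-overlap scoring are hypothetical stand-ins for a real identity provider and vector store, not any specific product’s API.

```python
from dataclasses import dataclass, field


@dataclass(frozen=True)
class Document:
    doc_id: str
    text: str
    # Groups allowed to read this document (hypothetical ACL model).
    allowed_groups: frozenset = field(default_factory=frozenset)


def retrieve_for_user(query: str, corpus: list[Document],
                      user_groups: set[str], k: int = 3) -> list[Document]:
    """Return up to k relevant documents that the caller is entitled to read."""
    # 1. Enforce the caller's entitlements first: no shared group, no access.
    permitted = [d for d in corpus if d.allowed_groups & user_groups]

    # 2. Rank only what survived the filter. Keyword overlap stands in
    #    for real vector similarity search in this sketch.
    terms = query.lower().split()
    scored = [(sum(t in d.text.lower() for t in terms), d) for d in permitted]
    ranked = sorted((pair for pair in scored if pair[0] > 0),
                    key=lambda pair: pair[0], reverse=True)
    return [d for _, d in ranked[:k]]


corpus = [
    Document("hr-1", "salary bands by grade", frozenset({"hr"})),
    Document("kb-1", "salary FAQ for employees", frozenset({"all-staff"})),
]
# An all-staff user asking about salaries only sees the FAQ, not HR data.
print([d.doc_id for d in retrieve_for_user("salary", corpus, {"all-staff"})])  # ['kb-1']
```

The key design choice is that the entitlement check runs before ranking, so content the user could not open directly never reaches the model’s context window. That keeps the AI from becoming a side channel around access controls the organization already has.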

Why It Matters: From Risk Management to Mission Resilience

For CIOs and CISOs, this convergence is about more than compliance. It’s about resilience.

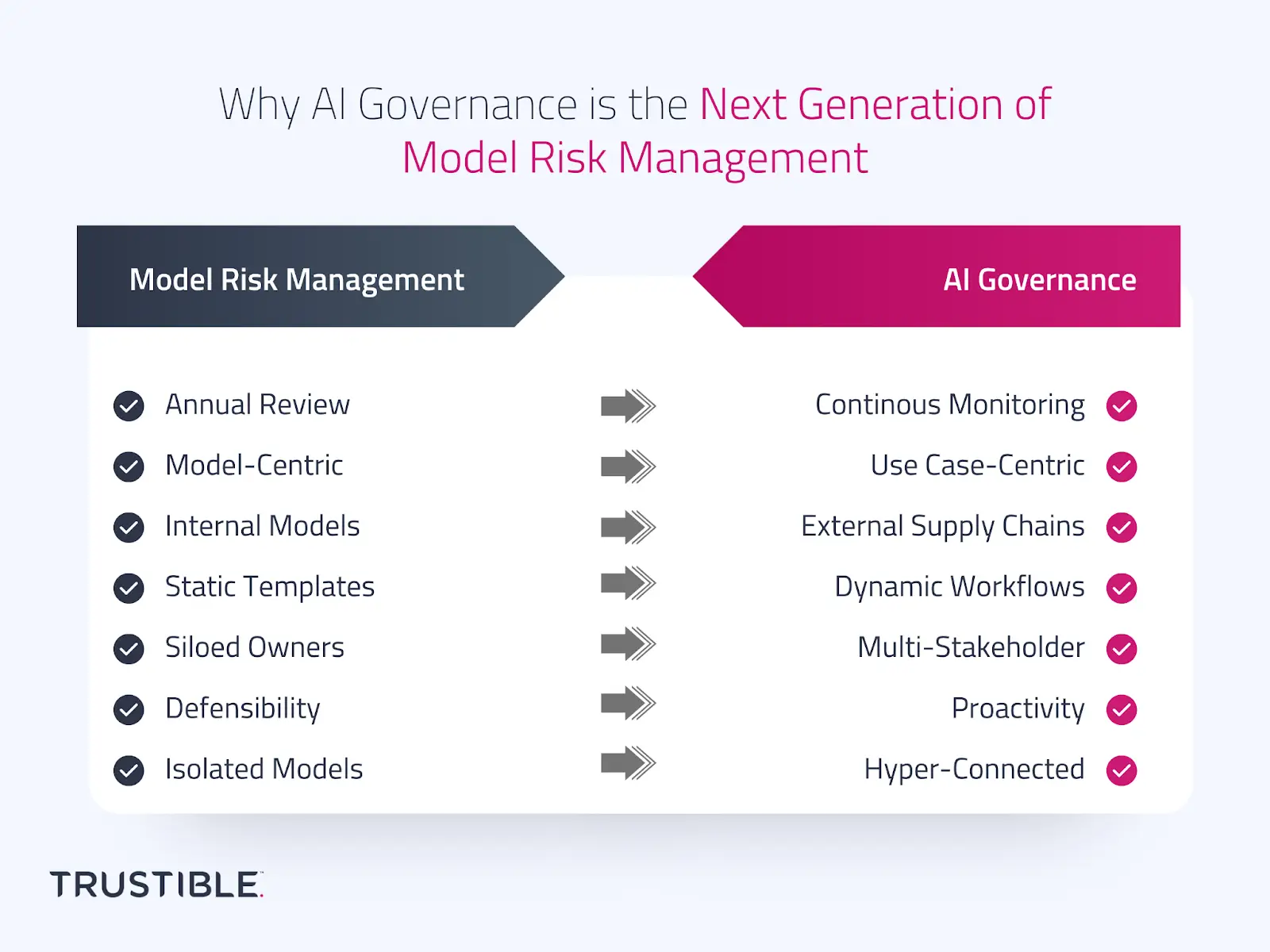

AI’s growing autonomy means traditional perimeter-based security can’t keep pace. In regulated industries and government, AI governance becomes a trust enabler—documenting, enforcing, and verifying how AI systems behave, learn, and evolve.

Organizations that merge Zero Trust principles and AI Governance achieve three key outcomes:

- Comprehensive Visibility – Every AI system, model, or agent becomes a known, managed entity. The cost of lacking this visibility is real: in one reported incident, a rogue AI system processed data without oversight, and the activity came to light only through after-the-fact forensic analysis.

- Continuous Verification – Controls aren’t static; they adapt in real time as models and data change.

- Proof of Trust – Audit trails and governance workflows provide evidence for regulators, customers, and oversight bodies.

This isn’t optional. As regulations and frameworks such as the EU AI Act and the NIST AI RMF evolve, demonstrating governance will become a baseline requirement for doing business.

The Landscape: A Convergence Already Underway

The industry is moving toward a security paradigm where AI Governance and Zero Trust are inseparable.

- Agentic AI systems (autonomous AIs that call APIs or trigger workflows) act like employees with credentials and therefore require identity management and activity logging.

- Cloud-native Zero Trust models now include governance and telemetry for AI behavior alongside traditional assets.

- Government guidance from agencies like CISA and NIST increasingly calls for visibility into AI-driven operations and risk posture.

This trend marks a fundamental shift: Zero Trust isn’t just about keeping bad actors out; it’s about ensuring your AI behaves ethically, securely, and predictably inside the network.

What Good Looks Like: A Unified Zero Trust & AI Governance Framework

A mature Zero Trust Governance framework treats AI as a living part of the enterprise trust fabric. Key elements include:

- AI Identity and Inventory: Catalog every AI system, from models to APIs, and assign each an owner, a purpose, and a risk profile. You can’t protect what you can’t see.

- Least-Privilege Access for AI: Restrict who (and what) can train, deploy, or modify models. Integrate IAM with AI lifecycle controls so that AI systems access only the data and tools they need.

- Continuous Risk and Impact Assessment: Evaluate models on an ongoing basis for drift, bias, and performance degradation. Risk assessment isn’t a one-time event; it’s a lifecycle obligation that calls for both scheduled periodic reviews and real-time assessment of higher-risk systems and models.

- Regulatory Mapping and Compliance Reporting: Link your security controls directly to legal and ethical AI obligations, and demonstrate compliance across frameworks such as the NIST AI RMF, ISO/IEC 42001, and the EU AI Act.

- AI Monitoring and Incident Response: Extend observability into model behavior. Detect anomalies, unauthorized actions, or governance violations, and tie them to your broader incident response playbooks.

When these capabilities are combined, your Zero Trust program evolves from a reactive shield into a proactive governance engine.
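As an illustration of how inventory and least privilege fit together, the Python sketch below models a small AI registry. Each asset has an accountable owner, a purpose, a risk tier, and an explicit allow-list of scopes, and any request from an unregistered system or for an unlisted scope is denied. All names (`AIAsset`, `AIRegistry`, the scope strings) and the review interval per risk tier are hypothetical assumptions for illustration, not a prescribed policy or any product’s API.

```python
from dataclasses import dataclass, field
from datetime import date, timedelta
from enum import Enum
from typing import Optional


class RiskTier(Enum):
    LOW = "low"
    MEDIUM = "medium"
    HIGH = "high"


# Hypothetical review cadence per tier; real intervals come from your own policy.
REVIEW_INTERVAL = {
    RiskTier.LOW: timedelta(days=365),
    RiskTier.MEDIUM: timedelta(days=180),
    RiskTier.HIGH: timedelta(days=90),
}


@dataclass
class AIAsset:
    asset_id: str
    owner: str
    purpose: str
    risk_tier: RiskTier
    # Explicit allow-list of scopes such as "read:kb"; anything absent is denied.
    allowed_scopes: set[str] = field(default_factory=set)
    last_reviewed: Optional[date] = None


class AIRegistry:
    def __init__(self) -> None:
        self._assets: dict[str, AIAsset] = {}

    def register(self, asset: AIAsset) -> None:
        # Accountability first: refuse assets without a named owner.
        if not asset.owner:
            raise ValueError(f"{asset.asset_id}: every AI asset needs an owner")
        self._assets[asset.asset_id] = asset

    def authorize(self, asset_id: str, scope: str) -> bool:
        # Deny by default: unregistered ("shadow") AI and unlisted scopes fail closed.
        asset = self._assets.get(asset_id)
        return asset is not None and scope in asset.allowed_scopes

    def reviews_due(self, today: date) -> list[str]:
        # Assets never reviewed, or whose tier-based interval has elapsed.
        return sorted(
            a.asset_id for a in self._assets.values()
            if a.last_reviewed is None
            or today - a.last_reviewed >= REVIEW_INTERVAL[a.risk_tier]
        )


registry = AIRegistry()
registry.register(AIAsset("support-bot", "jane.doe", "customer support",
                          RiskTier.HIGH, {"read:kb"}, date(2024, 1, 1)))
print(registry.authorize("support-bot", "read:kb"))    # True
print(registry.authorize("support-bot", "write:crm"))  # False: scope not granted
print(registry.authorize("unknown-model", "read:kb"))  # False: not in the registry
```

Denying by default means shadow AI fails closed rather than open, and the tier-based `reviews_due` check is one simple way to turn periodic risk reviews into a list a scheduler can act on.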

Tactics to Mitigate AI Risks and Build Resilience

Building effective AI Governance and Zero Trust is all about embedding new habits into your security DNA.

- Discover and Classify AI Systems: Use automated discovery to identify every AI component in your ecosystem. Map dependencies and assign accountability.

- Define Clear AI Use Policies: Codify acceptable use, data-sharing rules, and model deployment standards. Make governance part of the MLOps workflow.

- Automate Where Possible: Automate intake, review, and approval workflows to keep pace with AI adoption.

- Integrate Governance with Security: Feed AI telemetry into SIEM and SOAR systems for real-time monitoring and threat detection.

- Educate and Enforce: Train teams on responsible AI practices and make adherence measurable.

- Measure and Audit Continuously: Use dashboards to monitor model health, compliance status, and risk posture.

The goal is to make AI accountability continuous, visible, and verifiable.
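Feeding AI telemetry into SIEM and SOAR systems is easiest when every AI action is logged as a structured, single-line event. The Python sketch below serializes one such event as JSON. The field names (`ai_asset_id`, `on_behalf_of`, `decision`, and so on) and the severity mapping are illustrative assumptions rather than a standard schema such as ECS or OCSF, so map them to whatever your SIEM expects.

```python
import json
from datetime import datetime, timezone
from typing import Optional


def ai_audit_event(asset_id: str, on_behalf_of: str, action: str, allowed: bool,
                   reason: str, now: Optional[datetime] = None) -> str:
    """Serialize one AI action as a single-line JSON event for SIEM ingestion."""
    ts = now if now is not None else datetime.now(timezone.utc)
    event = {
        "timestamp": ts.isoformat(),
        "event_type": "ai.action",
        "ai_asset_id": asset_id,
        "on_behalf_of": on_behalf_of,  # the human identity the AI acted for
        "action": action,
        "decision": "allow" if allowed else "deny",
        "reason": reason,
        # Denials get a higher severity so SOAR playbooks can trigger on them.
        "severity": "low" if allowed else "high",
    }
    # sort_keys gives stable output; without indent, json.dumps emits one line.
    return json.dumps(event, sort_keys=True)


print(ai_audit_event("support-bot", "alice", "crm.export", False, "scope not granted"))
```

Recording which user an agent acted on behalf of, alongside the allow or deny decision, is what lets analysts correlate AI activity with the human identities the SIEM already tracks.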

Where Trustible Fits

Trustible is the connective tissue between AI Governance and Zero Trust. Our platform gives enterprises and public agencies the ability to:

- Create a centralized AI registry with full ownership and risk visibility.

- Automate policy enforcement and role-based access for AI systems.

- Run continuous risk and impact assessments aligned with NIST and ISO frameworks.

- Map AI controls to regulations, producing audit-ready evidence for regulators and clients.

- Monitor model behavior, detect drift or misuse, and tie incidents directly into your security response workflows.

Trustible helps leaders operationalize Zero Trust for AI, without adding complexity or slowing innovation.

FAQ

Q: What’s the difference between AI Governance and traditional cybersecurity?

AI Governance ensures not just that systems are secure, but that AI is fair, compliant, and explainable. Cybersecurity protects assets; AI Governance protects decisions and outcomes.

Q: Can Zero Trust principles really apply to AI?

Yes. AI systems can and should be treated as identities: authenticated, authorized, and continuously verified throughout their lifecycle.
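One way to picture “authenticated, authorized, and continuously verified” for an AI agent is a short-lived, narrowly scoped credential that is re-checked on every call, instead of a standing blanket permission. The Python sketch below issues and verifies a simplified HMAC-signed token using only the standard library. The token format, the hardcoded demo secret, and the function names are hypothetical; a production system would rely on its identity provider (for example, OAuth 2.0 access tokens or signed JWTs) and a managed key store.

```python
import base64
import hashlib
import hmac
import json
import time
from typing import Optional

# Demo-only key. In practice the key lives in a KMS or HSM, never in source code.
SECRET = b"demo-only-secret"


def _sign(body: str) -> str:
    return hmac.new(SECRET, body.encode(), hashlib.sha256).hexdigest()


def issue_agent_token(agent_id: str, scopes: list[str], ttl_seconds: int = 300,
                      now: Optional[float] = None) -> str:
    """Mint a short-lived token naming the agent and the exact scopes granted."""
    issued_at = time.time() if now is None else now
    claims = {"sub": agent_id, "scopes": sorted(scopes), "exp": issued_at + ttl_seconds}
    body = base64.urlsafe_b64encode(json.dumps(claims, sort_keys=True).encode()).decode()
    return f"{body}.{_sign(body)}"


def verify_agent_token(token: str, required_scope: str,
                       now: Optional[float] = None) -> bool:
    """Re-check signature, expiry, and scope on every call (continuous verification)."""
    body, _, sig = token.rpartition(".")
    if not body or not hmac.compare_digest(sig, _sign(body)):
        return False  # malformed, tampered with, or forged
    claims = json.loads(base64.urlsafe_b64decode(body))
    current = time.time() if now is None else now
    return current < claims["exp"] and required_scope in claims["scopes"]


token = issue_agent_token("invoice-agent", ["read:invoices"], ttl_seconds=300)
print(verify_agent_token(token, "read:invoices"))   # True while unexpired
print(verify_agent_token(token, "write:payments"))  # False: scope never granted
```

Because the token expires within minutes and names its scopes explicitly, an agent that needs a new permission must request a fresh credential, which is the point where policy, or a human approver, can intervene.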

Q: How does AI Governance help with compliance?

AI Governance creates the documentation, traceability, and evidence regulators demand. It maps controls to evolving AI-specific laws like the EU AI Act.

Q: What’s the first step for a regulated organization?

Inventory your AI systems and establish ownership. Once you know what exists, you can govern and secure it.

Q: Why act now?

Delaying AI Governance increases your exposure. As AI becomes embedded across operations, retrofitting governance later will be far more costly than building it into systems and operations today.

Final Thought

AI is no longer experimental: it’s embedded everywhere, and the risk vectors are significant. This means the concept of Zero Trust must also evolve to secure and govern the next generation of intelligent systems.

The convergence of AI Governance and Zero Trust isn’t a trend; it’s the next stage in enterprise resilience. By embedding governance into your security architecture now, you’ll move faster, reduce risk, and prove compliance before regulators (and adversaries) force you to.