Prepare your organization for the first comprehensive AI law in the U.S.

Colorado’s SB205 is the first U.S. law to comprehensively regulate developers and deployers of high-risk AI systems.

What is SB205?

SB205 is Colorado’s law regulating developers and deployers of high-risk AI systems, and it names the NIST AI RMF as an appropriate standard for the risk management program it requires. The NIST AI RMF is the U.S. federal government’s first comprehensive framework to identify and manage risks associated with the development and deployment of AI. Released in January 2023, the NIST AI RMF is organized around four core risk management functions: Govern, Map, Measure, and Manage. Each of the four functions has underlying categories and subcategories of risk management actions and outcomes. The NIST AI RMF is accompanied by a series of companion documents meant to offer a practical roadmap for organizations to implement the framework.

Key Requirements of SB205

Requirement

- Developers and deployers of high-risk AI systems must assess their systems for potential discrimination.

How Trustible™ Helps

- Trustible helps organizations identify which AI use cases or models fall within the scope of the law, and provides recommendations on how to measure for potential discrimination and what documentation is needed to support those measurements.

Requirement

- Developers of high-risk AI systems must implement a risk management program that, among other things, identifies and mitigates known or reasonably foreseeable risks of algorithmic discrimination.

How Trustible™ Helps

- Trustible offers out-of-the-box risk assessments that provide risk and mitigation recommendations. In addition, Trustible can help organizations efficiently conduct a multi-stakeholder risk assessment process to capture inputs from a diverse set of stakeholders.

Requirement

- Deployers of high-risk AI systems must conduct impact assessments annually, and again after any substantial modification is made to an AI system.

How Trustible™ Helps

- Trustible offers out-of-the-box workflows for conducting impact assessments, and can provide insights and recommendations for appropriate mitigation strategies.

Requirement

- Consumers have a right to contest adverse consequential decisions resulting from the use of a high-risk AI system.

How Trustible™ Helps

- Trustible helps organizations document reported incidents, verify whether they are relevant, and determine which AI risks they are associated with.

Requirement

- Deployers of high-risk AI systems must provide consumers with high-level details about the system and its intended purpose.

How Trustible™ Helps

- Trustible can help organizations generate relevant transparency documentation, such as model cards or use case risk assessments, so that users are appropriately informed.

Requirement

- Developers and deployers of high-risk AI systems must report algorithmic discrimination stemming from a high-risk AI system within 90 days of its detection.

How Trustible™ Helps

- Trustible helps organizations document reported incidents and offers workflows for reviewing them and reporting them to relevant regulators.

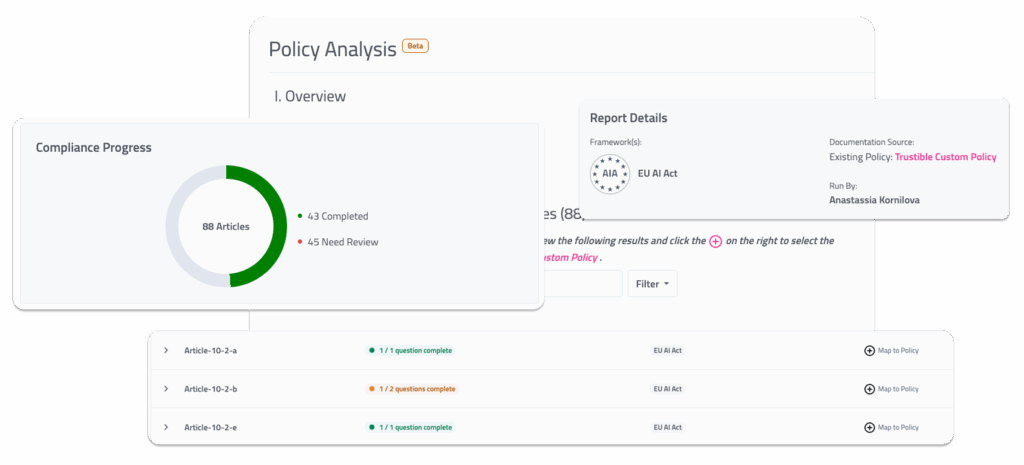

Navigate SB205 with Trustible™

Risk & Impact Assessments

Identify, manage, measure, and mitigate potential risks or harms in your AI systems.

Central Compliance

Implement frameworks to avoid duplicative compliance obligations.

Documentation

Centralize your AI documentation in a single source of truth.

FAQs

The law will take effect on February 1, 2026. However, Colorado Governor Jared Polis signed the law with some reservations, and lawmakers are expected to make amendments prior to the effective date.

The law is not exclusive to organizations that are based in Colorado. A developer or deployer is defined as a “person doing business in the state.” Therefore, organizations that have operations in the state of Colorado are expected to comply with the law.

The law does not provide specific penalties for non-compliance. Instead, it empowers the Colorado Attorney General to promulgate rules, which will likely address specific consequences for violating the law.

The law requires organizations to implement a risk management program. Specifically, it identifies the NIST AI RMF and ISO 42001 as appropriate standards for satisfying this requirement, and it also allows compliance with similarly stringent standards (e.g., the EU AI Act).