In November 2022, ChatGPT brought the AI revolution straight into the hands of everyday consumers. However, if 2022 launched the proliferation and democratization of AI technology, 2023 can be remembered as the year in which policymakers tried to rein in AI.

In the U.S., every branch of the federal government has weighed in on how AI should be developed or deployed. Yet, similar to other tech-related issues (e.g., cybersecurity and privacy), the federal government’s response has lacked cohesion. Congress has not seriously acted on AI legislation despite holding a series of hearings on AI-related topics throughout the year. The legislative void has left federal agencies to either leverage existing federal law or initiate rule-making proceedings to address the evolving AI landscape. Moreover, while President Biden’s Executive Order on AI sought to make AI oversight more cohesive, it is primarily limited to AI designed, deployed, and procured by the federal government.

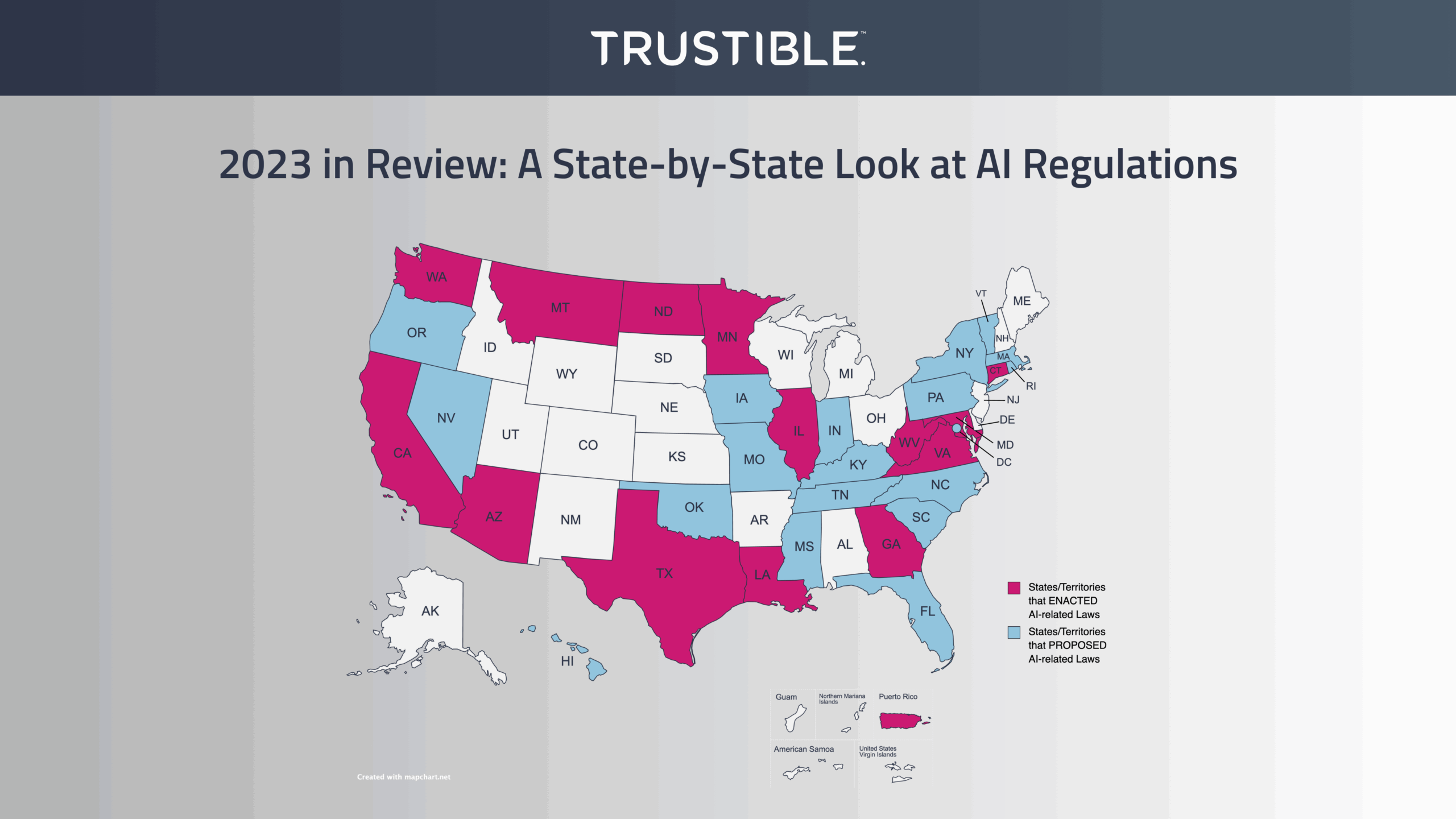

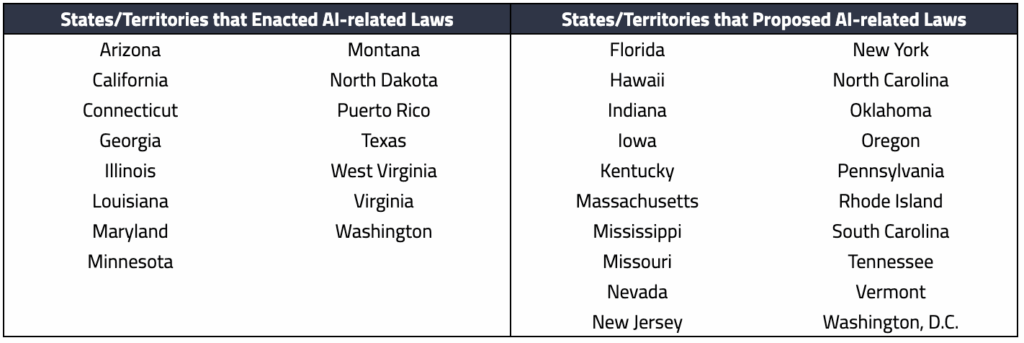

As federal policymakers continue to grapple with how to regulate AI, states are filling the void with their own legislative proposals. The myriad of AI-related legislation from state legislatures in the past year serves as a reminder of how nimble local lawmakers can be when addressing some of the most complex topics (e.g., data privacy), as well as the important role states will play in shaping the AI regulatory landscape.

States Lead the Way in Attempting to Regulate AI

Legislators in approximately 33 states, as well as Puerto Rico and Washington, D.C., considered nearly 200 bills related to AI in 2023. The vast majority of bills focused on regulating sector-specific AI use cases. Only California and Washington, D.C. sought to enact comprehensive AI regulations, but both were ultimately unsuccessful.

A key theme that emerged among the proposed legislation is oversight for high-risk use cases. Additional themes included ways to address discriminatory outcomes when using AI, limit the reliance on AI decisions, and notify individuals about their interaction with an AI system.

Below we provide an overview of legislation that was either considered or enacted by state legislatures in 2023. We focused on legislation that addressed higher risk use cases as it relates to the public sector, employment, health care, and insurance. Our overview is meant to demonstrate how states are considering AI regulations in these various private and public sector contexts, rather than serve as an exhaustive list of every proposed bill or enacted law.

Government Use

States worked to enact laws that addressed how government agencies use AI technologies. Some laws, such as those in Texas and Illinois, created working groups to gain insights into and inventory how state agencies use AI systems. Others sought to comprehensively assess how state agencies developed, used, or procured AI systems.

In June 2023, Connecticut enacted a law that established an Office of Artificial Intelligence (OAI) to annually review and update procedures for state agencies’ design, utilization, and procurement of AI systems that make “critical decisions.” Under the law, critical decisions include such high-risk categories as educational or vocational training, employment, essential utilities, financial services, health care, and housing. The law also requires that impact assessments be conducted on any AI systems that a state agency intends to develop, use, or procure. Beginning on July 1, 2025, the OAI can periodically review any AI system developed, used, or procured by a state agency for discrimination or disproportional impact based on certain characteristics (e.g., race, sex, or age).

In October 2023, California passed a law targeted at state agencies’ use of high-risk AI systems. As in Connecticut, “high-risk” AI systems include those that make decisions about housing, employment, education, and health care. The law requires the state’s Department of Technology to conduct an annual inventory of high-risk AI systems that state agencies use, develop, or procure, or propose to use, develop, or procure. The AI inventory must account for the intended benefits of using the AI system, the categories of personal information the AI system uses to reach its decisions, and the mitigation measures in place to address AI risks (e.g., cybersecurity, inaccuracy, and discrimination).

Employment

A number of state legislatures considered bills that tackled how AI is used to make employment-related decisions.

In recent years, regulating how AI is used by employers during the hiring process gained some momentum. Notably, Illinois became a first mover in regulating how AI technology is used in the hiring process when it enacted the Artificial Intelligence Video Interview Act in 2020. The Illinois law requires, among other things, that employers notify job candidates when they use AI to analyze video interviews, explain how the AI technology works, and obtain consent from the job candidate to use the technology. Additionally, New York City passed NYC 144 in 2021, which prohibited employers from using AI tools during the hiring process unless those tools were subject to an annual bias audit. After a prolonged rule-making process, the New York City law finally took effect on July 5, 2023.

During the 2023 legislative session, states continued to explore regulations for how employers use AI in the hiring process. A proposed bill in New York mirrored NYC 144, in that both require AI tools used for employment decisions to undergo an annual bias audit. However, the New York state legislature’s bill required bias audits for AI used in a broader range of employment decisions (e.g., hiring, determining wages, and termination), whereas the New York City law only regulates AI tools used during the hiring process. New Jersey also sought to place restrictions on AI tools used for hiring decisions. The New Jersey state legislature considered a bill that prohibited the sale of an AI tool used for hiring decisions unless the tool had undergone a bias audit and the results of that audit were provided to the purchaser of the tool.

Beyond the use of AI to hire employees, proposed bills in New York and Vermont also restricted how employers can monitor employees during the course of their workday. Both states sought to restrict the electronic monitoring of employees, limit the use of facial recognition technology in the workplace, and limit how employers use AI to make decisions about such things as disciplinary actions and promotions.

Health Care

Several states sought to address how AI was used for accessing or delivering health care services.

California considered a bill to prohibit health care plans and insurers from discriminating on the basis of race, color, national origin, sex, age, or disability through the use of clinical algorithms. The bill made an exception for the use of clinical algorithms to make decisions that seek to address health disparities among different groups of people. New Jersey proposed a similar bill that prevented health care providers from using automated decision-making tools to discriminate against individuals based on such characteristics as race, national origin, gender, sexual orientation, or age.

Georgia enacted a law that regulated how AI could be used to conduct eye exams, as well as for prescribing glasses or contact lenses. Specifically, the law requires that a licensed optometrist or ophthalmologist use the same standard of care that is expected for in-person eye care when using AI for eye exams. Licensed optometrists or ophthalmologists are also prohibited from relying on AI tools as the sole basis to write a prescription for glasses or contacts.

Illinois proposed a bill that would regulate how hospitals use diagnostic algorithms to diagnose patients. The bill prohibited a hospital from using a diagnostic algorithm unless the hospital certified the algorithm with the Departments of Public Health and Technology and Innovation, demonstrated that the algorithm achieves diagnostic results as accurate as or more accurate than other diagnostic means, and ensured that the algorithm was not the patient’s only available diagnostic method. The bill also required that patients be notified when a diagnostic algorithm is used to diagnose them, be presented with a non-algorithmic diagnosis option, and consent to the use of the diagnostic algorithm.

Finally, Rhode Island and Massachusetts introduced similar bills on the use of AI in mental health services. The bills would require licensed mental health professionals to seek approval from relevant professional licensing boards prior to using AI to provide mental health services. Licensed mental health professionals would be required to notify patients about the use of AI for their mental health services and obtain their consent before using it. Additionally, the AI system being used must be designed to “prioritize the safety and well-being” of the patient, as well as be continuously monitored by the licensed mental health professional to ensure the system’s safety and effectiveness.

Insurance

States sought to enact rules that governed how insurers use AI as part of their decision-making process. These efforts ranged from regulating all insurance providers to targeting specific types of insurers.

Colorado was a first mover for legislation regulating the use of AI by all insurance providers in the state when it passed SB 21-169 in 2021. The law directed the Colorado Division of Insurance (CO DOI) to adopt risk management requirements that prevent algorithmic discrimination in the insurance industry. In 2023, the CO DOI finalized regulations on how life insurers can use external consumer data and information sources (ECDIS) as a component of the life insurance process in a manner that would not result in unfair discrimination. A more detailed explanation of the new regulation’s impact can be found in our November 2023 post titled “Everything you need to know about the Colorado AI Life Insurance Regulation.”

Rhode Island took a similar approach to the 2021 Colorado law by considering how AI was used by any insurer in the state. Specifically, the proposed bill directed the state’s Department of Business Regulation to issue rules that prevent insurers from using ECDIS, as well as any algorithms or predictive models that use ECDIS, in a way that discriminates based on race, color, national or ethnic origin, religion, sex, sexual orientation, disability, gender identity, or gender expression.

Additionally, some states sought to implement rules for specific types of insurers. New York and New Jersey considered bills that addressed the use of AI by auto insurers. New York proposed to restrict auto insurers from using factors such as age, marital status, or income level to adjust any algorithm that determines insurance rates. New Jersey’s proposed bill required auto insurers to submit annual reports to the state’s Department of Banking and Insurance showing that the insurer’s use of an automated or predictive underwriting system was not discriminating based on race, ethnicity, sexual orientation, or religion.

What is next?

States will continue to be the first movers given the federal government’s failure to enact comprehensive AI rules. While only a handful of state bills became law in 2023, it would be imprudent to assume that the bills that stalled will not get a reprise in the 2024 legislative session.