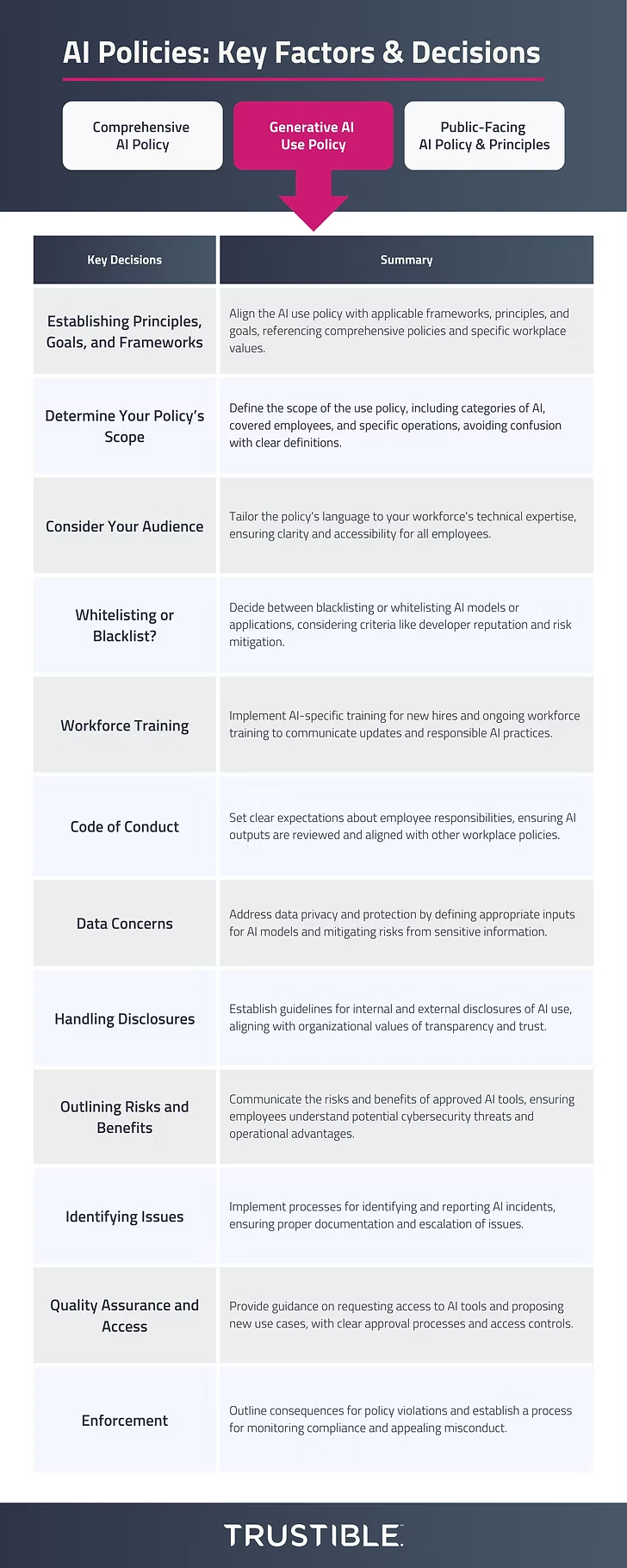

In this series’ first blog post, we broke down AI policies into 3 categories: 1) a comprehensive organizational AI policy that includes organizational principles, roles and processes, 2) an AI use policy that outlines what kinds of tools and use cases are allowed, as well as what precautions employees must take when using them, and 3) a public-facing AI policy that outlines the core ethical principles the organization adopts, as well as its stance on key AI policy issues. In this second blog post on AI policies, we want to explore critical decisions and factors that organizations should consider as they draft their AI use policy.

A recent study found that the vast majority of employees are using AI during the workday. However, those employees were using what is often called ‘shadow AI,’ which means they are using AI systems without alerting their supervisors. This widespread usage is coming at a time when organizations may lack a clear policy for how their workforce can use AI in their day-to-day functions, or have issued an outright ban on AI use in the workplace. In either instance, the organization is exposing itself to risks that include data privacy concerns or the inability to monitor and mitigate AI harms. Moreover, many employees want to leverage AI to improve their productivity. Without clear guidance, the organization is left with both inefficiencies and unmanaged risks.

To address these concerns, organizations should implement an AI use policy for their employees as a way to provide actionable guidance on the appropriate use of AI in the workplace. An AI use policy differs from a comprehensive AI policy in that it governs how an organization’s employees are permitted to use AI in the workplace. While there may be some aspects that are consumer facing, such as disclosing when AI is being used, this type of policy is intended to be internal to the organization. An effective AI use policy will serve as a key reference for employees seeking answers to questions about appropriate AI tools or usage.

An AI use policy also recognizes that AI can provide value in the workplace while establishing effective guidance and oversight for using the technology. Organizations should consider implementing AI use policies as a mechanism to improve transparency and the responsible use of AI at work, rather than imposing an outright ban unless absolutely necessary.

As previously stated, this blog post is not intended to provide an exhaustive list of considerations for your organization’s AI use policy. Each organization will have different needs and considerations, which must be discussed by the appropriate decision-makers. Our suggestions should be read as a starting point and not be construed as offering advice on legal obligations that may be applicable to your organization. Please seek advice from an attorney for further guidance on your organization’s legal obligations.

1) Establishing Principles, Goals, and Frameworks

The AI use policy should align with the applicable frameworks, principles, and goals that will underpin your organization’s comprehensive AI policy. Many organizations’ AI use policies will likely reference their comprehensive policies when it comes to the overarching framing of AI usage. However, there may be some values that are specific to how employees should use AI in the workplace. For instance, if your organization has adopted the OECD’s AI Principles, then your AI use policy may reference certain principles like effective human oversight and accountability. Moreover, some legal frameworks, like the EU AI Act, generally require organizations to educate their workforce on AI usage. An AI use policy can help an organization articulate at a high level how it intends to prepare its employees for AI usage.

Guiding Questions:

- What AI frameworks will be applicable to your organization’s use of AI?

- What are your organization’s goals with allowing your workforce to use AI?

- What specific AI principles align with your organization’s values?

2) Determine Your Policy’s Scope

As noted above, an AI use policy is a subset of your organization’s comprehensive AI policy. Unlike the comprehensive AI policy, the use policy will play an important role in your workforce’s day-to-day operation. Your organization’s use policy may focus on a certain category of AI, such as Generative AI. It is also important to consider who is covered by the use policy and what type of operations are implicated. For instance, the use policy may be applicable to all areas of the workforce, such as using generative AI to assist in drafting emails. In other cases, the policy may apply to specific teams, such as how AI can be used to help generate code by technical teams. Your use policy may also vary by location. For example, there may be aspects of the use policy that only apply to employees in the EU. It is important that each section of the use policy clearly defines what AI models are implicated, as well as who is covered, to avoid confusion.

Guiding Questions:

- What AI systems will fall under the use policy?

- What aspects of the use policy will apply generally to your organization’s workforce?

- How will your use policy address specific AI usage by certain teams?

3) Consider Your Audience

Each organization has a diverse workforce with a wide range of skills and expertise. When drafting your organization’s use policy, consider how the language and substance will be understood by your employees. You should also account for your workforce’s technical sophistication to appropriately tailor the language and tone of your AI use policy. Your workforce’s experience with AI will vary and some employees may not be familiar with AI-specific terminology or concepts, such as “large language models” or “AI incidents.” While workforce training will be key to closing some of these gaps, it is important that your use policy is written in plain language, concise where possible, and includes the appropriate level of context depending on the topic(s) being covered. Providing guidance that is appropriate to your workforce’s expertise can reduce the likelihood of employees violating your AI use policy.

Guiding Questions:

- Who is the intended audience of your use policy?

- What stakeholders were consulted during the drafting of your organization’s use policy?

- How does your use policy define AI-specific terms or concepts?

4) Whitelisting or Blacklisting?

As your organization begins to set out its AI use policy, it needs to consider the process for permitting AI use by its workforce. There are two approaches to take in this regard: blacklisting and whitelisting. Blacklisting takes the stance that all AI models or applications are approved for use with the exception of certain models or applications. When deciding which models or applications to blacklist, consider criteria such as the model or application’s developer, country of origin, and availability of public documentation. These criteria are important because not every AI developer is committed to responsible AI, and the added diligence can help mitigate the risks associated with using such models or applications. Whitelisting is effectively the inverse; the default position is that only certain AI models or applications are approved for use and other models or applications cannot be used for work purposes. When thinking about whitelisting criteria, your organization should consider the model or application’s ability to address a critical need or gap, existing pervasiveness among your workforce, as well as known or reasonably foreseeable risks. Organizations may use a combination of both a whitelist and a blacklist to outline certain prohibited systems, as well as preferred or recommended tools/platforms for certain use cases.

Guiding Questions:

- What concerns does your organization have about allowing your workforce to use AI?

- To what degree does your organization want to allow widespread use of AI by your workforce?

- Does your preferred approach allow your organization to effectively monitor AI usage by your workforce?
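For teams that want to operationalize this choice in internal tooling, the difference between the two approaches comes down to what happens to a tool that appears on neither list. The following is a minimal sketch under stated assumptions, not a recommended implementation: the tool names, the `check_tool` function, and the `default_allow` flag are all hypothetical and exist only to illustrate the logic.

```python
from enum import Enum


class Decision(Enum):
    APPROVED = "approved"
    PROHIBITED = "prohibited"


# Hypothetical tool names, for illustration only.
BLACKLIST = {"unvetted-chatbot"}
WHITELIST = {"approved-assistant"}


def check_tool(tool_name: str, default_allow: bool) -> Decision:
    """Decide whether a tool may be used for work purposes.

    default_allow=True models a blacklisting approach: anything not
    explicitly prohibited is approved.
    default_allow=False models a whitelisting approach: anything not
    explicitly approved is prohibited.
    """
    name = tool_name.strip().lower()
    if name in BLACKLIST:
        # An explicit prohibition always wins, even in a combined approach.
        return Decision.PROHIBITED
    if name in WHITELIST:
        return Decision.APPROVED
    return Decision.APPROVED if default_allow else Decision.PROHIBITED


# The same unlisted tool gets a different answer under each approach.
print(check_tool("new-ai-tool", default_allow=True).value)
print(check_tool("new-ai-tool", default_allow=False).value)
```

Keeping both lists, as in this sketch, mirrors the combined approach described above: prohibited systems are named explicitly, and the default setting determines how permissive the policy is for everything else.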

5) Workforce Training

Introducing employees to new policies and procedures will require an adjustment period for them to understand and internalize these guidelines. A key part of the implementation process is to create training modules to help your workforce understand and acknowledge your new use policy. Your organization should implement AI-specific training for new hires, as well as ongoing workforce training to communicate updates to your use policy. Moreover, your organization should ensure that its technical teams are trained on responsible AI development and design practices. As your organization develops recurring training, it should consider changes in the broader AI policy landscape to keep your employees informed and up-to-date. The level of detail should be appropriately tailored to the size and sophistication of your organization.

Guiding Questions:

- How often should we offer training to our workforce on our use policy and broader developments in the AI policy landscape?

- How will training be tailored to match the needs of specific teams within our organization?

- How will we assess whether our employees understand our AI use policy?

6) Code of Conduct

Your AI use policy should set clear expectations about your employees’ responsibilities as they use permitted AI models and applications. Employees should understand that AI is not always accurate and that they should review outputs before using or relying on them. Errors stemming from your workforce’s use of AI are their responsibility, unless they previously raised concerns about the model or application. Employees should also be aware that their use of AI is subject to your organization’s other existing policies, even if they are not AI-specific (e.g., harassment or non-discrimination policies).

Guiding Questions:

- What process is in place to guide employees as they review AI outputs?

- How does your organization educate its employees on AI issues that may violate other workplace policies?

- How does your use policy address errors by employees that use AI?

7) Data Concerns

Data privacy and protection are among the key risks of using AI. Your organization will likely have a separate data privacy and protection policy, which should be considered when drafting your AI use policy. As your organization thinks about how your workforce will use AI, you should be clear about what input is appropriate for your approved AI models or applications, as well as the harms that could stem from inappropriate inputs. For instance, employees should not input confidential information, such as a company’s financial data, into an AI model or application.

Guiding Questions:

- What data is prohibited from being used as input?

- How can employees learn to draft prompts that avoid oversharing information?

- What risks can stem from inputting sensitive or confidential information into an AI model or application?
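Some organizations may choose to back up input rules like these with a lightweight automated check that flags a prompt before it is sent. The sketch below is illustrative only and rests on several assumptions: the two patterns, the labels, and the `screen_prompt` function are hypothetical, and simple pattern matching will miss most confidential content, so it is a reminder for employees rather than a substitute for training or judgment.

```python
import re

# Hypothetical examples of prohibited input; a real list would come from
# your organization's data privacy and protection policy.
PROHIBITED_PATTERNS = {
    # Matches the common NNN-NN-NNNN layout of a US social security number.
    "ssn_like_number": re.compile(r"\b\d{3}-\d{2}-\d{4}\b"),
    # Matches documents explicitly labeled as confidential.
    "confidential_marker": re.compile(r"\bconfidential\b", re.IGNORECASE),
}


def screen_prompt(prompt: str) -> list[str]:
    """Return the labels of any prohibited patterns found in the prompt."""
    return [
        label
        for label, pattern in PROHIBITED_PATTERNS.items()
        if pattern.search(prompt)
    ]


findings = screen_prompt("Summarize this CONFIDENTIAL forecast.")
if findings:
    print("Review before sending:", ", ".join(findings))
```

A check like this flags issues for the employee to review rather than silently blocking the prompt, which keeps the employee responsible for deciding what is appropriate to share.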

8) Handling Disclosures

As your organization introduces its workforce to AI, you should consider under what circumstances your organization or employees will disclose internal AI uses. Disclosing when and how your workforce uses AI should align with your organization’s underlying values, such as being transparent or building trust. Disclosures can be internal (i.e., employees disclosing to other employees) or external (i.e., employees disclosing to external stakeholders). When deciding which AI models or applications are permitted for use by your workforce, your organization should also consider the degree of transparency provided by the organizations that developed the model or application.

Guiding Questions:

- Under what circumstances should employees disclose to others in the organization that AI was utilized?

- What type of disclosure could be provided for external content created by employees using AI?

- How will your organization disclose its choice of AI models or applications?

9) Outlining Risks and Benefits

As AI models or applications are being approved for use by your workforce, you should clearly communicate the risks associated with these approved AI tools. Understanding the risks can help your employees use the technology more responsibly, as well as better identify instances of misuse. For instance, employees should understand the unique cybersecurity risks posed by AI models and applications. Such risks include creating malicious outputs that can harm your organization’s technical infrastructure or manipulating employees to provide sensitive information (e.g., social security numbers or bank account information). At the same time, your employees should also understand the range of benefits that permitted models or applications provide at an individual and organizational level. This could include automating repetitive tasks to allow employees to focus on more substantive operations or providing an added level of review for quality assurance.

Guiding Questions:

- How can your organization communicate risks associated with approved AI models or applications to your workforce?

- How can your organization ensure that the benefits of approved AI models or applications are understood and realized?

- How will your organization ensure that new risks or benefits are clearly communicated to your workforce?

10) Identifying Issues

Providing avenues for feedback will be critical to ensuring that permitted AI tools are functioning as intended. Moreover, AI laws like the EU AI Act require organizations to have processes in place to document AI incidents. This means that your organization will need to implement a process that helps your workforce identify when issues or misuse occur and submit formal or informal feedback. Your AI use policy should identify what that process will be, as well as what mechanisms are in place to appropriately escalate AI issues or misuse when they arise.

Guiding Questions:

- How are employees trained to identify possible incidents that may arise from your approved AI models or applications?

- What intake process is in place for employees to report AI incidents?

- How will your organization communicate to its workforce about identified AI incidents and their impact(s)?

11) Quality Assurance and Access

Your organization may permit a number of AI tools depending on its size or scale. Alternatively, you may want to experiment with or implement new AI models or applications for your employees to use. An effective use policy should offer sufficient guidance on how employees can request access to existing AI tools or propose new AI use cases. Access controls on existing AI tools allow the organization to increase oversight and accountability for those employees using the tools. In addition, when new or proposed use cases arise, employees will have a clear understanding of the approval process. As with existing AI tools, novel AI use cases should implement access controls to safeguard against inappropriate or unintended use.

Guiding Questions:

- How is permission granted or denied for existing AI tools or proposed use cases?

- What information needs to be submitted for a proposed use case?

- What team or individual is responsible for approving access to AI tools and proposed use cases?

12) Enforcement

While it is important for your organization to have a thorough AI use policy, it is equally important to understand how it will be enforced. Ensuring that your workforce is held accountable for violations of the use policy is critical to its success. Your use policy should outline a clear set of consequences for known violations and a process for employees to appeal findings of misconduct. Your workforce is responsible not only for understanding the contents of your AI use policy, but also for recognizing that they will be held accountable for non-compliance.

Guiding Questions:

- How are instances of non-compliance with the use policy escalated?

- How are consequences allocated based on the degree and repetition of non-compliance?

- How will your organization monitor for non-compliance with the use policy?

–

Developing AI policies for your organization can be overwhelming. Trustible’s Responsible AI Governance Platform helps organizations develop their AI policies by leveraging a library of templates, guiding questions, and analysis tools to ensure their policy is aligned with their values, compliant with global regulations, and equipped to manage risks.

To learn more about how Trustible can enable your organization to develop and implement a robust AI governance program, contact us here.