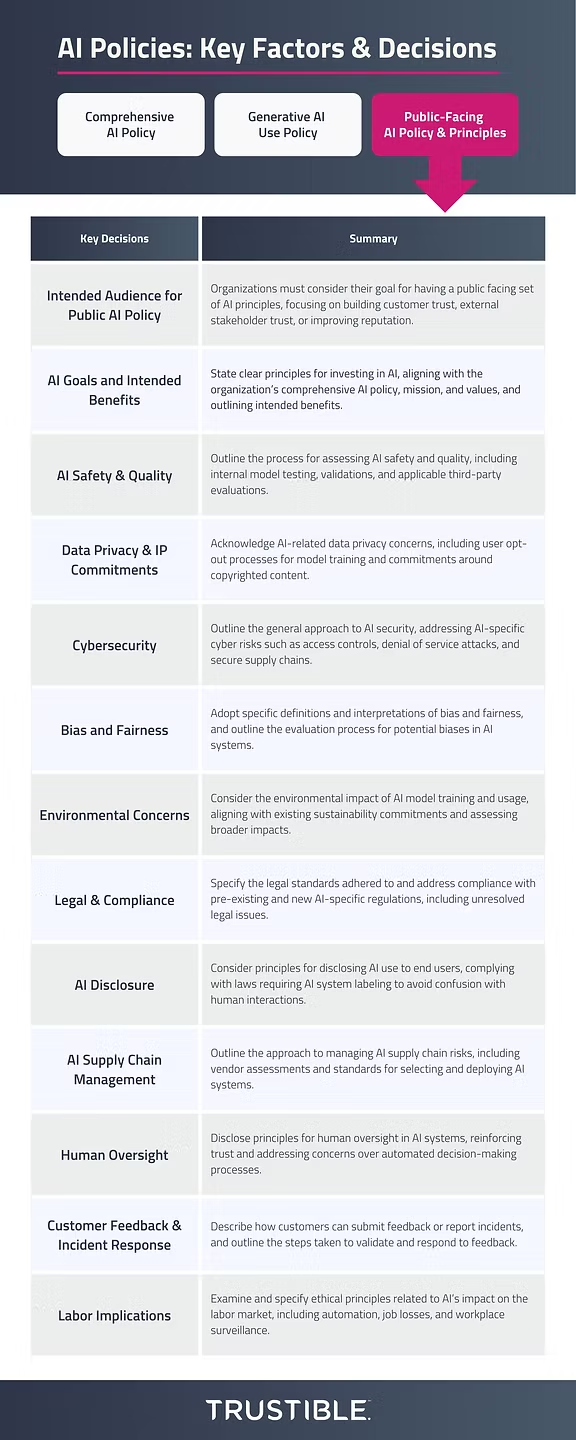

In our final blog post of this AI Policy series (see Comprehensive AI Policy and AI Use Policy guidance posts here), we want to explore what organizations should make available to the public about their use of AI. According to recent research by Pew, 52 percent of Americans feel more concerned than excited by AI. This data demonstrates that, while organizations may understand or realize the value of AI, their users and customers may harbor some skepticism. Policymakers and large AI companies have sought to address public concerns, albeit in their own ways.

Policymakers have reacted by proposing or enacting laws to regulate AI. Meanwhile, AI companies have begun building out AI governance functions and programs to ensure their AI tools are being developed and deployed responsibly. Yet, despite the steps taken to assuage concerns over AI, there remains a trust gap between companies and the public over their AI systems’ safety, ethics, and regulatory compliance.

Many organizations are seeking to build more trust by making their AI principles and policies publicly available. We call this an organization’s public AI policy, and it’s often meant to provide transparency into the AI governance operations of the organization. While some may see these public policies as a way to manage an organization’s reputation, there can be serious legal and regulatory ramifications if a company does not adhere to its own public AI policy.

In this blog post, we cover 13 different decisions organizations need to make when drafting and publishing their public AI policies, as well as provide some guidance to help organizations start drafting theirs.

As previously stated, this blog post is not intended to provide an exhaustive list of considerations for your organization’s public AI policy. Each organization will have different needs and considerations, which must be discussed by the appropriate decision-makers. Our suggestions should be read as a starting point and not be construed as offering advice on legal obligations that may be applicable to your organization. Please seek advice from an attorney for further guidance on your organization’s legal obligations.

1) Intended Audience for Public AI Policy

Organizations must consider what their goal is for having a public-facing set of AI principles. For organizations building and commercializing AI products or services, the public-facing policy can help build customer trust, with the end goal of increasing revenue and improving customer outcomes. Organizations that do not directly commercialize AI products or services may have other goals, such as building trust with external stakeholders (e.g., investors) or improving the organization’s reputation with the broader public. These goals are not mutually exclusive, but understanding which ones are most aligned with your overall AI goals will help determine which principles to focus on and the level of detail required for your public AI policy.

Guiding Questions

- Who is the target audience for our public AI policy?
- Do we want to directly commercialize AI products/services now or in the future?
- How detailed do we want to be with our public AI policy?

2) AI Goals and Intended Benefits

AI is a fast-moving, highly capable technology that comes with unique risks and potentially high costs. An organization’s public AI policy should state clear principles for why the organization is choosing to invest in AI, and how that choice affects its other ethical AI principles. This should align with the organization’s comprehensive AI policy, mission, and stated values. AI may be used to deliver new product features or capabilities, optimize internal processes, or even to create a more diverse and inclusive work environment. In specific sectors, the goals may focus not only on the benefits to the AI system deployer, but also on the benefits to those affected. For example, in education, a school system could benefit operationally from AI tools that free teachers and staff from tedious tasks, which in turn benefits the students.

Guiding Questions

- Is our adoption of AI primarily to enhance our organization?
- Why do we think AI can help our organization’s core mission?
- Are there specific types of AI systems or uses that our organization will not develop?

3) AI Safety & Quality

Organizations should outline their general process for assessing AI safety and quality. This can range from discussing internal model testing and validation, to disclosing how go/no-go decisions are made, to outlining applicable third-party evaluations. Even high-level details about what steps are involved can help build trust with non-technical audiences. Organizations may also want to highlight sector-specific harms that they test for, or consider, as part of their safety and AI quality commitments, and to describe how they monitor for new risks and harms that emerge over time.

Guiding Questions

- What steps do we have in our AI quality control process?
- What are some of the specific harms that we look for in our process?

4) Data Privacy & IP Commitments

There are many AI-related data privacy concerns that organizations may want to acknowledge. While many of these issues may be covered under existing public privacy policies, there are some AI-specific concerns worth mentioning. For example, an organization might describe a clear process for how a user may opt out of having their personal data used for model training, or how a content creator can assess whether their copyrighted material is being used inappropriately in the model.

Guiding Questions

- Do we allow people to opt out of having their data used for model training?
- What are our commitments around copyrighted content?

5) Cybersecurity

While the field of AI security is quickly evolving, organizations may want to outline their general approach to AI security. Some of these processes may already be described in an organization’s privacy policy, but there are AI-specific issues worth addressing. AI-specific security measures may include ensuring appropriate access controls for models, preventing denial-of-service attacks against critical AI systems, and ensuring models have a secure supply chain. Organizations should align their AI security policy with existing public-facing security commitments.

Guiding Questions

- What AI-specific security controls do we have in place?
- How do we protect our data and models from unauthorized access or use?

6) Bias and Fairness

AI systems raise rightful concerns over bias and fairness. However, there is no consensus on what constitutes bias and fairness. Organizations may want to adopt specific definitions and interpretations of bias and fairness when assessing AI systems. This is particularly true when relying on historical datasets, as AI models can easily perpetuate stereotypes, discriminate unfairly against certain groups, or misrepresent things.

Guiding Questions

- What definitions of bias and fairness do we use?
- How are AI systems evaluated for potential biases?

7) Environmental Concerns

AI model training and use can come with environmental concerns that may intersect, or conflict, with an organization’s existing sustainability commitments. Even an organization that does not directly train AI systems may have an environmental impact from heavy usage of its existing systems. Environmentally focused organizations may want to consider additional environmental impacts from lower in the AI supply chain, such as rare mineral harvesting or resource exhaustion. Several Environmental, Social, and Governance (ESG) metric reporting frameworks are looking to scope AI issues into their metrics and may help organizations in assessing their own AI environmental impacts.

Guiding Questions

- How will AI impact our sustainability goals?

8) Legal & Compliance

While several regulations targeting AI specifically have recently been proposed or enacted, many pre-existing laws still apply to AI-driven systems. There are several unresolved legal issues around the use of copyrighted data for model training, liability for automated decision-making, and intellectual property protections for AI. Organizations may want to specify the legal standards that they will adhere to, or address how they are generally tackling compliance with these laws.

Guiding Questions

- How does your organization handle use of copyrighted data in AI models?
- What are the terms and conditions for your AI services?

9) AI Disclosure

AI can be used in a wide variety of ways, ranging from simply providing analytical scores about data to powering an interactive chatbot or agent that directly interacts with humans. Many current algorithms and other forms of software automation can be powered with AI behind the scenes to provide additional capabilities. Organizations may want to consider what principles they want to publish around disclosing AI use to end users. Notably, some laws (e.g., the EU AI Act) require AI chatbots or agents to be labeled as such so that users do not mistake them for actual humans.

Guiding Questions

- Will you always disclose when an AI system is being used?
- How will you disclose this information?
- How can users opt out of, or appeal the results of, any automated decision-making process?

10) AI Supply Chain Management

AI systems have complex supply chains, which raise upstream and downstream concerns. Upstream issues may include where the data for foundational models is sourced. Downstream issues could include how your organization’s vendors manage the data that you put into their AI systems. Managing the third-party risks related to AI is a challenge that many organizations are struggling with, especially as AI is introduced to pre-existing vendor tools. Organizations may want to outline their general approach to selecting which AI systems they build or deploy, as well as how they mitigate AI-related risks in the vendors they work with.

Guiding Questions

- What vendor assessments are done?
- What standards or principles does your organization hold your vendors to?

11) Human Oversight

AI deployment patterns range from systems with heavy human involvement (i.e., human-in-the-loop) to systems that are fully autonomous. There can be ethical, legal, and performance concerns with different deployment types, and some organizations may have specific principles for what amount of human oversight they require for different systems. Disclosing these principles at a high level can help an organization reinforce trust, as well as address concerns over highly automated decision-making systems.

Guiding Questions

- Will humans always be in the loop for your AI systems?
- How will you ensure the humans in the loop have sufficient expertise in the subject matter area?

12) Customer Feedback & Incident Response

An organization’s public-facing AI policy should generally describe how customers can submit feedback or report incidents, as well as the steps that may be taken to validate and respond to that feedback. While there has been a lot of recent progress on enhancing the robustness and reliability of AI systems, there are still numerous opportunities for system error. Many organizations have multiple layers of internal monitoring, such as detection of potentially malicious inputs, log scanning, and performance metrics. Additionally, certain AI regulations require organizations to implement an external monitoring system. External monitoring includes ensuring that AI system customers or users can easily report issues with the system and that the system provider appropriately investigates each issue.

Guiding Questions

- How can users report feedback or failures about an AI system?
- What steps will be taken to validate and assess these failures?

13) Labor Implications

AI’s pervasiveness has raised concerns about its impact on the labor market. These concerns include automation and job losses, conflicts with content creators, and workplace surveillance. Organizations may want to examine and specify what ethical principles they are seeking to uphold related to AI-labor issues.

Guiding Questions

- What role will automation play in our products / services?

–

Even with this guidance on key factors and decision-points, developing your organization’s AI Policy can be overwhelming. Trustible’s Responsible AI Governance Platform helps organizations develop their AI policies by leveraging a library of templates, guiding questions, and analysis tools to ensure their policy is aligned with their values, compliant with global regulations, and equipped to manage risks.

To learn more about how Trustible can enable your organization to develop and implement a robust AI governance program, contact us here.