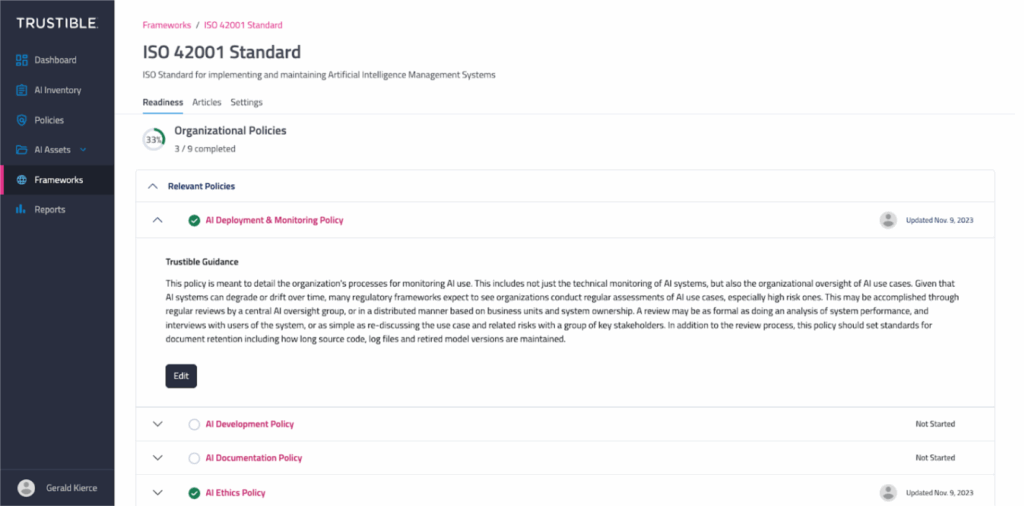

Today, we’re excited to announce that customers can now access the ISO 42001 standard within the Trustible platform, making Trustible the first AI governance company to offer the standard on its platform.

ISO 42001 is positioned to be the first global, auditable standard designed to foster trustworthiness in AI systems by setting baseline governance for all AI technologies within an organization. This voluntary standard encourages organizations to establish a robust AI management system, with comprehensive policies, procedures, and objectives that ensure the responsible development and deployment of AI.

ISO 42001 joins the existing library of frameworks that customers can leverage on the Trustible platform, including the NIST AI Risk Management Framework, the EU AI Act (latest form), Colorado Regulation 10-1-1, and more. Our mission remains to make it easy for customers to adopt new and existing frameworks that demonstrate trust and manage risk for their organizations.

Key Features of ISO 42001 in Trustible’s Platform:

- Documentation – What information about the AI management system must be documented, reviewed, and approved?

- AI Policies – What AI policies need to be in place to protect our organization, users, and society?

- Risk and Impact Assessments – How do we assess and mitigate potential risks and harms posed by AI systems?

- Collaboration – How can we work with colleagues across teams and communicate this work to our stakeholders?

- Insights – How do I know what risks, benefits, or compliance obligations may exist across my AI systems and the jurisdictions I operate in?

Trustible’s integration of ISO 42001 serves organizations of all sizes, giving them a scalable, detailed roadmap toward compliance with this emerging AI governance standard. Although ISO 42001 is voluntary, adopting it through Trustible’s platform enables customers to demonstrate trust to stakeholders and get ahead of the evolving regulatory environment.

Ready to learn more?