On December 9, 2023, European Union (EU) policymakers reached an agreement on the proposed Artificial Intelligence (AI) Act, which sets the stage for the EU to pass the AI Act as early as January 2024. The impending vote on the compromise legislation marks a significant development in the global AI regulatory landscape, one that American companies cannot afford to ignore.

While the eventual law will apply directly to EU countries, its extraterritorial reach will affect U.S. businesses in profound ways. A U.S.-based company will not be immune to the obligations imposed by the upcoming Act. U.S.-based businesses producing AI-related applications or services that either affect EU citizens or supply EU-based companies will be responsible for complying with the EU AI Act. U.S. companies already face comparable extraterritorial obligations under the EU’s General Data Protection Regulation (GDPR).

What to Expect: Unpacking the Implications

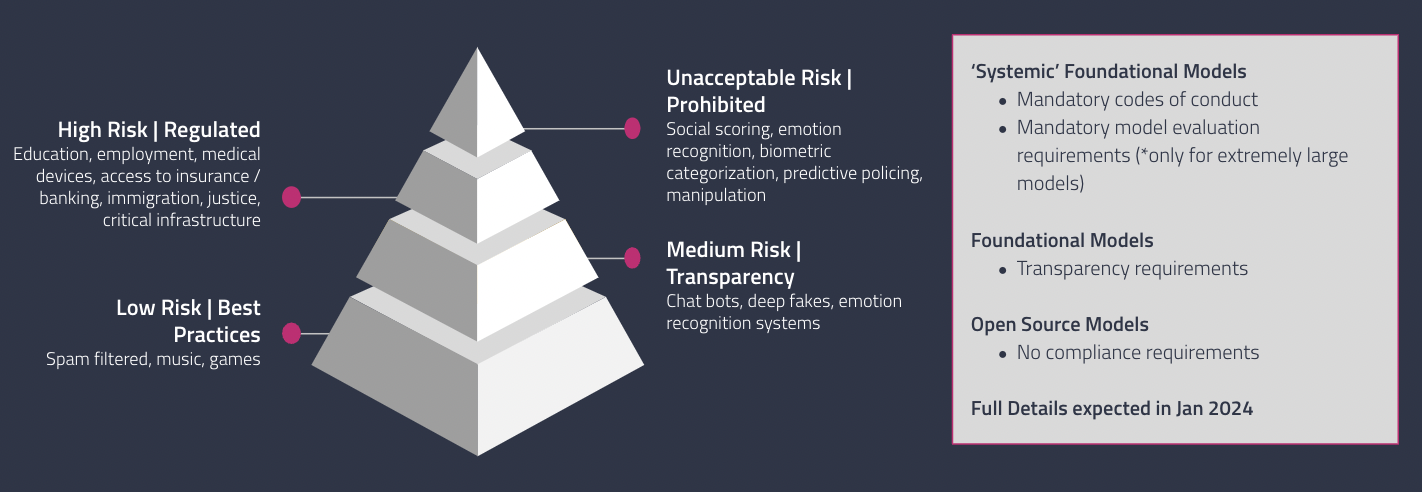

The EU AI Act is the first major regulatory framework of its kind and categorizes AI applications into risk-based tiers. Companies deploying AI for high-risk use cases will need to comply with stringent requirements under the AI Act. These include:

- Detailed risk management policies;

- Documentation requirements across the AI deployment lifecycle;

- Transparency and human oversight obligations; and

- Elevated standards for accuracy, robustness and cybersecurity.

Any company with EU operations, including U.S.-based ones, faces substantial fines of up to 7% of annual revenue or €35 million for noncompliance. However, the Act’s influence does not stop at businesses with a European footprint.
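To gauge exposure, the penalty ceiling can be expressed as a simple calculation. The sketch below is illustrative only: it assumes that the higher of the two caps applies and that "annual revenue" is a single company-wide figure in euros, neither of which is spelled out above. The function name `max_fine_eur` and its parameters are hypothetical.

```python
def max_fine_eur(annual_revenue_eur: int,
                 pct_cap: int = 7,
                 fixed_cap_eur: int = 35_000_000) -> float:
    """Estimate the ceiling on a fine for a given annual revenue.

    ASSUMPTION: the applicable ceiling is the higher of the percentage
    cap and the fixed cap. Confirm against the final legal text.
    """
    if annual_revenue_eur < 0:
        raise ValueError("annual revenue must be non-negative")
    percentage_based = annual_revenue_eur * pct_cap / 100
    return max(percentage_based, fixed_cap_eur)


# A company with EUR 1 billion in revenue: 7% exceeds the fixed cap.
print(max_fine_eur(1_000_000_000))
# A company with EUR 100 million in revenue: the fixed cap dominates.
print(max_fine_eur(100_000_000))
```

Under these assumptions, the percentage cap overtakes the fixed cap once annual revenue passes €500 million.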

Companies without direct EU operations may be swept up by the broad reach of the AI Act simply for being within the supply chain of EU-based companies. For instance, purchasers across the AI supply chain may demand proof of compliance, particularly in highly regulated industries such as finance, manufacturing, and government services. The EU AI Act also delegates some responsibility to European Standards Organizations (ESOs). Companies should expect these ESOs to establish formal controls in the near future that may become the de facto international standards. Costs to retrofit AI technologies to fit these standards will only increase the longer developers wait. Moreover, in the wake of its expected passage, the AI Act may prompt other countries to enact similar regulatory schemes, much as the EU’s GDPR launched a wave of similar privacy regulations.

How to Prepare: Tactical To-Dos for American Companies to Get Ahead of the EU AI Act

Given the scale and scope of AI technologies, as well as the cross-border reach of the EU’s AI regulations, U.S.-based companies should consider their position in the global AI supply chain (i.e., supplying components or services for AI systems) and how their customer relationships in the EU, as well as their business operations, will be affected by the EU AI Act. In the interim before the EU AI Act comes into effect, American companies with business relationships or operations in the EU can take some proactive initial steps to stay ahead of the regulatory curve. While these initial actions are simple, particularly for the many non-high-risk applications, building an infrastructure of appropriate practices now will save many headaches down the line. But what exactly do those initial steps look like?

1. Understand Where Your Company is Using AI:

Understanding the scope of what constitutes AI under the EU AI Act is the critical first step to knowing how and where your company uses AI. The compromise text of the EU AI Act aligns with the Organisation for Economic Co-operation and Development’s (OECD) AI definition and would include software that is developed with:

- Machine-learning (supervised, unsupervised, reinforcement, and deep learning);

- Logic and knowledge-based approaches (knowledge representation, inductive/logic programming, knowledge bases, inference and deductive engines, symbolic reasoning, and expert systems); and

- Statistical approaches (including Bayesian estimation as well as search and optimization methods).

This definition covers a wide array of AI applications, from the latest GenAI chatbot to robotic process automation.

By understanding the breadth of AI applications covered by the EU AI Act, you can begin to identify and inventory which parts of your operations could be affected by the Act.
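An inventory of this kind can start as a very small data structure. The following is a minimal sketch, not a prescribed format: the `Technique` categories mirror the three families listed above, while the `SystemRecord` fields, the `serves_eu` flag, and the sample entries are hypothetical and would need to reflect your own systems.

```python
from dataclasses import dataclass
from enum import Enum


class Technique(Enum):
    MACHINE_LEARNING = "machine learning"
    LOGIC_KNOWLEDGE = "logic and knowledge-based"
    STATISTICAL = "statistical"
    NONE = "none of the listed approaches"


@dataclass(frozen=True)
class SystemRecord:
    name: str
    business_unit: str
    technique: Technique
    serves_eu: bool  # hypothetical flag: EU users or EU-based customers


def potentially_in_scope(inventory: list[SystemRecord]) -> list[SystemRecord]:
    """Keep systems that use a listed technique and touch the EU."""
    return [
        s for s in inventory
        if s.technique is not Technique.NONE and s.serves_eu
    ]


# Illustrative entries only.
inventory = [
    SystemRecord("support chatbot", "customer service",
                 Technique.MACHINE_LEARNING, serves_eu=True),
    SystemRecord("invoice routing rules", "finance",
                 Technique.LOGIC_KNOWLEDGE, serves_eu=True),
    SystemRecord("demand forecast", "operations",
                 Technique.STATISTICAL, serves_eu=False),
    SystemRecord("static price list", "sales",
                 Technique.NONE, serves_eu=True),
]

for record in potentially_in_scope(inventory):
    print(record.name, "-", record.technique.value)
```

A filter like this only flags candidates for review; whether a given system actually falls under the Act remains a legal determination.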

2. Classify the Risk Level for Each AI Use Case:

Similar to other existing and emerging AI management frameworks (e.g., NIST’s AI Risk Management Framework), the EU AI Act categorizes compliance obligations for AI systems based on their risk of harm to the end user. High-risk AI systems include, but are not limited to, those use cases that involve medical devices, employment decisions, educational and vocational training, voting, accessing financial services or insurance, critical infrastructure, and criminal justice decisions.

To understand your organization’s regulatory burden and allocate resources accordingly, it is crucial to overlay your AI inventory with a risk classification for each AI use case. For instance, AI tools used to assist your company with making decisions to approve or deny credit should be classified as “high-risk.” Use cases should be assessed on a regular basis to determine whether a once low-risk use case has transitioned to a high-risk use case. For example, the risk level of a predictive maintenance model might change if the model is later applied to critical infrastructure. Regular tracking and assessment of where and how AI is applied ensures that you are continually aware of the risk levels associated with your AI use cases and can adjust your compliance obligations as necessary.
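The overlay and periodic reassessment described here can be sketched in a few lines. This is an illustration under stated assumptions: the domain labels in `HIGH_RISK_DOMAINS` paraphrase the examples given above and are not an exhaustive or official list, and the `classify` and `reassess` helpers are hypothetical. Because the high-risk examples are not exhaustive, anything unmatched is marked "needs review" rather than low-risk.

```python
# Paraphrased from the high-risk examples above; NOT an official list.
HIGH_RISK_DOMAINS = {
    "medical devices",
    "employment decisions",
    "education and vocational training",
    "voting",
    "financial services or insurance access",
    "critical infrastructure",
    "criminal justice",
}


def classify(domain: str) -> str:
    """Return a provisional tier for the domain a use case operates in."""
    if domain.strip().lower() in HIGH_RISK_DOMAINS:
        return "high-risk"
    return "needs review"


def reassess(previous_tiers: dict[str, str],
             current_domains: dict[str, str]) -> dict[str, tuple[str, str]]:
    """Report use cases whose tier changed: name -> (old tier, new tier)."""
    changes = {}
    for name, domain in current_domains.items():
        new_tier = classify(domain)
        old_tier = previous_tiers.get(name)
        if old_tier is not None and old_tier != new_tier:
            changes[name] = (old_tier, new_tier)
    return changes


# Initial assessment of two illustrative use cases.
domains = {
    "credit approval": "financial services or insurance access",
    "predictive maintenance": "factory equipment",
}
tiers = {name: classify(domain) for name, domain in domains.items()}

# Later, the maintenance model is redeployed to critical infrastructure.
domains["predictive maintenance"] = "critical infrastructure"
print(reassess(tiers, domains))
```

Running the reassessment on a schedule, and whenever a system is redeployed, surfaces tier changes such as the predictive maintenance example before they become compliance gaps.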

3. Ask Your Vendors for Their EU AI Act Compliance:

Under the EU AI Act, all suppliers within the AI supply chain can be held liable for compliance. It is essential to confirm that your vendors also comply with the EU AI Act, as their non-compliance can have significant legal repercussions for your company. Companies should consider reviewing and updating their existing vendor contracts to include AI governance provisions. This step will safeguard your company against potential liabilities and ensure that all parts of your AI supply chain comply with the relevant portions of the EU AI Act.

4. Train Staff Across Compliance, Policy, Legal, and Risk:

The implementation of a new regulatory scheme for AI systems will give rise to demand for AI-specialized risk management talent. There are two approaches for companies to consider with their existing and new employees. First, you should invest now in hiring staff familiar with AI technological risk management across compliance, policy, and legal functions. Second, you should design or recommend training programs for existing staff to get up to speed on developments in the AI regulatory space, as well as to develop the necessary skills for AI risk management. These investments will prepare your team for current and future regulatory developments in AI governance.

5. Define a Simplified AI Policy:

The AI regulatory space will continue to evolve as new regulations are enacted and novel AI use cases are developed. While implementing robust policies and procedures will be a key component for compliance, having a simplified version of your AI policies is also vital. Maintaining a streamlined version of your organization’s complete AI policies and procedures can provide a clear framework for AI-related decisions and practices within your company. This policy should serve as a single source of truth to address questions from both employees and customers alike.

6. Invest in Adaptable AI Technologies:

The new EU AI Act requirements are complex and will require a high degree of collaboration throughout the organization to meet compliance obligations. Companies should consider the universe of technologies that exist to help simplify the compliance process. Investing in adaptable AI technologies that can be easily configured to address a changing and challenging regulatory landscape is essential. This investment will position your company to quickly adapt to new regulations, remain compliant, and maintain a competitive advantage.

What is Next: Act Now or Be Left Behind

The EU AI Act is no longer an aspirational political exercise, and U.S. companies cannot afford to watch passively from the sidelines. Rather, the EU AI Act is a harbinger of the future of AI regulation that will require significant resources and strategic planning from businesses worldwide. For U.S. companies, the message is clear: proactive adaptation is better than reactive compliance. The tactical steps outlined above can help U.S. companies begin to align with the EU AI Act and stay ahead of the regulatory curve.