Summary

- AI governance became operational this year, moving from principles and pilots to real production as enterprises deployed AI deeper into workflows, decisions, and customer experiences.

- Trustible delivered the foundation organizations needed for this shift, strengthening intelligence, collaboration, automation, and change-management capabilities so governance teams can run continuous, scalable programs and support faster AI adoption.

- Our customers gained unprecedented visibility and control, cutting AI footprint discovery time nearly in half, accelerating cross-functional reviews, and reducing manual governance workload with expert-driven automation.

- Year Two governance emerged as the new frontier, focused on monitoring drift, managing ongoing compliance, adapting to evolving regulations, and sustaining program health as AI systems and organizations grow more dynamic.

- Looking ahead, Trustible is preparing for the era of agentic AI governance, introducing an orchestration layer that detects meaningful signals, highlights emerging risks, suggests interventions, integrates across existing risk and tech stacks, and empowers every stakeholder to contribute clearly and confidently.

A Look Back at 2025

In 2025, AI governance shifted from principles and pilots to real production, and Trustible delivered the foundation governance teams needed to run the continuous, scalable programs that faster AI adoption demands.

Our strengthened intelligence, collaboration, automation, and change management capabilities helped enterprises deploy AI deeper into workflows, decisions, and customer experiences.

Outcomes included:

- Customers gained unprecedented visibility and control, cutting the time needed to inventory their AI nearly in half, accelerating cross-functional reviews, and reducing their manual governance workload with expert-driven automation.

- As AI systems and organizations grow more dynamic, Year Two governance has emerged as the new frontier, focused on monitoring drift, managing ongoing compliance, adapting to evolving regulations, and sustaining program health.

As we prepare for the era of agentic AI governance, we are introducing an orchestration layer that detects meaningful signals, highlights emerging risks, suggests interventions, and integrates across existing risk and tech stacks, all while empowering every stakeholder to contribute clearly and confidently.

This year marked a decisive shift in the enterprise AI landscape, as principle-driven frameworks and controlled pilot projects moved rapidly into production. AI now touches core workflows, customer interactions, and high-stakes decisions. Autonomy is increasing, risks are more complex, and expectations from regulators and boards are rising just as fast.

Clearly, governance is no longer optional; it’s an operational capability that organizations must run, measure, and improve the same way they run cybersecurity or traditional GRC.

Trustible was built for this moment. Our mission has always been to safely accelerate enterprise adoption of AI. Over the past year, we've translated that mission into capabilities that deliver tangible value to teams every day. As customers shifted from governance theory to governance practice, we focused on the four areas where organizations most often struggle: intelligence at scale, collaboration, automation, and change management.

1. Intelligence at Scale

The challenge: Turn episodic assessment into a continuous, informed process.

AI governance is inherently cross-functional. It touches legal, security, risk, data, engineering, procurement, privacy, compliance, operations, and product — often in a single use case review. But in most organizations, these teams don’t share a common workspace, process, or language. That lack of structure creates friction, delays, and confusion over both ownership and process.

Customers repeatedly told us the same thing: getting people involved wasn’t the hard part. It was aligning those people around a coordinated workflow. Tasks arrived out of sequence and ad hoc, stakeholders were overloaded with information they didn’t need, and ownership was unclear. Even motivated organizations struggled to maintain momentum across teams.

This problem isn’t about willingness; it’s about infrastructure.

How we addressed the challenge:

To give organizations the clearest possible view of their AI landscape, we strengthened the Trustible platform’s intelligence layer with:

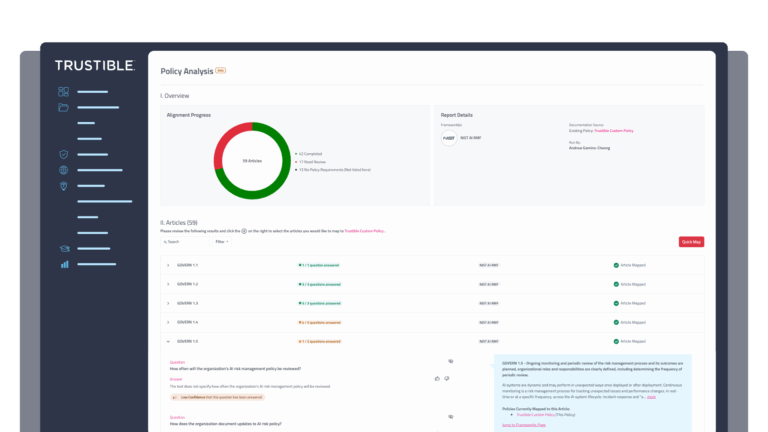

- Additional framework support, including for the Singapore Model AI Governance Framework, Databricks AI Governance Framework, U.S. National Security AI Framework, and others.

- A unified risk and mitigation model that captures inherent and residual risk shows how mitigations map across multiple threats and highlights remaining exposure.

- A structured Model Evaluations module that supports documentation, testing, validation, and performance tracking over time, enabling real governance of model behavior.

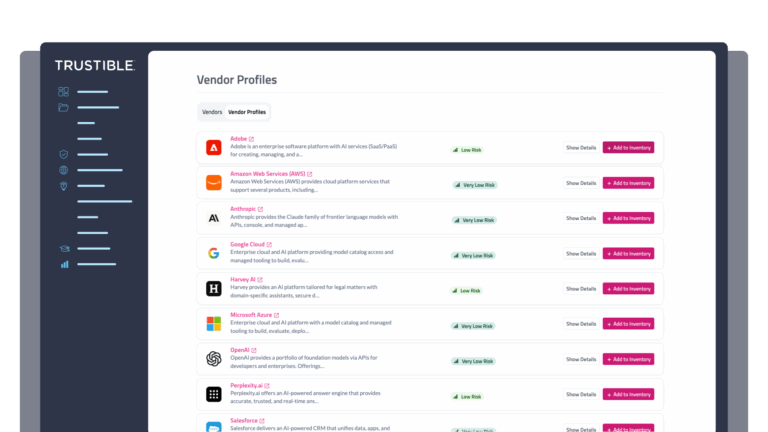

- Expanded Vendor Intelligence, with category-level scoring, transparency into scoring logic, improved documentation, and a redesigned vendor workflow.

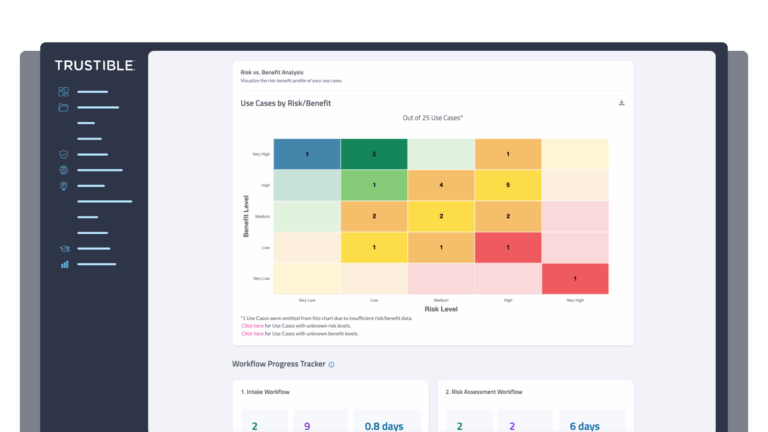

- A more powerful dashboard and reporting experience that surfaces insights across risk posture, AI deployment activity, departmental adoption, workflow performance, and benefit analysis.

- Flexible filtering across dashboards, inventories, and reports, giving teams the ability to quickly segment their AI footprint by any attribute, pattern, or linked asset and support executive visibility on an organization’s AI outcomes.

The outcome:

- Organizations reduced the time required to compile a full AI inventory by 40–60% after centralizing use cases, models, and vendors into Trustible.

- Governance teams identified high-risk or poorly documented use cases 2–3x faster thanks to standardized residual-risk scoring and mitigation mapping.

- Model and vendor evaluations became more complete and audit-ready, with 30–50% fewer documentation gaps compared to pre-Trustible processes.

- Several teams uncovered redundant and overlapping use cases during onboarding, ultimately reducing duplicative use cases by 10–15% in their first year.

2. Collaboration

The challenge: Build an efficient, cross-functional infrastructure

Before organizations can govern their AI, they need to know what exists, who owns it, how it works, and what it touches. Seems obvious, but use cases emerge organically, teams experiment on their own, third-party vendors quietly introduce AI into products while providers constantly change capabilities.

Challenges from outside the enterprise are just as great. Many organizations don’t have the resources to keep up with changes to regulations, standards, legislative action, emerging risks, AI incidents, legal cases and more.

A fragmented operational landscape stalls effective governance due to lack of clarity. This uncertainty slows decision-making, makes leaders nervous, confuses teams, and blocks progress on the very controls and safeguards organizations want. Our customers describe it as “trying to govern in the dark.” Without capacity, without embedded intelligence, governance becomes reactive and often implemented too late.

How we addressed the challenge:

We invested in making Trustible the place where governance work gets done collaboratively. Key improvements included:

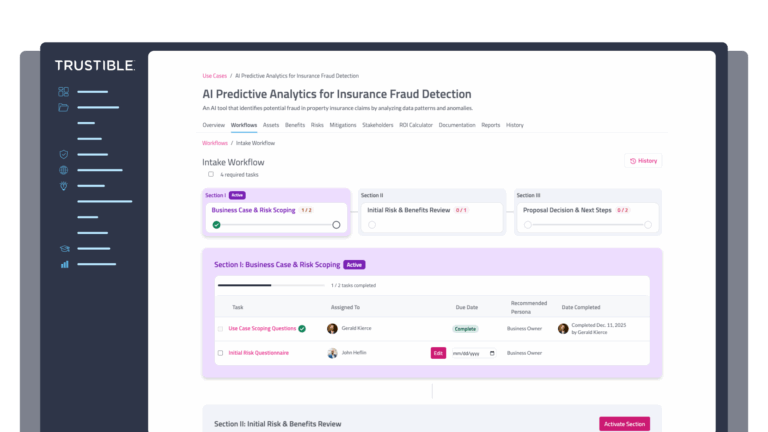

- Task groups that enforce a logical, sequential workflow, ensuring stakeholders act at the right time, with the right context, and without receiving premature notifications.

- A simplified contributor experience that shows each user only what they need to complete their tasks, preventing overload and reducing friction while also enforcing access control so users see only data relevant to their roles.

- A structured departments system that improves reporting, ownership clarity, and visibility across business units.

- Redesigned vendor assessment and use case intake workflows that support internal and external participation while making risk scoring more transparent and repeatable.

- Identity and access improvements, including better user management, contributor invitations, refined permissions, SSO & SAML 2.0 support, and branded organizational settings.

The outcome:

- Cross-functional task completion times improved by 35–50% once task groups were enforced and contributors only received notifications when work was actually ready.

- Contributor confusion and rework decreased by up to 70% after role-specific views limited users to the tasks and context they needed.

- Vendor assessments that previously spanned multiple weeks were completed faster under the redesigned workflow and clearer scoring categories.

- Governance programs onboarded new business units twice as fast using departments and more structured ownership metadata.

3. Automation

The challenge: Reduce processing, increase evaluation.

Many teams, even with strong governance, struggle under the operational burden. Intake feels repetitive. Documentation requires repeatedly chasing down the same details. Vendor reviews mean sifting through long questionnaires. Periodic reviews get lost in calendars. And governance teams spend most of their time processing information rather than evaluating it. Customers repeatedly told us: “We spend more time handling the mechanics of governance than doing governance itself.”

But there is a path forward. Governance teams spend a disproportionate share of their time (approximately 80 percent) on use cases that are familiar, predictable, or low-risk. These need oversight, but not bespoke analysis. Meanwhile, novel or complex cases that require deeper governance are deprioritized and delayed by competing priorities and the pull of quick wins.

One customer offered an apt metaphor: "Governance should be a conveyor belt. The system should pick up everything, sort what's familiar, and only hand the novel or high-risk items to us."

Trustible classifies familiar patterns, pre-populates known attributes, applies standard mitigations, and elevates the use cases that truly require human review. The result is the conveyor belt in practice: cycle times shrink, consistency increases, and governance becomes sustainable at enterprise scale.

How we addressed the challenge:

To reduce manual workload and help teams focus on what matters, we introduced or expanded:

- A more intelligent intake system that allows configurable fields, adds helpful guidance, and automatically completes tasks when all required details are already supplied.

- Review workflows that automatically recommend when a use case should be revisited, tied directly to the use case’s risk level.

- Workflow enhancements that reduce context switching, including automatic redirection to the next assigned task and consolidated risk and benefit assignment.

- AI Analyzer for AI-assisted document review, supporting both curated and custom question sets, multi-document analysis, and exportable reporting.

- Bulk upload capabilities and expanded APIs that allow organizations to automate intake, documentation, workflow creation, and system integration.

The outcome:

- 30–50% drop in intake cycle times thanks to auto-complete logic, next-task routing, and clearer guided documentation steps.

- 60–80% reduction in time spent on analysis of vendor compliance policies and Terms of Service thanks to AI Analyzer accelerating document review.

- Governance teams spent about half as much time on manual triage due to Trustible’s expert-driven automated risk scoring, surfacing only novel or high-risk items.

- Bulk import and API-connected workflows cut inventory setup time from months to days or weeks, depending on program size.

4. Change Management

The challenge: Surface and quickly respond to change.

Most organizations focus early governance efforts on intake. They build inventories, assess initial risks, publish baseline documentation, and establish review processes. But systems and processes change over time, and policies may stop fitting business requirements. Regulations, along with the frameworks designed to help standardize governance work, are also revised regularly. When the ground shifts beneath you, you need to be prepared to respond.

"Year One governance" focuses on questions like these:

- What do we have?

- What risks exist?

- What regulations and standards do we need to comply with?

- What documentation is needed?

- What processes should we follow?

“Year Two governance” introduces a different set of questions:

- What changed?

- What drifted?

- What fell out of compliance?

- What needs re-evaluation?

- What’s new or emerging?

Clearly, organizations need governance systems that adapt in real time, not just at intake.

How we addressed the challenge:

To support this next stage of governance maturity, we strengthened the platform’s flexibility, clarity, and adaptability. Updates across the platform include:

- Clearer task guidance, conditional logic, and improved workflow design that help contributors provide accurate information and stay aligned over time.

- Updated risk taxonomies and scoring rules that reflect evolving standards and more nuanced real-world risks.

- Better visibility into review needs, status changes, and inventory health, ensuring teams don’t lose track of required updates.

- Improvements to navigation, session management, communication, and user interfaces to support broader organizational adoption and sustained engagement.

- A Use Case History view that provides a record of changes, supporting transparency and audit readiness.

The outcome:

- Ongoing review compliance increased by 2–3x once automated review workflows and inventory indicators were enabled.

- Drift, scope changes, and compliance issues were detected 50% earlier due to structured review cycles and clearer signals in dashboards and reports.

- Governance updates, such as taxonomy changes, new fields, or modified workflows, were rolled out 30–40% faster with fewer interruptions to teams.

- Organizations reported a 40–60% reduction in contributor support requests after improvements to guidance, workflow clarity, and task design.

What’s Next: Year Two & Agentic AI Governance

Across industries, organizations are reaching the end of their first major AI governance milestone: they’ve built inventories, established intake processes, and created foundational governance frameworks.

Year Two governance is about continuity. It’s understanding when models shift, when use cases expand beyond their intended scope, when vendors introduce new terms or capabilities, and when internal compliance begins to drift. It’s about embedding governance into the ongoing lifecycle of AI systems, not just at the point of creation.

At the same time, agentic systems are reshaping the enterprise AI landscape, introducing new classes of use cases, risks, and mitigations. As nearly every sector accelerates AI adoption, the "where" and "how" of governance are changing, too. In an environment defined by technology consolidation, platform sprawl, and the high cost of change management within large enterprises, teams need governance that fits seamlessly into existing tools and processes while still providing the expertise and intelligence required to scale safely.

Broader organizational adoption of AI governance means governance teams often spend critical time educating stakeholders who may not yet have the depth of knowledge, expertise, or vocabulary to clearly articulate how, why, or where risks may arise from a use case or vendor.

To meet those needs, we’ll soon introduce our vision for agentic AI governance: an orchestration layer that actively connects systems, people, processes, and signals across the AI lifecycle.

Instead of requiring governance teams to manually monitor every update, Trustible will focus attention on the signals that matter: detecting drift, highlighting non-compliance, suggesting interventions, escalating emerging risks, flagging regulatory changes and inventory exposure, and automating actions when appropriate. These insights will surface across the tools and workflows where teams already operate.

We'll also enable new ways to conduct AI governance: from anywhere in your risk stack, to anywhere in your tech stack, powered by Trustible's intelligence and expertise. And to ease the burden of educating stakeholders, a new capability will enable them to clearly define their AI use cases with a single click.

This next chapter builds on the foundation we established this year: deeper intelligence, clearer collaboration, smarter automation, and resilient change management. Together, these capabilities create the conditions for a new kind of governance, one suited to a world where AI is more autonomous, more embedded, and more central to enterprise operations.

And we’re just getting started.