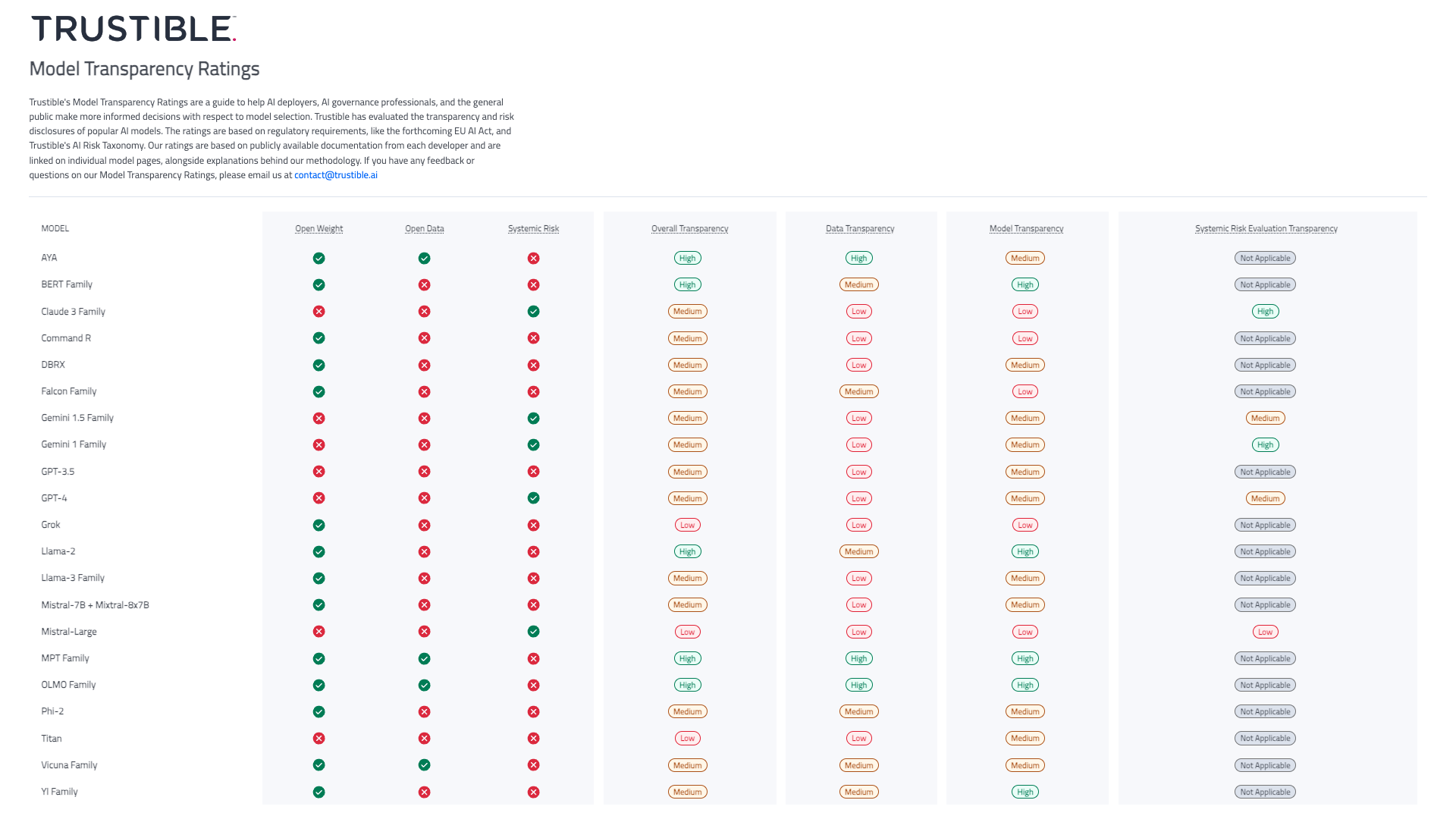

Trustible evaluates the transparency disclosures of 21 top LLMs to equip organizational leaders with key insights for AI model selection.

WASHINGTON, April 23, 2024 /PRNewswire/ -- Organizational leaders want to better understand which AI models are the best fit for a given use case. However, limited public transparency about these systems makes this evaluation difficult.

In response to the rapid development and deployment of general-purpose AI (GPAI) models, Trustible is proud to introduce its research on Model Transparency Ratings – offering a comprehensive assessment of transparency disclosures of the top 21 Large Language Models (LLMs).

The results from Trustible’s initial ratings reveal significant insights:

- As of the publication date, most models would not comply with the EU AI Act GPAI transparency requirements.

- Data transparency continues to be a significant challenge, particularly with newer models.

- Open-source models generally exhibit greater transparency, with all such models scoring at least ‘Medium’ on overall transparency. Examples include Olmo, Aya, MPT, and Llama-2.

- Proprietary models, particularly those accessed through APIs or cloud deployments, typically provide less clear documentation, which makes comprehensive risk assessment harder. Examples include GPT-4, Claude 3, Mistral-Large, and Gemini 1.5.

These results underscore the urgent need for enhanced disclosure of training data sources and methodologies, which is essential for accurately assessing an AI model’s suitability for specific use cases. Trustible’s platform not only simplifies this evaluation process but also supports ongoing compliance as regulatory landscapes evolve.

The model ratings are available to the public via this website. To read the full methodology and evaluation criteria, please follow this link.

Selecting the appropriate LLM for a given use case is a critical decision that carries both risk considerations and regulatory implications. Trustible’s Model Transparency Ratings assess these GPAI LLM families based on public documentation from sources such as developers’ websites, GitHub, and Hugging Face. Each model is evaluated across 30 distinct criteria aligned with the EU AI Act GPAI transparency requirements and is assigned overall scores. The ratings – inspired by other rating systems such as financial credit ratings and ESG scores – offer an accessible format that helps organizational AI leaders make informed, responsible model selection decisions that account for both ethical and legal risks.

“With these ratings, we’re providing a lens through which organizational leaders can view potential risks and make decisions that align with both ethical standards and regulatory expectations,” stated Gerald Kierce, Co-Founder & CEO of Trustible. “Our goal at Trustible has always been to translate complex technical documentation into actionable business and risk insights.”

The new Model Transparency Ratings have also been directly integrated into the Trustible platform with additional functionality for customers.

Trustible commits to regularly updating its ratings to reflect changes to both the models and the regulatory landscape. The company also plans to expand its evaluations to cover additional model variants, risk categories, and other types of AI technologies.

About Trustible

Trustible is a leading technology provider of responsible AI governance. Its software platform enables legal/compliance and AI/ML teams to scale their AI Governance programs to help build trust, manage risk, and comply with regulations.

Media Contact

Gerald Kierce