The rapid evolution and adoption of AI require organizations to adopt effective AI governance structures. An organization’s policies and procedures for responsible AI development and deployment are crucial for mitigating risks and ensuring ethical outcomes. A key aspect of AI governance is soliciting the appropriate level of feedback from the relevant parties that interact with, manage, or are affected by an organization’s AI systems, also known as “AI stakeholders.” Identifying these stakeholders is a necessary step for organizations conducting impact assessments for their high-risk systems, as required under certain laws like the EU AI Act. Additionally, guidance like the NIST AI RMF highlights the importance of engaging stakeholders to provide crucial feedback on how AI systems operate under real-world conditions.

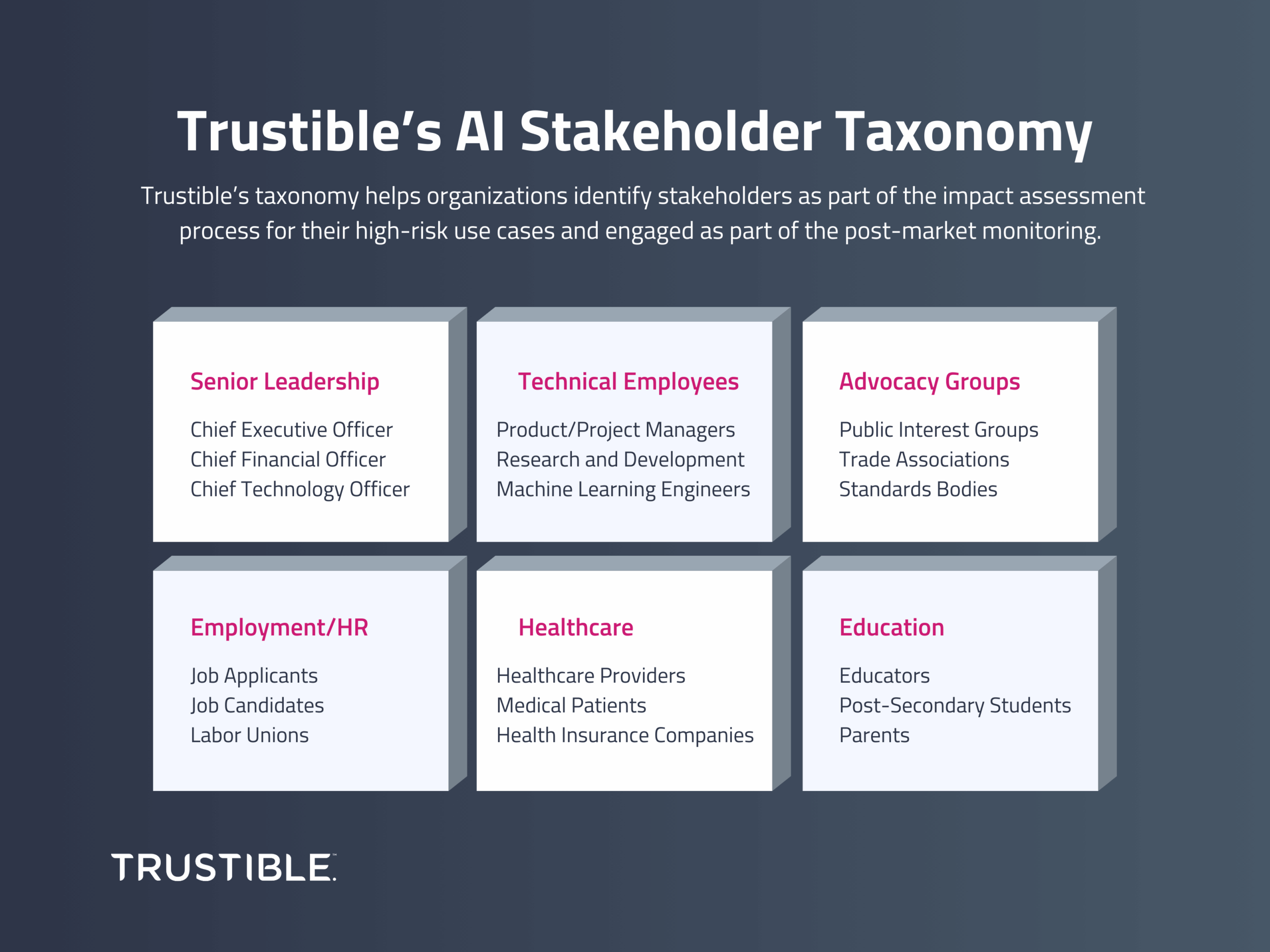

Despite a growing body of guidance and frameworks to help organizations manage their AI, it is not entirely clear how organizations can identify the relevant stakeholders or articulate their significance. To help with this challenge, Trustible developed an AI Stakeholder Taxonomy that organizations can use to identify stakeholders during the impact assessment process for their high-risk use cases, and to track the stakeholders who should be engaged as part of post-market monitoring. The taxonomy also helps organizations identify the internal stakeholders who mitigate impacts on affected stakeholders. Our guidance below is an initial framework for understanding who AI stakeholders are, why they matter to the organization, and how to identify and engage them effectively.

Who are AI stakeholders?

In our Applied Ethical Framework White Paper, we looked at three categories of stakeholders: People, Organizations, and Society. Generally, we understand those categories to mean the following:

- People: These are individuals affected by an AI system, which includes both users of the AI system and those who are the ‘target’ or ‘subject’ of its operations. Regardless of their interaction with the AI system, individuals may experience benefits or risks associated with its use.

- Organizations: These are formal organizations (e.g., corporations, government agencies, non-profits) that may develop, deploy, or otherwise be affected by an AI system.

- Society: Society represents large groups of people, communities, or even societal norms, collective well-being, and the overall social fabric. AI systems can influence society positively or negatively, shaping social dynamics, cultural values, and public policies without direct individual engagement.

However, each of those broad categories contains specific groups or communities that are relevant to the development and deployment of AI systems. For instance, people-specific stakeholders may include job applicants, renters, or students. Organization stakeholders may include C-suite executives, regulators, or trade associations. Society stakeholders could include protected classes of people or vulnerable populations, such as minors. Conversations to identify stakeholders should start from the broad categories and then narrow to specific stakeholders based on the organization’s industry and AI usage.

Why are AI stakeholders important to organizations?

Organizations should understand why stakeholders are important to their overall AI governance and risk management process. Frameworks and regulations that are relevant to AI stakeholders include:

- OECD: Principle 1.5 on accountability suggests engaging with stakeholders throughout an AI system’s life cycle to address and mitigate risks.

- Hiroshima Process: As part of identifying and mitigating incidents, organizations should share and collaborate with diverse sets of stakeholders.

- ISO/IEC 42001: Under Section 6, organizations should conduct an impact assessment to determine the potential consequences of an AI system, including its foreseeable misuse, for individuals or groups of individuals.

- EU AI Act: Article 27 requires high-risk AI system deployers to conduct an impact assessment that includes identifying specific individuals or groups that may be affected by the systems.

- Colorado’s SB 205: High-risk AI system deployers are required to complete an impact assessment, which involves identifying individuals that may be harmed by the systems.

- NIST AI RMF: Under Govern 4.2, organizations should document the risks and impacts of AI systems, which includes engaging stakeholders through an impact assessment.

However, the existing body of rules leaves much of the stakeholder identification process to the complying organization.

How do organizations identify specific AI stakeholders?

The lack of regulatory guidance raises questions about how to define the scope of stakeholders impacted by an organization’s AI systems. The Trustible AI Stakeholder taxonomy is intended to address this concern by providing overviews of stakeholder groups and the risks commonly associated with them. We drew on research into which roles and groups were most appropriate to include, and we used common AI use cases and high-risk systems as a basis for mapping impacted communities.

To use the stakeholder taxonomy effectively, organizations should first identify the universe of deployed AI systems, including both external and internal systems. An organization’s internal AI systems can have far-reaching impacts and should be treated as in scope when appropriate. Once the scope of AI systems is determined, the organization should triage the systems based on their risk levels. While AI systems generally will have impacted stakeholders, an organization should prioritize those systems that pose a higher risk of harm.
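As a rough illustration of the inventory-and-triage step described above, the sketch below lists a few AI systems and orders them by risk level. The system names, the three-tier `RiskLevel` scale, and the `triage` helper are hypothetical and are not part of the Trustible taxonomy; an organization would substitute its own inventory and risk scale.

```python
from dataclasses import dataclass
from enum import IntEnum


class RiskLevel(IntEnum):
    # Hypothetical tiers for illustration; replace with your own risk scale.
    LOW = 1
    MEDIUM = 2
    HIGH = 3


@dataclass(frozen=True)
class AISystem:
    name: str
    internal: bool  # True for internal-facing systems, False for external-facing
    risk: RiskLevel


def triage(inventory: list[AISystem]) -> list[AISystem]:
    """Return every system in the inventory, highest risk first."""
    return sorted(inventory, key=lambda system: system.risk, reverse=True)


# Assumed example inventory covering both internal and external systems.
inventory = [
    AISystem("support-chatbot", internal=False, risk=RiskLevel.MEDIUM),
    AISystem("resume-screener", internal=True, risk=RiskLevel.HIGH),
    AISystem("meeting-summarizer", internal=True, risk=RiskLevel.LOW),
]

for system in triage(inventory):
    scope = "internal" if system.internal else "external"
    print(f"{system.risk.name:<6} {scope:<8} {system.name}")
```

Note that triage here only reorders the inventory rather than dropping lower-risk systems, since those systems generally still have impacted stakeholders.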

Once the organization establishes the scope of AI systems to assess, it is important to determine the context in which each system will be deployed and its purpose. Each system may have a unique deployment context and purpose that affects who is impacted. Documenting these two features of an AI system provides the basis for identifying the impacted stakeholders. When assessing the stakeholders for each system, organizations should consider stakeholders who are internal to the organization (e.g., senior leaders, technical employees, and non-technical employees) as well as those who are external. Organizations should also distinguish between impacted stakeholders (i.e., those who are affected by the consequences of the AI system) and impact-mitigating stakeholders (i.e., those who manage and mitigate the impacts of AI systems).
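One way to picture the resulting record is sketched below: each assessment documents a system's deployment context and purpose, then tags every stakeholder as internal or external and as impacted or impact-mitigating. The class names, field names, and the example entries (including which category each stakeholder falls under) are assumptions made for illustration, not Trustible's actual schema.

```python
from dataclasses import dataclass, field
from enum import Enum


class Category(Enum):
    PEOPLE = "people"
    ORGANIZATIONS = "organizations"
    SOCIETY = "society"


class Role(Enum):
    IMPACTED = "impacted"             # affected by the system's consequences
    MITIGATING = "impact-mitigating"  # manages and mitigates those impacts


@dataclass(frozen=True)
class Stakeholder:
    name: str
    category: Category
    internal: bool  # internal to the organization?
    role: Role


@dataclass
class SystemAssessment:
    system: str
    deployment_context: str
    purpose: str
    stakeholders: list[Stakeholder] = field(default_factory=list)

    def by_role(self, role: Role) -> list[Stakeholder]:
        """Stakeholders holding the given role for this system."""
        return [s for s in self.stakeholders if s.role is role]

    def external(self) -> list[Stakeholder]:
        """Stakeholders outside the organization."""
        return [s for s in self.stakeholders if not s.internal]


# Hypothetical assessment of a hiring tool; the entries are illustrative only.
assessment = SystemAssessment(
    system="resume-screener",
    deployment_context="Screening applications for open roles",
    purpose="Rank applicants for recruiter review",
    stakeholders=[
        Stakeholder("job applicants", Category.PEOPLE, False, Role.IMPACTED),
        Stakeholder("technical employees", Category.PEOPLE, True, Role.MITIGATING),
        Stakeholder("senior leaders", Category.ORGANIZATIONS, True, Role.MITIGATING),
    ],
)

print([s.name for s in assessment.by_role(Role.IMPACTED)])
print([s.name for s in assessment.external()])
```

Keeping the internal/external flag separate from the impacted/mitigating role lets the same person or group be recorded accurately when, for example, internal employees are themselves affected by a system.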

How can organizations engage with AI stakeholders?

Beyond identifying stakeholders, organizations must also think about how to engage with them. This is especially important for stakeholders who are external to the organization. The form that engagement takes will vary depending on the resources available, as well as the scale and size of the organization.

To learn more about Trustible’s AI Stakeholder taxonomy and our Responsible AI Governance Platform, click here.