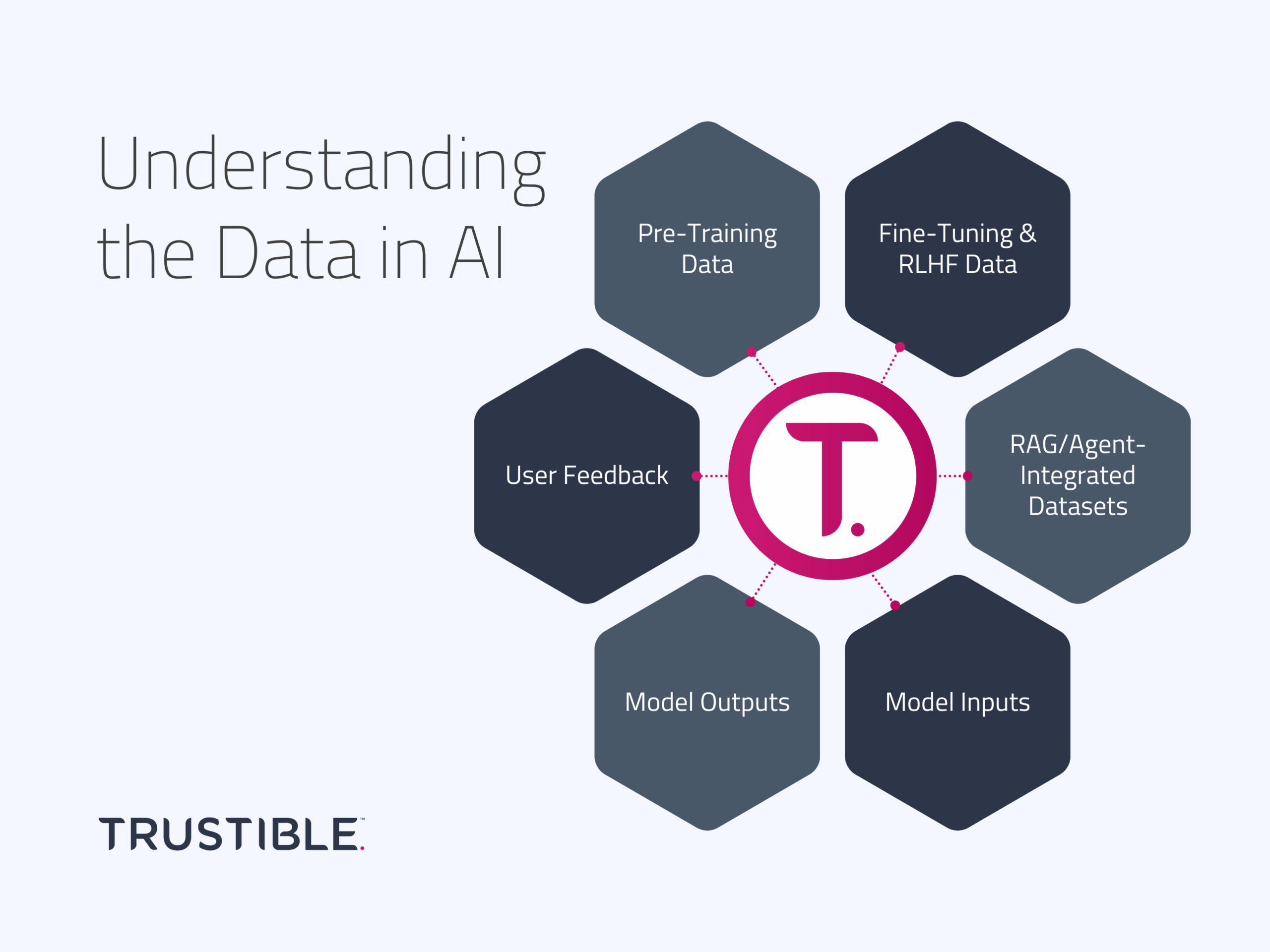

Data governance is a key component of responsible AI governance, and it features prominently in every emerging AI regulations and standards. However, “data” is not a monolithic concept within AI systems. From the massive datasets collected for training large language models (LLMs), to user feedback loops that refine and improve outputs, multiple “data streams” flow through any modern AI application.

Many organizations assume that if they rely primarily on third-party AI models, they may be exempt from extensive data governance responsibilities. However, even model deployers often must comply with data-related obligations—especially as AI regulations around transparency, privacy, and intellectual property continue to evolve.

In this blog post, we’ll explore six key data categories that are relevant to AI systems:

- Pre-Training Data

- Fine-Tuning & RLHF Data

- RAG/Agent-Integrated Datasets

- Model Inputs

- Model Outputs

- User Feedback

We will outline the top governance concerns and key governance activities for each of these categories. Finally, we’ll conclude with how Trustible’s Responsible AI Governance Platform can support your organization in managing these challenges.

1. Model Pre-Training Datasets

Description

Model pre-training data is commonly what people think of when discussing AI “data.” It’s the broad corpus used to train an initial machine learning or large language models—often gathered through large-scale web scraping, internal data, licensing agreements with data brokers or publishers, or a mix of all three. Traditional classification systems often rely on structured and labeled data, while LLMs tend to leverage massive, unstructured datasets from publicly available sources.

Governance Concerns

- Quality & Scope: Poor-quality pre-training data can lead to erratic model behavior. Overly broad web scraping may yield noisy or biased data, while narrow or outdated datasets can limit a model’s applicability in certain domains (e.g., finance, healthcare, or law).

- Privacy & Data Leaks: Unintentionally including personal information can lead to privacy risks. Sensitive user data can be difficult to detect and scrub from a large pre-training corpus, leaving the model vulnerable to data extraction attacks.

- Intellectual Property: Incorporating copyrighted material (intentionally or unintentionally) can lead to legal complications, especially as courts and regulators become increasingly attentive to AI’s use of protected content.

Governance Activities

- Evaluate Documentation: Under regulations like the EU AI Act and California’s upcoming Training Data Transparency Law, model developers must disclose details about dataset sources, collection methods, and quality-assurance practices. Organizations should request and review these “Datasheets” (or equivalent disclosures) from third-party model providers to ensure alignment with their risk posture.

- Assess Fit-for-Purpose: If you are using a pre-trained model from a vendor, evaluate whether its data collection period, sources, and content coverage are suitable for your domain.

- Monitor IP Risks: Stay abreast of evolving legal precedents around AI and intellectual property. Consider the potential for downstream copyright claims if your chosen model relied on questionable training data.

2. Fine-Tuning & RLHF Data

Description

Fine-tuning and Reinforcement Learning from Human Feedback (RLHF) data are used to adapt or improve a pre-trained model to a specific domain or task. This stage often involves introducing specialized training sets, annotated guidelines, or human feedback loops to refine model outputs. This data cannot be easily collected from the web, but rather is often generated through human crowdsourcing.

Governance Concerns

- Safety & Quality: Fine-tuning is where many safety guardrails and domain-specific rules are integrated. If the fine-tuned data is of poor quality or biased, it can undermine or negate the original model’s safeguards.

- Ethical Sourcing of Feedback: RLHF often relies on human-annotated datasets. There are questions about working conditions and fair compensation if large-scale annotation tasks are outsourced.

- Proprietary or Sensitive Data Usage: Incorporating confidential corporate data or personal information into the fine-tuning stage can create data leakage risks or violate internal data-handling policies.

Governance Activities

- Data Quality & Legal Use: Verify that any proprietary or sensitive data used in fine-tuning can lawfully be used to develop AI models. Evaluate data cleanliness and relevance.

- Ethical Oversight: When using human-annotated data, ensure that labor practices meet organizational and societal ethical standards. Review the potential ethical impact of tasks assigned to data labelers.

- Validation & Benchmarking: Create benchmarks or technical evaluations to gauge how fine-tuning impacts model performance, bias, or safety guardrails.

- Access Controls: Restrict who can view or manipulate the data (and resulting models). Use robust authentication and authorization protocols.

3. RAG/Agent-Integrated Datasets

Description

Retrieval-Augmented Generation (RAG) and AI agents often rely on external knowledge bases or vector databases to retrieve context, enabling more accurate or domain-specific responses. These external data repositories, which may include proprietary documents or other structured/unstructured data sources, are critical for specialized AI applications (e.g., customer service bots or internal knowledge management tools).

Governance Concerns

- Data Quality & Completeness: If the vector database or knowledge base is outdated, inaccurate, or incomplete, the AI system’s outputs will reflect these deficiencies.

- Data Privacy: Storing sensitive internal documents or user data in a vector database can introduce privacy and security risks.

- IP & Licensing: Organizations need to ensure they have the rights to store and use the content in these repositories for AI model reference.

- Traceability & Compliance: Many regulations require traceability of data usage and changes. Auditing how data enters, updates, or exits a vector database can be non-trivial.

Governance Activities

- Regular Data Audits: Periodically verify the accuracy, relevance, and licensing status of content in the vector database.

- Access & Retention Policies: Implement strict access controls, and define retention and deletion policies that comply with data protection regulations.

- Metadata & Provenance Tracking: Document the source and ownership status of each data entry. This is crucial for compliance and potential audits.

- Quality Assurance: Employ versioning and monitoring to ensure stale or corrupted data is removed or updated promptly.

4. Model Inputs

Description

Model inputs encompass all the user-provided prompts, queries, or other data that feed into your AI system in real time. These inputs might include chat messages, uploaded documents, or structured form data. This dataset also contains all the associated metadata with incoming requests, which may include user ids, IP addresses, and the user’s past chat histories or personalized context information.

Governance Concerns

- Privacy: Users may inadvertently provide personal identifiers, confidential business information, or other sensitive data. Storing or processing these inputs without adequate controls can pose severe privacy risks.

- Security: Many AI systems are highly vulnerable to security exploits that can be delivered directly through the model’s inputs, such as prompt injections. These can be difficult to detect automatically, and may be dangerous to use as further training examples if they aren’t identified as malicious.

- Unintentional Training: Inputs can sometimes leak into future model iterations if the system is continuously learning. This could expose sensitive user data in subsequent outputs.

- Regulatory Compliance: Certain jurisdictions require consent or disclaimers before collecting or processing user data with AI tools.

Governance Activities

- Data Classification & Consent: Clearly label input data according to sensitivity (e.g., PII vs. non-PII) and secure user consent where needed.

- Retention & Deletion Policies: Determine how long user inputs are stored. Ensure you have processes to securely delete data upon request or after a set period.

- Policy Disclosures: Be transparent with users about how their data might be used or retained, especially if data could be leveraged for model improvement.

5. Model Outputs

Description

Model outputs include everything a model generates in response to user prompts/queries—from simple text responses to complex visual or multimodal content. These outputs may also be stored, audited, or further processed by downstream systems.

Governance Concerns

- Accuracy & Misuse: Inaccurate, misleading, or harmful outputs can pose reputational and compliance risks. Organizations are increasingly expected to manage hallucinations or bias in AI-generated content.

- Copyright & IP: Generated text may inadvertently contain copyrighted material from the training data. This risk is heightened when the AI system’s outputs are used commercially or shared publicly.

- Content Moderation: Outputs can sometimes be offensive, discriminatory, or otherwise inappropriate, creating potential legal and ethical liabilities.

Governance Activities

- Output Monitoring & Filtering: Implement real-time or retrospective checks to identify harmful, illegal, or high-risk content.

- Transparency Measures: Label AI-generated outputs as such, especially under regulations that require disclosure (e.g., the EU’s proposed AI labeling requirements).

- Compliance Policies: Maintain policies around who can access, archive, or repurpose model outputs. Create an audit trail for high-risk or regulated use cases.

6. User Feedback

Description

User feedback includes error incident reports, model overrides, corrections, or any other signals users provide to help refine or improve the system. Feedback can be used to adjust model parameters, guide fine-tuning, or inform future product updates.

Governance Concerns

- Privacy & Data Minimization: Feedback may inadvertently contain personal or sensitive details, so it should be handled with the same rigor as other user data.

- Bias Amplification: If user feedback primarily comes from a certain demographic or use case, it could inadvertently skew the model’s performance or ethical stance.

- Data Quality & Reliability: Not all feedback is equally valid or representative. Low-quality or maliciously misleading feedback can degrade model performance.

Governance Activities

- Feedback Workflow: Establish clear processes to review and categorize user feedback. Decide which inputs should directly influence the model and which require human oversight.

- Anonymization & Aggregation: Aggregate feedback to protect individual privacy while still learning from overall user trends.

- Ongoing Ethical Review: Evaluate if feedback channels and policies promote fairness, inclusivity, and respect for user rights.

How Trustible Helps

Trustible’s Responsible AI Governance software platform empowers organizations to comprehensively manage the data and governance challenges outlined above. Our platform provides:

- AI Data DocumentationDocument data documentation used in your AI use cases—pre-training, fine-tuning, RAG/agent sources, model inputs, outputs, and user feedback—in one centralized system.

- Regulatory & Policy ManagementStay ahead of rapidly evolving AI regulations. Trustible’s platform includes built-in compliance frameworks (e.g., EU AI Act, emerging U.S. state laws), enabling you to map governance activities to specific legal or policy requirements.

- Risk Assessment & MitigationUse Trustible’s risk management tools to identify, score, and mitigate potential data governance risks.

- Continuous Review & ReportingCreate continuous review cadences and reports to regularly review governance activities, model performance, data attributes, and compliance..

Conclusion

Now that we’ve explored each of the six AI data categories—from massive pre-training datasets to everyday user feedback—you should have a clearer view of the many moving parts that go into building and maintaining an AI system. Each category comes with its own set of governance challenges, whether it’s ensuring privacy, upholding intellectual property rights, or keeping bias in check. But addressing these issues head-on across the entire AI data lifecycle can help you reduce risk, improve system performance, and boost trust.

Ready to learn more about how we can support your AI governance journey? Get in touch with us.