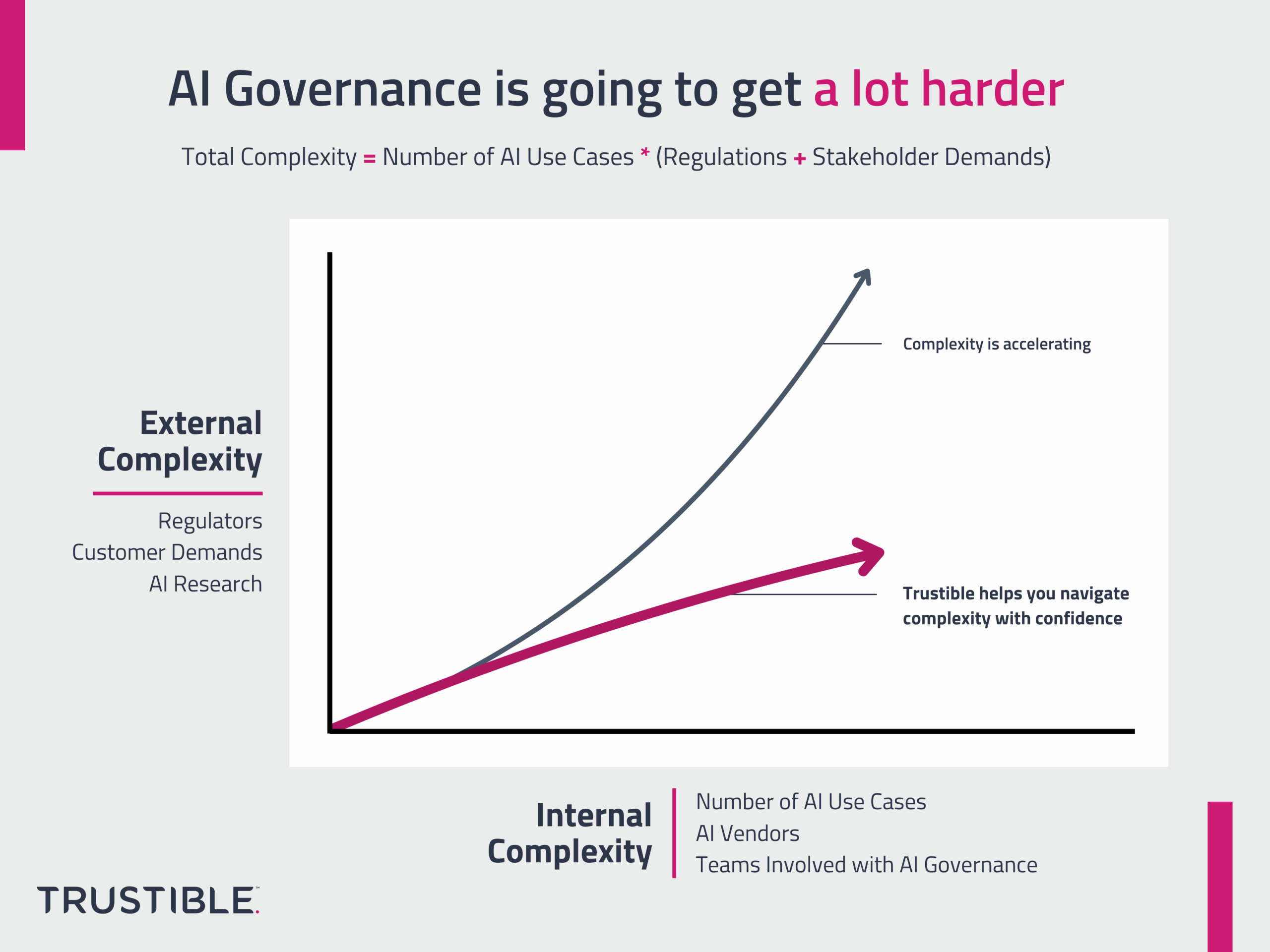

AI governance is hard: it requires collaboration across multiple teams and an understanding of a highly complex technology and its supply chains. It’s about to get even harder.

The complexity of AI governance is growing along two dimensions at the same time, and both are poised to accelerate in the coming months. One dimension is the internal complexity an organization faces with its AI systems, as measured by the number of AI models, tools, and use cases it has deployed. The other is the external complexity it faces, which is proportional to the demands that customers, regulators, and the public make about its AI systems.

Internal Complexity

The internal complexity of AI governance is proportional to the number of internal AI systems that need to be governed. This includes every AI model developed or fine-tuned internally for commercial purposes as part of products and services, as well as all the AI tools purchased for internal use. The number of AI use cases within organizations is poised to grow now that world-class AI models have become remarkably accessible to businesses of all sizes.

Beyond the sheer number of systems deployed within an organization, each system grows in complexity over time. Models are regularly updated to account for model drift, to incorporate new data, or to take advantage of the newest model developments. However, each new model version introduces additional complexity, with requirements to document changes, re-evaluate the model's performance, and track multiple large ML artifacts. Furthermore, many regulations require documentation of all inputs to and outputs from an ML model, and the data this produces must itself be appropriately governed.
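As a rough illustration of the per-version obligations described above, the sketch below models one governance record per model version and reports which items are still missing. The class name, field names, and example values are hypothetical, chosen only for illustration; they are not taken from any regulation or product.

```python
from dataclasses import dataclass, field


@dataclass
class ModelVersionRecord:
    """Hypothetical governance record for one version of one model."""
    model_name: str
    version: str
    # What changed relative to the previous version.
    change_summary: str = ""
    # Results of re-evaluating this version, keyed by metric name.
    evaluation_metrics: dict[str, float] = field(default_factory=dict)
    # Locations of tracked artifacts (weights, datasets, configs).
    artifact_uris: list[str] = field(default_factory=list)


def missing_items(record: ModelVersionRecord) -> list[str]:
    """Return the names of governance items that are still empty."""
    gaps = []
    if not record.change_summary.strip():
        gaps.append("change_summary")
    if not record.evaluation_metrics:
        gaps.append("evaluation_metrics")
    if not record.artifact_uris:
        gaps.append("artifact_uris")
    return gaps


# Example: a new version with a change note but no evaluation or artifacts yet.
record = ModelVersionRecord(
    model_name="churn-model",
    version="2.1.0",
    change_summary="Retrained on newer data",
)
print(missing_items(record))  # ['evaluation_metrics', 'artifact_uris']
```

Every new version adds another record of this kind, which is why the documentation burden grows with both the number of systems and the number of updates to each one.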

The internal complexity for organizations is poised to grow rapidly as many existing vendors quickly introduce AI features into already procured systems, and as general-purpose AI models dramatically lower the barriers to entry for new AI applications. This creates an enormous internal surface area for organizations to oversee. At the same time, market pressures are pushing organizations to adopt AI at an aggressive pace, which further accelerates the growth of internal complexity. Furthermore, many employees have started using unapproved AI tools for their own workloads (‘Shadow AI’), which introduces legal, data privacy, and cybersecurity risks. AI governance, as we can see, is not just about the models.

Finally, the number of internal stakeholders who need to be involved in AI governance is increasing. AI is no longer the exclusive domain of AI/ML teams. Key decisions about AI systems will need further input from subject matter experts, business leaders, and customer-facing teams responding to customer inquiries about AI systems. Each of these teams may have valid reasons for getting more involved in the AI development process, but this inevitably leads to increased organizational friction. Similarly, a wider range of teams, with differing levels of technical aptitude, will start using AI tools, creating a wider surface area for potential AI challenges.

External Complexity

The external complexity of AI governance represents all of the uncontrollable external factors that are relevant to AI governance. This includes customers’ demands about AI products, the growing set of regulatory requirements, and the growing corpus of relevant AI risk research.

The first source of this complexity is customer demands about AI systems. Many businesses have started to incorporate AI governance concerns into their vendor due diligence processes. Customers may want assurance that an AI product is appropriate for their use, that it is reliable and safe, and that it will be governed responsibly. Even companies that do not directly sell AI products or services may face similar public reputational demands related to their use of AI; this has already manifested in the entertainment industry with the first labor strikes involving AI demands.

The second source is regulation. Many countries and regions around the world are enacting AI regulations, such as the EU AI Act. This regulatory environment is likely to be highly complex due to varying views on ethics, legal traditions, and government incentives. In addition to AI-focused regulations, many existing laws apply to AI-driven systems, and guidelines for compliance with those laws are likely to emerge in the coming years. Similarly, sector-specific or use-case-specific laws will likely continue to emerge as the risks of AI are better understood.

The third source is research. Staying on top of all the research about AI risks, harms, and benefits is a challenging task. AI governance research is a relatively new field, and essential information is produced on an almost daily basis. AI involves a complex supply chain of data providers, model developers, deployment platforms, and layers of fine-tuning. New vulnerabilities, exploits, or risks could be discovered in any layer at any time, and each one needs to be addressed.

All of these factors (customer, regulatory, and research) are poised to grow rapidly as companies learn what to ask in vendor diligence, new laws are passed to regulate AI, and the public learns more about the ethical implications of these systems. No amount of internal controls, policies, or processes can slow the growth of this external complexity, which represents an organizational risk.

Compounding Complexity

The key challenge with AI governance is that internal and external complexity multiply together. For every new AI product feature, there may be a growing set of regulatory requirements to satisfy. Similarly, for every newly discovered AI vulnerability, there may be dozens of affected internal tools that need to be updated. Teams may be well equipped to navigate the growing internal complexity, but the uncertain and rapid growth of external complexity is something many businesses are unprepared for.
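A small worked example makes the multiplication concrete. The sketch below counts the (system, requirement) pairs an organization would need to review, under the simplifying assumption that every external requirement applies to every internal system. That assumption gives an upper bound, and all of the numbers are hypothetical.

```python
def governance_workload(num_systems: int, num_requirements: int) -> int:
    """Count (system, requirement) pairs to review.

    Assumes, as an upper bound, that every external requirement
    applies to every internal AI system.
    """
    return num_systems * num_requirements


# Hypothetical starting point: 10 AI systems, 5 external requirements.
before = governance_workload(10, 5)

# Both dimensions double: 20 systems, 10 requirements.
after = governance_workload(20, 10)

print(before)          # 50
print(after)           # 200
print(after / before)  # 4.0
```

Doubling each dimension on its own would double the workload, but doubling both at once quadruples it. This is the sense in which the two kinds of complexity compound rather than simply add.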

How does Trustible help?

Trustible is fundamentally a software tool intended to help organizations scale their AI governance functions to account for rapidly growing external complexity. Trustible helps organizations document their internal AI use cases and products so they can respond accurately and efficiently to customer demands. Trustible directly incorporates the requirements of emerging AI regulations and frameworks and maps them to prior work, allowing a ‘one-to-many’ compliance effort. Trustible helps build public trust by facilitating the creation of public transparency documents and by verifying compliance with responsible AI frameworks such as NIST AI RMF or ISO 42001. Finally, Trustible provides real-time insights about AI governance, such as risk recommendations, mitigation strategies, and ratings of common AI models and vendors, to help organizations stay on top of a rapidly evolving ecosystem.