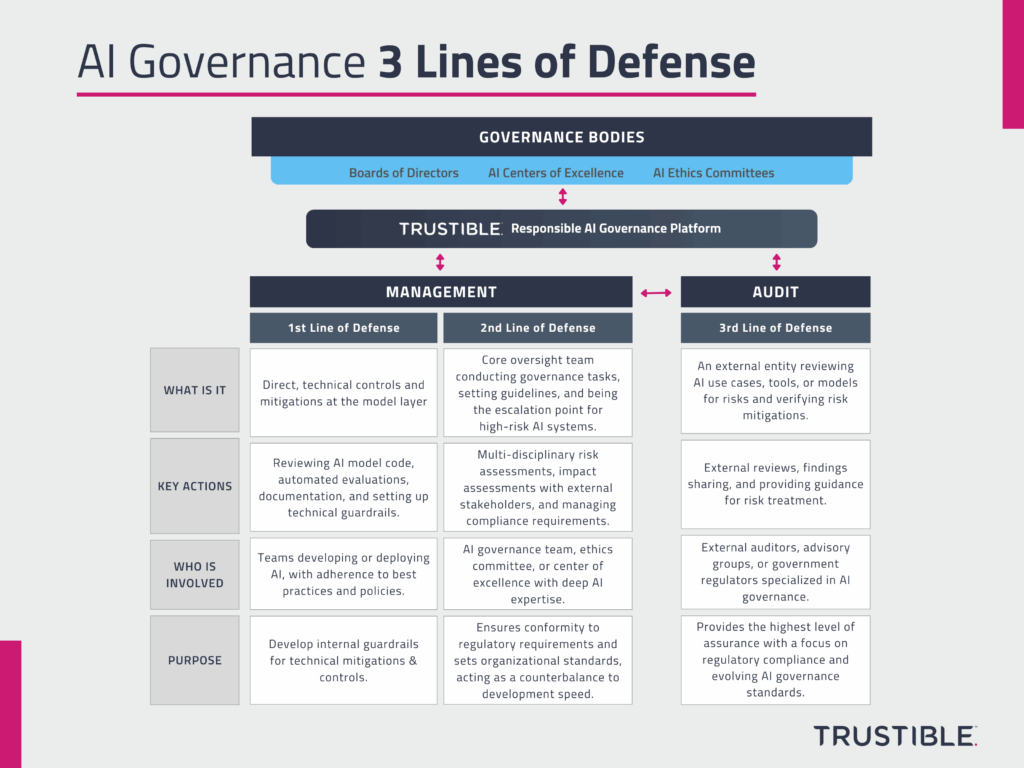

AI governance is complex. It involves multiple teams across an organization working to understand and evaluate the risks of dozens of AI use cases, while managing highly complex models with deep supply chains. On top of this organizational and technical complexity, AI can be used for a wide range of purposes, some of which are relatively safe (e.g., an email spam filter), while others pose serious risks (e.g., a medical recommendation system). Organizations want to use AI responsibly, but struggle to balance innovation and adoption for low-risk uses against oversight and risk management for high-risk uses. To manage this, organizations need a multi-tiered governance approach that lets development teams experiment easily and safely, with clear escalation points for riskier uses.