On July 24, 2025, the California Privacy Protection Agency (CPPA) voted unanimously to finalize rules under the California Consumer Privacy Act (CCPA), as amended by the California Privacy Rights Act. The new rules introduce significant obligations for businesses subject to the CCPA: they impose requirements on the use of automated decision-making technologies (ADMT) and mandate cybersecurity audits and risk assessments. The California Office of Administrative Law must review and approve the rules by approximately September 5, 2025. Once approved, they will take effect on a phased basis between 2027 and 2030.

3 Types of Risk & Impact Assessments, And When to Use Them

A common requirement in many AI standards, regulations, and best practices is to conduct risk and impact assessments to understand the potential ways AI could malfunction or be misused. By understanding the risks, organizations can prioritize and implement appropriate technical, organizational, and legal mitigation measures. While there are standards for these assessments, such as the […]

What the Trump Administration’s AI Action Plan Means for Enterprises

The Trump Administration released “Winning the AI Race: America’s AI Action Plan” (AI Action Plan) on July 23, 2025. The AI Action Plan was published in accordance with the January 2025 Executive Order “Removing Barriers to American Leadership in AI.” It proposes approximately 90 policy recommendations within three thematic pillars: Pillar I addresses […]

FAccT Finding: AI Takeaways from ACM FAccT 2025

Anastassia Kornilova is the Director of Machine Learning at Trustible. Anastassia translates research into actionable insights and uses AI to accelerate compliance with regulations. Her notable projects include the Trustible Model Ratings and the AI Policy Analyzer. Previously, she worked at Snorkel AI developing large-scale machine learning systems, and at FiscalNote developing NLP […]

Navigating The AI Regulatory Minefield: State And Local Themes From Recent Legislation

This article was originally published on Forbes. The complex regulatory landscape for artificial intelligence (AI) has become a pressing challenge for businesses. Governments are approaching AI through the same piecemeal lens as other emerging technologies such as autonomous vehicles, ride-sharing, and even data privacy. In the absence of a […]

Trustible Becomes Official Implementation Partner for the Databricks AI Governance Framework (DAGF)

Despite the explosive growth of AI, most enterprises remain unprepared to manage the very real risks that come with its adoption. While the opportunities are vast—from smarter products to more efficient operations—the path to realizing AI’s full potential is fraught with challenges around performance, cybersecurity, privacy, ethics, and legal compliance. Without a strong AI governance […]

Trustible’s Perspective: The AI Moratorium would have been bad for AI adoption

In the early hours of July 1, 2025, the Senate overwhelmingly voted to strip the proposed federal moratorium on state and local AI laws from the Republicans’ reconciliation bill. The moratorium went through several rewrites in an attempt to salvage it, but ultimately 99 Senators supported removing it from the final legislative package. While the political […]

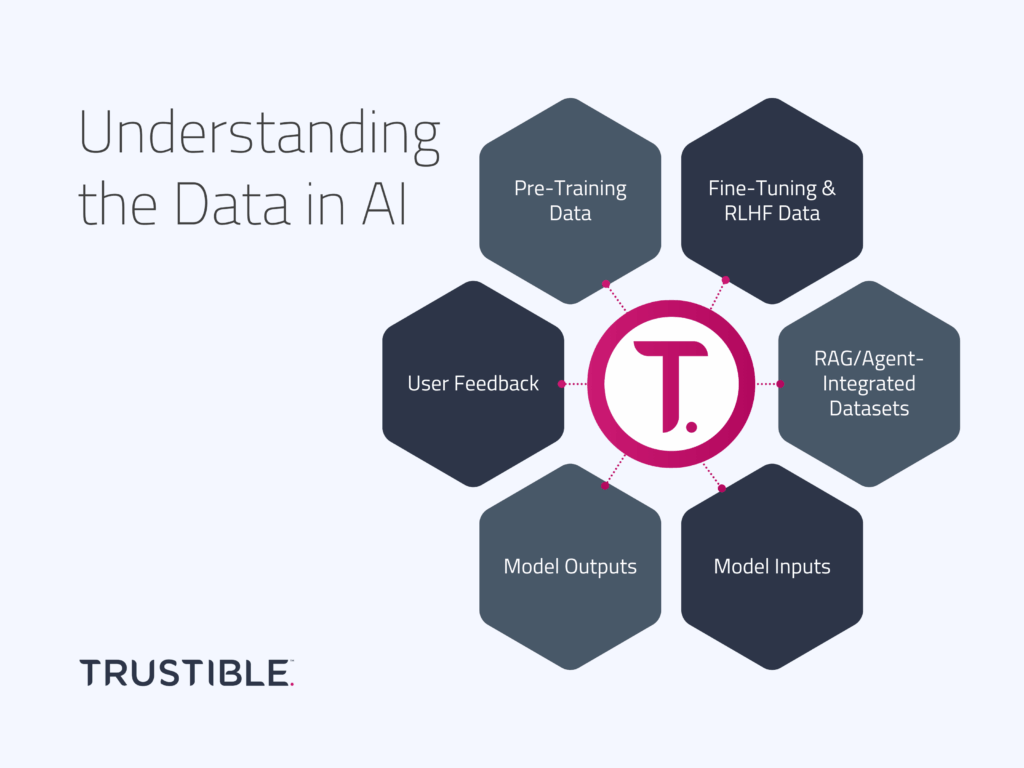

Understanding the Data in AI

Data governance is a key component of responsible AI governance, and it features prominently in every emerging AI regulation and standard. However, “data” is not a monolithic concept within AI systems. Multiple “data streams” flow through any modern AI application, from the massive datasets collected to train large language models (LLMs) to the user feedback loops that refine and improve outputs.

Navigating AI Vendor Risk: 10 Questions for Your Vendor Due Diligence Process

AI is everywhere, but the race to adopt AI from vendors has embedded unknown risks into your supply chain. Knowing what type of AI your suppliers use is difficult enough, let alone ensuring that your due diligence adequately addresses the unique risks it may pose. Yet customers and regulators are increasingly probing into […]

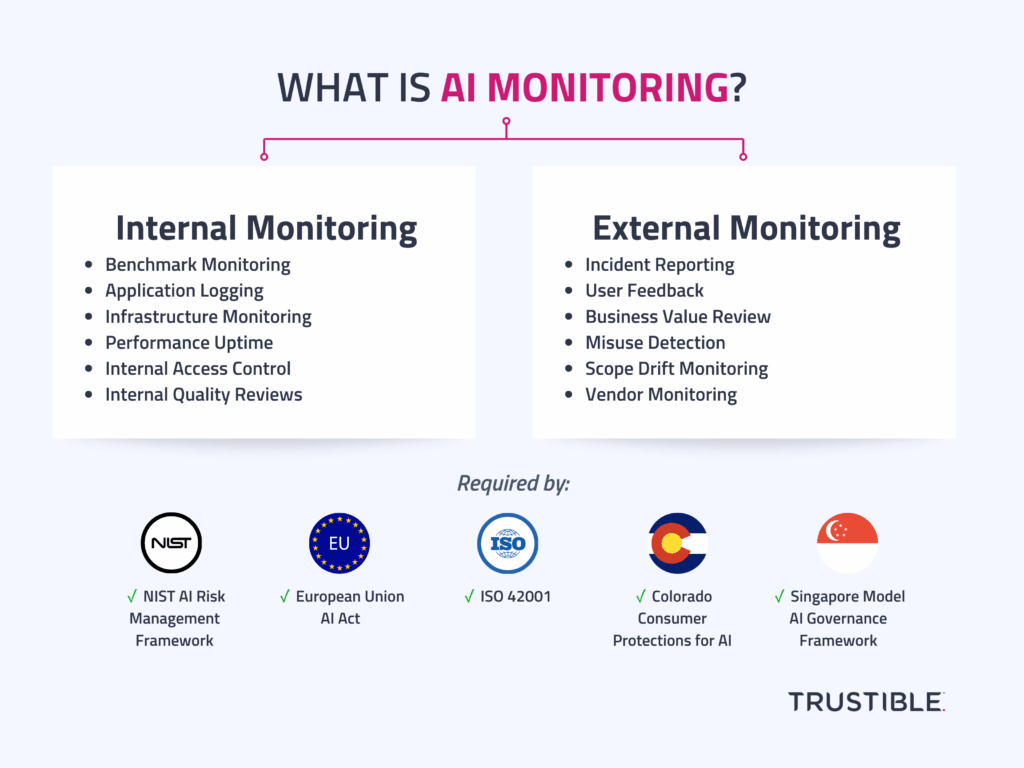

What is AI Monitoring?

When technical personas hear the term “monitoring,” they often think of internal monitoring of the AI system.