New alliance connects technology, services, and channels to embed governance across the AI lifecycle, accelerating safe adoption for organizations.

Today, I’m excited to announce the global launch of the Trustible Partner Program. This ecosystem is purpose-built to weave AI governance through every stage of the AI lifecycle. Our program unites technology alliances, system integrators, resellers, […]

What the Trump Administration’s AI Action Plan Means for Enterprises

The Trump Administration released “Winning the AI Race: America’s AI Action Plan” (AI Action Plan) on July 23, 2025. The AI Action Plan was published in accordance with the January 2025 Executive Order “Removing Barriers to American Leadership in Artificial Intelligence.” The AI Action Plan proposes approximately 90 policy recommendations within three thematic pillars: Pillar I addresses […]

FAccT Finding: AI Takeaways from ACM FAccT 2025

Anastassia Kornilova is the Director of Machine Learning at Trustible. Anastassia translates research into actionable insights and uses AI to accelerate compliance with regulations. Her notable projects include the Trustible Model Ratings and the AI Policy Analyzer. Previously, she worked at Snorkel AI, developing large-scale machine learning systems, and at FiscalNote, developing NLP […]

Trustible Becomes Official Implementation Partner for the Databricks AI Governance Framework (DAGF)

Despite the explosive growth of AI, most enterprises remain unprepared to manage the very real risks that come with its adoption. While the opportunities are vast—from smarter products to more efficient operations—the path to realizing AI’s full potential is fraught with challenges around performance, cybersecurity, privacy, ethics, and legal compliance. Without a strong AI governance […]

Trustible’s Perspective: The AI Moratorium would have been bad for AI adoption

In the early hours of July 1, 2025, the Senate voted overwhelmingly to strip the proposed federal moratorium on state and local AI laws from the Republicans’ reconciliation bill. The moratorium went through several rewrites in an attempt to salvage it, but ultimately 99 senators voted to remove it from the final legislative package. While the political […]

Introducing Trustible’s New US Insurance AI Framework: Simplifying AI Compliance for Insurers

At Trustible, we understand the challenges insurers face in navigating the evolving AI regulatory landscape, particularly at the state level in the U.S. That’s why we’re excited to introduce the Trustible US Insurance AI Framework, which streamlines compliance by synthesizing the latest insurance AI regulations into a single, comprehensive, easy-to-follow framework embedded in our platform.

Everything you need to know about the NY DFS Insurance Circular Letter No. 7

On July 11, 2024, the New York Department of Financial Services (NY DFS) released its final circular letter on the use of external consumer data and information sources (ECDIS), AI systems, and other predictive models in underwriting and pricing insurance policies and annuity contracts. A circular letter is not a regulation per se, but rather a formalized interpretation of existing laws and regulations by the NY DFS. The finalized guidance comes after the NY DFS sought input on its proposed circular letter, which was published in January 2024.

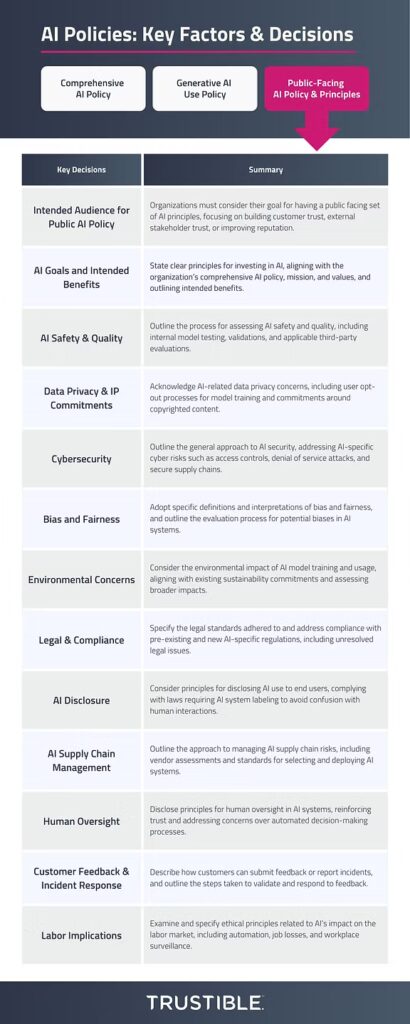

AI Policy Series 3: Drafting Your Public AI Principles Policy

In the final post of our AI Policy series (see our earlier guidance on the Comprehensive AI Policy and the AI Use Policy), we explore what organizations should disclose to the public about their use of AI. According to recent research by Pew, 52 percent of Americans feel more concerned than excited about AI. This suggests that while organizations may recognize the value of AI, their users and customers may remain skeptical. Policymakers and large AI companies have sought to address these public concerns, each in their own way.

AI Policy Series 2: Drafting Your AI Use Policy

In this series’ first blog post, we broke down AI policies into three categories: 1) a comprehensive organizational AI policy that includes organizational principles, roles, and processes; 2) an AI use policy that outlines which tools and use cases are allowed, as well as the precautions employees must take when using them; and 3) a public-facing AI policy that outlines the core ethical principles the organization adopts, as well as its stance on key AI policy issues. In this second post on AI policies, we explore the critical decisions and factors organizations should consider as they draft their AI use policy.

AI Policy Series 1: Drafting Your Comprehensive AI Policy

As organizations increase their adoption of AI, governance leaders are looking to put policies in place that ensure their AI deployments align with organizational principles, comply with regulatory standards, and mitigate potential risks. But knowing where to start when developing these policies can be overwhelming. Let’s start with some important context. AI Policies break […]