At Trustible, we believe AI can be a powerful force for good, but it must be governed effectively to align with public benefit. Introducing the Trustible AI Governance Insights Center, a public, open-source library designed to equip enterprises, policymakers, and consumers with essential knowledge and tools to navigate AI’s risks and benefits. Our comprehensive taxonomies cover AI Risks, Mitigations, Benefits, and Model Ratings, providing actionable insights that empower organizations to implement robust governance practices. Join us in transforming the conversation around trusted AI into tangible, measurable outcomes. Explore the Insights Center today!

Navigating AI Vendor Risk: 10 Questions for your Vendor Due Diligence Process

AI is everywhere, but the race to add AI from vendors has embedded unknown risks into your supply chain. Knowing what type of AI your suppliers use is difficult enough, let alone knowing how to ensure your due diligence adequately addresses the unique risks it may pose. Yet, customers and regulators are increasingly probing into […]

Trustible Announces New Model Transparency Ratings to Enhance AI Model Risk Evaluation

Organizational leaders want to better understand which AI models may be the best fit for a given use case. However, limited public transparency about these systems makes this evaluation difficult.

In response to the rapid development and deployment of general-purpose AI (GPAI) models, Trustible is proud to introduce its research on Model Transparency Ratings – offering a comprehensive assessment of transparency disclosures of the top 21 Large Language Models (LLMs).

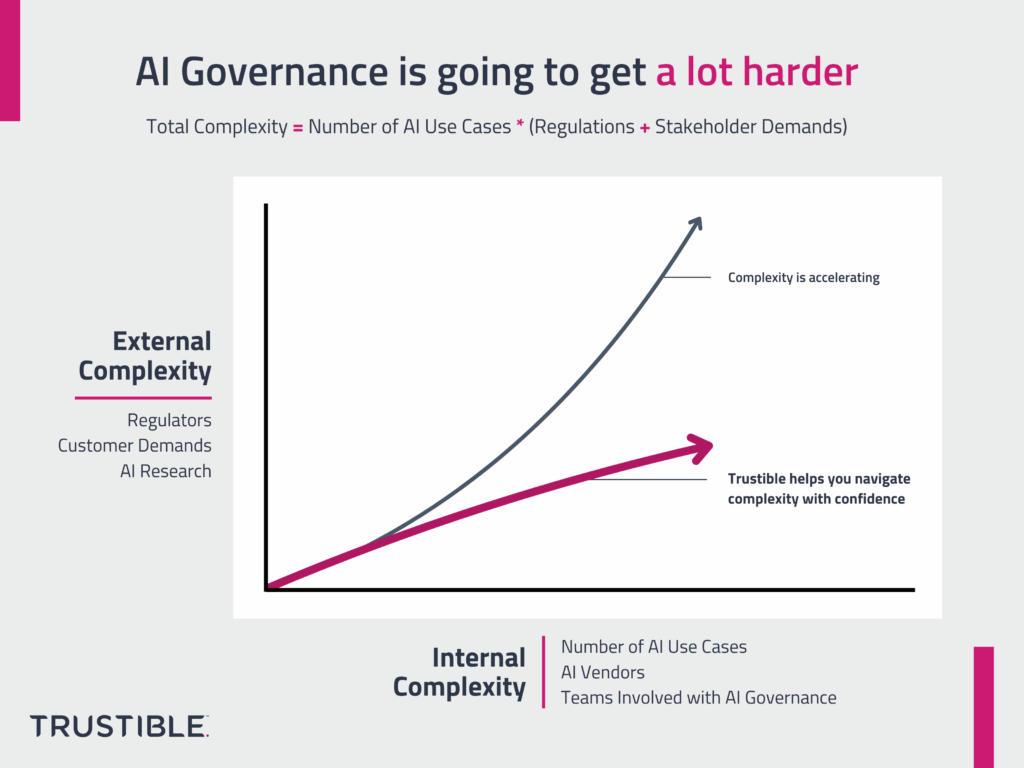

Why AI Governance is going to get a lot harder

AI governance is hard because it requires collaboration across multiple teams and an understanding of a highly complex technology and its supply chains. It’s about to get even harder. The complexity of AI governance is growing along two dimensions at the same time, and both are poised to accelerate in the coming […]