New York became the second state last year to enact a frontier model disclosure law when Governor Kathy Hochul signed the Responsible AI Safety and Education (RAISE) Act. The new law requires frontier model providers to disclose certain safety processes for their models and report certain safety incidents to state regulators, with many similarities to California’s slate of AI laws passed last fall. The RAISE Act will take effect on January 1, 2027. This article covers who must comply with the RAISE Act, what transparency obligations the law creates, and how the law will be enforced.

Everything You Need to Know About the Executive Order on a National AI Policy Framework (2025)

On December 11, 2025, President Trump signed an Executive Order directing the federal government to build a “minimally burdensome” national framework for AI and to push back against state AI laws the Administration views as harmful to innovation. The EO takes a novel approach via Executive Branch authority, creating an AI Litigation Task Force and asking the U.S. Department of Commerce to evaluate state AI laws and identify “onerous” ones (explicitly citing laws that require models to “alter their truthful outputs”).

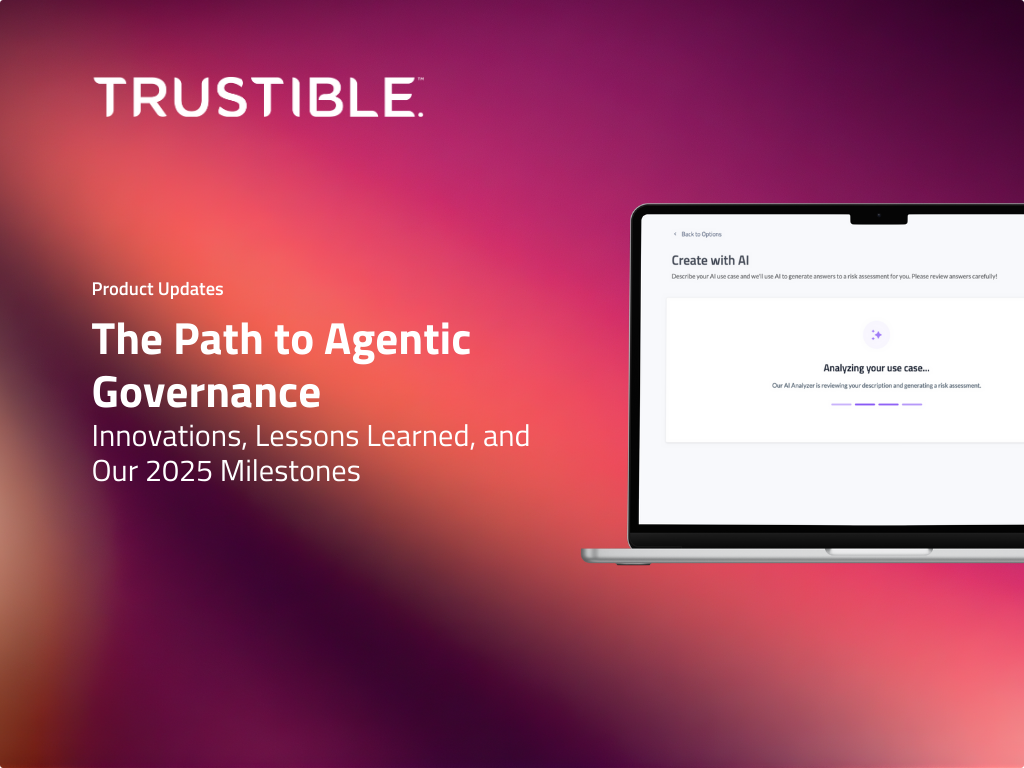

The Path to Agentic Governance: Innovations, Lessons Learned, and Our 2025 Milestones

In 2025, Trustible delivered the continuous, scalable programs needed for faster AI adoption, just as AI governance itself was shifting from principles and pilots to real production.

Our strengthened intelligence, collaboration, automation, and change management capabilities helped enterprises deploy AI deeper into workflows, decisions, and customer experiences.

5 AI Governance Trends Heading into 2026

AI has moved from experimental pilots to systems that shape real-world decisions, customer interactions, and mission outcomes. Organizations across sectors, including financial services, healthcare, insurance, retail, and the public sector, now depend on AI to run core operations and deliver better experiences. Their enthusiasm for adopting the technology responsibly is growing as well.

Shadow AI: What It Is, Why It Matters, and What To Do About It

Shadow AI has climbed to the top of many security and governance risk lists, and for good reason. But the phrase itself is slippery: different teams use it to mean different things, and the detection tools being marketed as ‘Shadow AI detectors’ often catch only a narrow slice of the problem. That mismatch creates confusion for security and compliance teams, and for business leaders who want only one thing: to reduce data leakage, regulatory exposure, and business risk without strangling the organization’s ability to innovate.

Healthcare Regulation of AI: A Comprehensive Overview

AI in healthcare isn’t starting from a regulatory vacuum. It’s starting from an environment that already treats digital tools as safety‑critical: medical device rules, clinical trial regulations, GxP controls, HIPAA and GDPR, and payer oversight all assume that failing systems can directly harm patients or distort evidence. That makes healthcare one of the few sectors where AI is being plugged into dense, pre‑existing regulatory schemas rather than waiting for AI‑specific laws to catch up.

Trustible Recognized in the 2025 Gartner® Market Guide for AI Governance Platforms

Trustible, a leading AI governance platform provider, is pleased to be listed as a Representative Vendor in the 2025 Gartner Market Guide for AI Governance Platforms. We believe this is a milestone that signals the start of an inflection point, when AI governance is no longer optional, experimental, or theoretical; it’s now a business imperative […]

AI Governance Best Practices for Healthcare Systems and Pharmaceutical Companies

In the rapidly evolving landscape of healthcare, AI promises to revolutionize patient care, but it also brings significant risks. From algorithmic bias to data privacy breaches, the stakes are high. Effective AI governance is essential to harness the benefits of these technologies while safeguarding patient safety and ensuring compliance with regulations. This article delves into the critical challenges healthcare systems and pharmaceutical companies face, offering practical solutions and best practices for implementing trustworthy AI. Discover how to navigate the complexities of AI in healthcare and protect your organization from potential pitfalls.

Introducing the Trustible AI Governance Insights Center

At Trustible, we believe AI can be a powerful force for good, but it must be governed effectively to align with public benefit. Introducing the Trustible AI Governance Insights Center, a public, open-source library designed to equip enterprises, policymakers, and consumers with essential knowledge and tools to navigate AI’s risks and benefits. Our comprehensive taxonomies cover AI Risks, Mitigations, Benefits, and Model Ratings, providing actionable insights that empower organizations to implement robust governance practices. Join us in transforming the conversation around trusted AI into tangible, measurable outcomes. Explore the Insights Center today!

Everything You Need to Know about California’s New AI Laws

The California legislature has concluded another AI-inspired legislative session, and Governor Gavin Newsom has signed (or vetoed) bills that will have new impacts on the AI ecosystem. By our analysis, California now leads U.S. states in rolling out the most comprehensive set of targeted AI regulations in the country – but now what? The dominant […]