New York became the second state last year to enact a frontier model disclosure law when Governor Kathy Hochul signed the Responsible AI Safety and Education (RAISE) Act. The new law requires frontier model providers to disclose certain safety processes for their models and report certain safety incidents to state regulators, with many similarities to California’s slate of AI laws passed last fall. The RAISE Act will take effect on January 1, 2027. This article covers who must comply with the RAISE Act, what transparency obligations the law creates, and how the law will be enforced.

Everything You Need to Know About the Executive Order on a National AI Policy Framework (2025)

On December 11, 2025, President Trump signed an Executive Order directing the federal government to build a “minimally burdensome” national framework for AI and to push back against state AI laws the Administration views as harmful to innovation. The EO takes a novel approach via Executive Branch authority, creating an AI Litigation Task Force and asking the U.S. Department of Commerce to evaluate state AI laws and identify “onerous” ones (explicitly citing laws that require models to “alter their truthful outputs”).

5 AI Governance Trends Heading into 2026

AI has moved from experimental pilots to systems that shape real-world decisions, customer interactions, and mission outcomes. Organizations across sectors, including financial services, healthcare, insurance, retail, and the public sector, now depend on AI to run core operations and deliver better experiences. Their enthusiasm for adopting the technology responsibly is growing as well.

Healthcare Regulation of AI: A Comprehensive Overview

AI in healthcare isn’t starting from a regulatory vacuum. It’s starting from an environment that already treats digital tools as safety‑critical: medical device rules, clinical trial regulations, GxP controls, HIPAA and GDPR, and payer oversight all assume that failing systems can directly harm patients or distort evidence. That makes healthcare one of the few sectors where AI is being plugged into dense, pre‑existing regulatory regimes rather than waiting for AI‑specific laws to catch up.

AI Governance Best Practices for Healthcare Systems and Pharmaceutical Companies

In the rapidly evolving landscape of healthcare, AI promises to revolutionize patient care, but it also brings significant risks. From algorithmic bias to data privacy breaches, the stakes are high. Effective AI governance is essential to harness the benefits of these technologies while safeguarding patient safety and ensuring compliance with regulations. This article delves into the critical challenges healthcare systems and pharmaceutical companies face, offering practical solutions and best practices for implementing trustworthy AI. Discover how to navigate the complexities of AI in healthcare and protect your organization from potential pitfalls.

Introducing the Trustible AI Governance Insights Center

At Trustible, we believe AI can be a powerful force for good, but it must be governed effectively to align with public benefit. Introducing the Trustible AI Governance Insights Center, a public, open-source library designed to equip enterprises, policymakers, and consumers with essential knowledge and tools to navigate AI’s risks and benefits. Our comprehensive taxonomies cover AI Risks, Mitigations, Benefits, and Model Ratings, providing actionable insights that empower organizations to implement robust governance practices. Join us in transforming the conversation around trusted AI into tangible, measurable outcomes. Explore the Insights Center today!

AI Governance Meets AI Insurance: How Trustible and Armilla Are Advancing AI Risk Management

As enterprises race to deploy AI across critical operations, especially in highly regulated sectors like finance, healthcare, telecom, and manufacturing, they face a double-edged sword. AI promises unprecedented efficiency and insights, but it also introduces complex risks and uncertainties. Nearly 59% of large enterprises are already working with AI and planning to increase investment, yet only about 42% have actually deployed AI at scale. At the same time, incidents of AI failures and misuse are mounting: the Stanford AI Index noted a 26-fold increase in AI incidents since 2012, and over 140 AI-related lawsuits are already pending in U.S. courts. These statistics underscore a growing reality: while AI’s presence in the enterprise is accelerating, so too are the risks and scrutiny around its use.

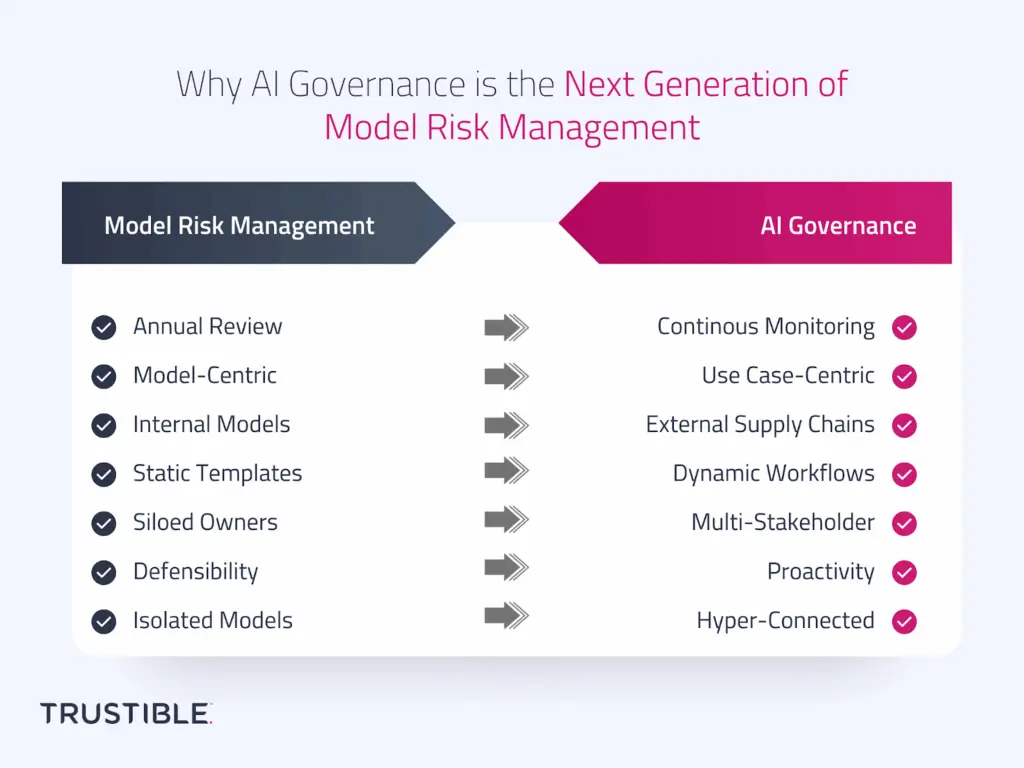

Why AI Governance is the Next Generation of Model Risk Management

For decades, Model Risk Management (MRM) has been a cornerstone of financial services risk practices. In banking and insurance, model risk frameworks were designed to control the risks of internally built, rule-based, or statistical models such as credit risk models, actuarial pricing models, or stress testing frameworks. These practices have served regulators and institutions well, providing structured processes for validation, monitoring, and documentation.

Should the EU “Stop the Clock” on the AI Act?

The European Union (EU) AI Act entered into force in August 2024, after years of negotiations (and some drama). Since then, the AI Act’s implementation has been somewhat bumpy. The initial set of obligations for general-purpose AI (GPAI) providers took effect in August 2025, but the voluntary Code of Practice faced multiple drafting delays. The finalized version was released with less than a month to go before GPAI providers needed to comply with the law.

What the Trump Administration’s AI Action Plan Means for Enterprises

The Trump Administration released “Winning the AI Race: America’s AI Action Plan” (AI Action Plan) on July 23, 2025. The AI Action Plan was published in accordance with the January 2025 Removing Barriers to American Leadership in AI Executive Order. The AI Action Plan proposes approximately 90 policy recommendations within three thematic pillars: Pillar I addresses […]