What is Colorado Regulation 10-1-1? In July 2021, Governor Jared Polis signed SB 21-169 into law, which directed the Colorado Division of Insurance (CO DOI) to adopt risk management requirements that prevent algorithmic discrimination in the insurance industry. After two years and several revisions, a final risk management regulation for life insurance providers was officially […]

Everything you need to know about the NIST AI Risk Management Framework

What is the NIST AI RMF? The National Institute of Standards and Technology (NIST) Artificial Intelligence Risk Management Framework is a voluntary framework released in 2023 that helps organizations identify and manage the risks associated with the development and deployment of artificial intelligence. It is similar in intent and structure to the NIST Cybersecurity Framework […]

Privacy Pioneers: AI as the New Frontier

In our new research paper, we discuss how privacy professionals and their organizations can take on AI governance, and what will happen if they don’t. Key findings include:

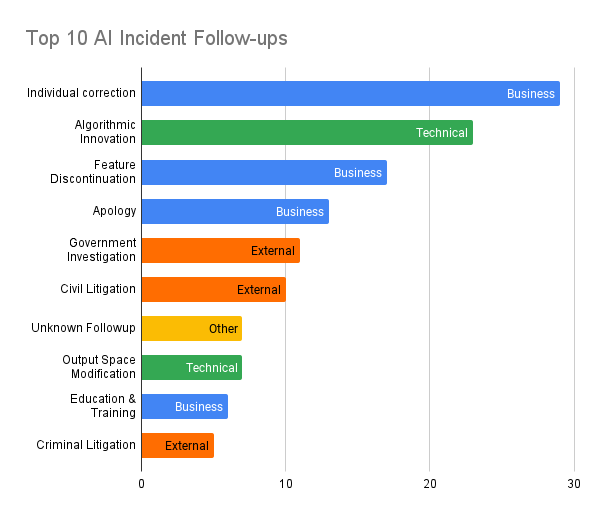

What to do when AI goes wrong?

AI systems have immense beneficial applications, but they also carry significant risks. Until recently, research into these risks, and into the broader field of AI safety, received comparatively little attention or investment. For the longest time, there were no reliable sources of information about adverse events caused by AI systems for researchers to study. […]

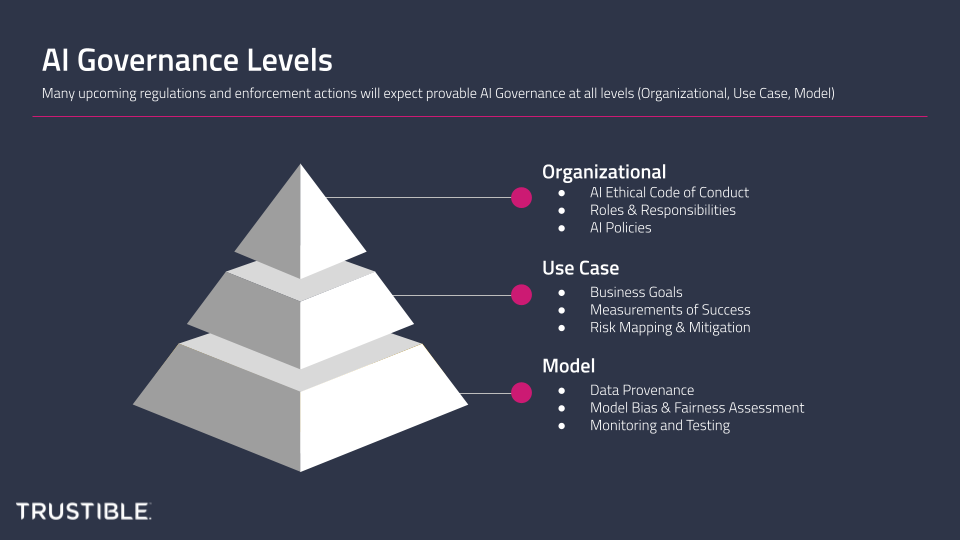

3 Levels of AI Governance – It’s not just about the models!

While AI has been used in enterprise and consumer products for decades, only large tech organizations with sufficient resources were able to implement it at scale. In the past few years, advances in the quality and accessibility of ML systems have led to a rapid proliferation of AI tools in everyday life. The accessibility of these tools means there is a massive need for good AI governance, both by AI providers (e.g. OpenAI) and by the organizations implementing and deploying AI systems in their own products.

The Trustible Story

Hi there 👋! We’re Trustible – a software company dedicated to enabling organizations to adopt trustworthy & responsible AI. Here’s our story.

AI Governance at Scale: Trustible Becomes Official Databricks Technology Partner

At Trustible, we empower organizations to responsibly build, deploy, and monitor AI systems at scale. Today, we are excited to announce our partnership with Databricks to bring together our leading AI governance platform with their trusted data and AI lakehouse, enabling joint customers to rapidly implement responsible, compliant, and accountable AI. We believe AI practitioners […]

How does China’s approach to AI regulation differ from the US and EU?

Artificial intelligence and geopolitics go hand in hand. On Thursday, July 13, 2023, the Cyberspace Administration of China (CAC) released its “Interim Measures for the Management of Generative Artificial Intelligence Services.” In it, the Chinese government lays out its rules to regulate those who provide generative AI capabilities to the public in China. While many […]

Trustible Announces Prestigious Appointments to its Advisory Board

Pedigree and expertise of new members will drive Trustible’s product development and company growth. WASHINGTON, June 21, 2023 /PRNewswire/ -- Trustible™, a software company that helps organizations accelerate Responsible AI governance to maximize trust and manage risk, today announced the appointment of several experienced industry leaders to the company’s Advisory Board. The appointment of the […]

Towards a Standard for Model Cards

This blog post is intended for a technical audience. In it, we cover: The term “Model Card” was coined by Mitchell et al. in the 2018 paper Model Cards for Model Reporting. At their core, Model Cards are the nutrition labels of the AI world, providing instructions and warnings for a trained model. When used, they […]