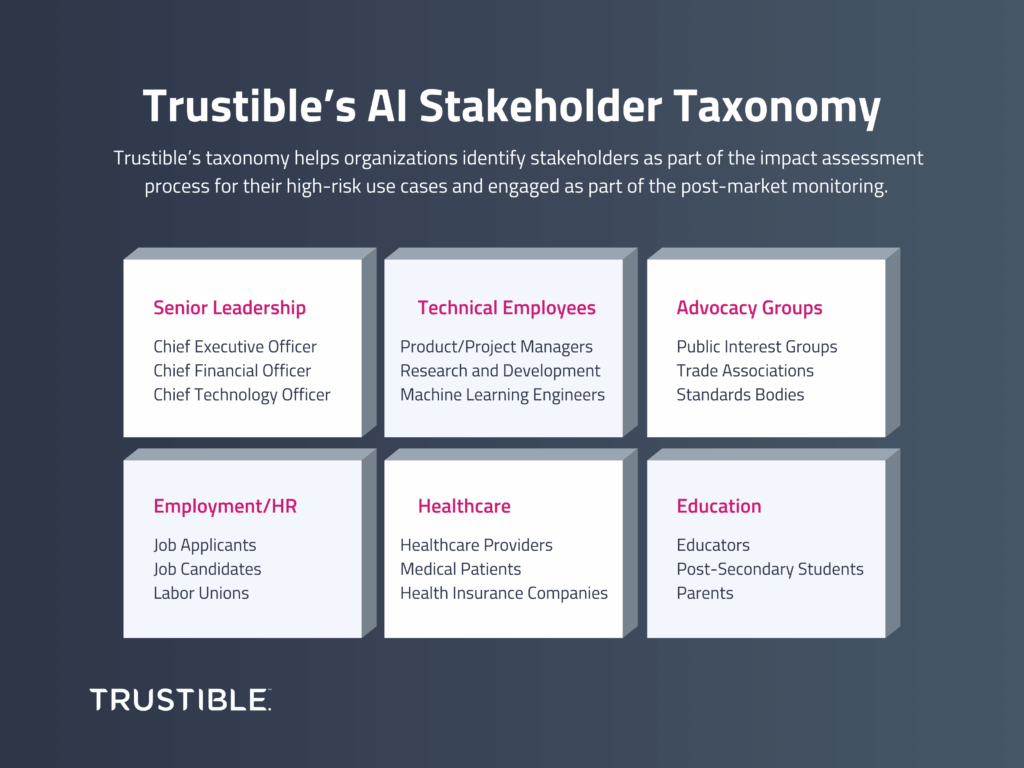

Trustible developed an AI Stakeholder Taxonomy that can help organizations easily identify stakeholders as part of the impact assessment process for their high-risk use cases.

Trustible Achieves SOC 2 Type II Compliance Certification

Today, we’re excited to announce the successful completion of the Service Organization Controls (SOC) 2 Type II audit and certification, which validates Trustible’s robust controls, effective risk management and adherence to industry best practices. The certification follows an extensive audit process conducted by an independent third party, Insight Assurance, affirming that Trustible follows the highest standards of data security and operational integrity.

Introducing Trustible’s New US Insurance AI Framework: Simplifying AI Compliance for Insurers

At Trustible, we understand the challenges insurers face in navigating the evolving AI regulatory landscape, particularly at the state level in the U.S. That’s why we’re excited to introduce the Trustible US Insurance AI Framework, designed to streamline compliance by synthesizing the latest insurance AI regulations into one comprehensive, easy-to-comply-with framework embedded in our platform.

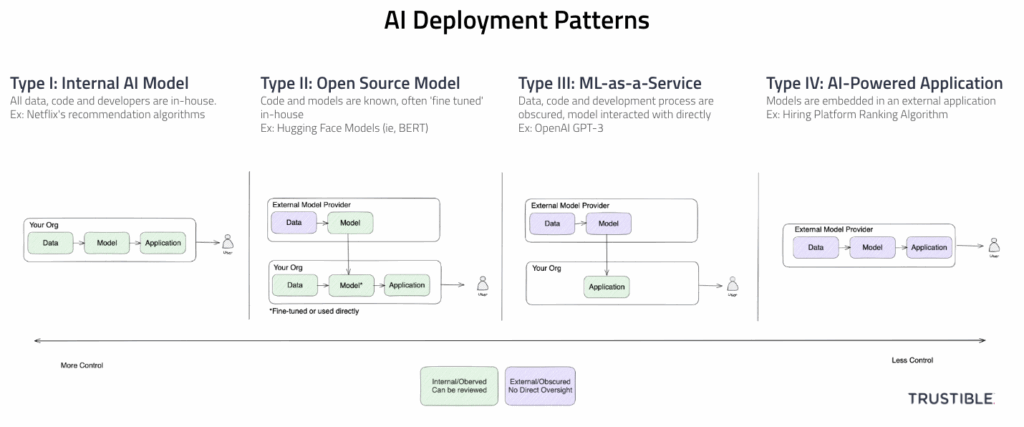

ML Deployment Patterns & Associated AI Governance Challenges

As the deployment of AI becomes pervasive, many teams from across your organization need to get involved with AI Governance, not only the data scientists and engineers. With growing government regulation and reputational risk, it is increasingly essential that all stakeholders work with a consistent framework for categorizing different patterns of AI deployments. This blog post offers one high-level framework for categorizing different AI deployment patterns and discusses some of the AI Governance challenges associated with each pattern.

Everything you need to know about the NY DFS Insurance Circular Letter No. 7

On July 11, 2024, the New York Department of Financial Services (NY DFS) released its final circular letter on the use of external consumer data and information sources (ECDIS), AI systems, and other predictive models in underwriting and pricing insurance policies and annuity contracts. A circular letter is not a regulation per se, but rather a formalized interpretation of existing laws and regulations by the NY DFS. The finalized guidance comes after the NY DFS sought input on its proposed circular letter, which was published in January 2024.

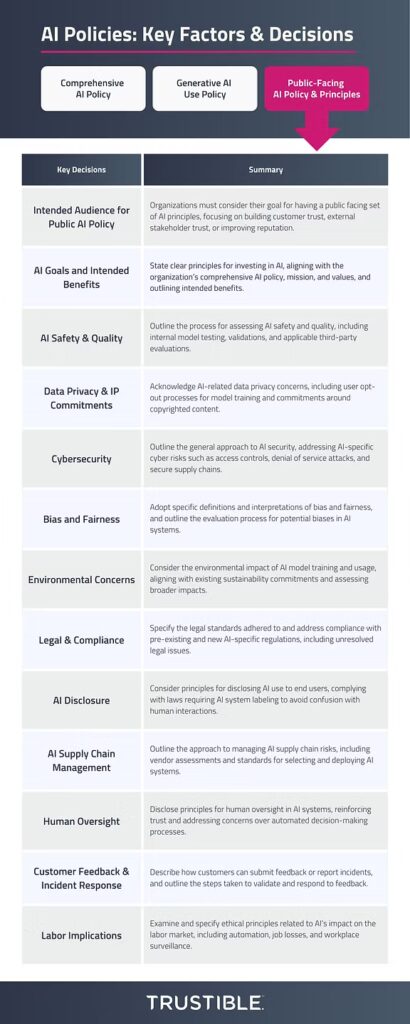

AI Policy Series 3: Drafting Your Public AI Principles Policy

In our final blog post of this AI Policy series (see Comprehensive AI Policy and AI Use Policy guidance posts here), we want to explore what organizations should make available to the public about their use of AI. According to recent research by Pew, 52 percent of Americans feel more concerned than excited by AI. This data demonstrates that, while organizations may understand or realize the value of AI, their users and customers may harbor some skepticism. Policymakers and large AI companies have sought to address public concerns, albeit in their own ways.

AI Policy Series 2: Drafting Your AI Use Policy

In this series’ first blog post, we broke down AI policies into 3 categories: 1) a comprehensive organizational AI policy that includes organizational principles, roles, and processes, 2) an AI use policy that outlines what kinds of tools and use cases are allowed, as well as what precautions employees must take when using them, and 3) a public-facing AI policy that outlines the core ethical principles the organization adopts, as well as its stance on key AI policy issues. In this second blog post on AI policies, we want to explore critical decisions and factors that organizations should consider as they draft their AI use policy.

Trustible Selected for Google for Startups Latino Founders Fund

Trustible is thrilled to announce that it has been selected as a recipient of the prestigious Google for Startups Latino Founders Fund. This funding from Google is a testament to Trustible’s innovation, growth potential, and continued leadership in the field of AI governance.

AI Policy Series 1: Drafting Your Comprehensive AI Policy

As organizations increase their adoption of AI, governance leaders are looking to put in place policies that ensure their AI deployment aligns with their organization’s principles, complies with regulatory standards, and mitigates potential risks. But knowing where to start in developing those policies can be overwhelming.

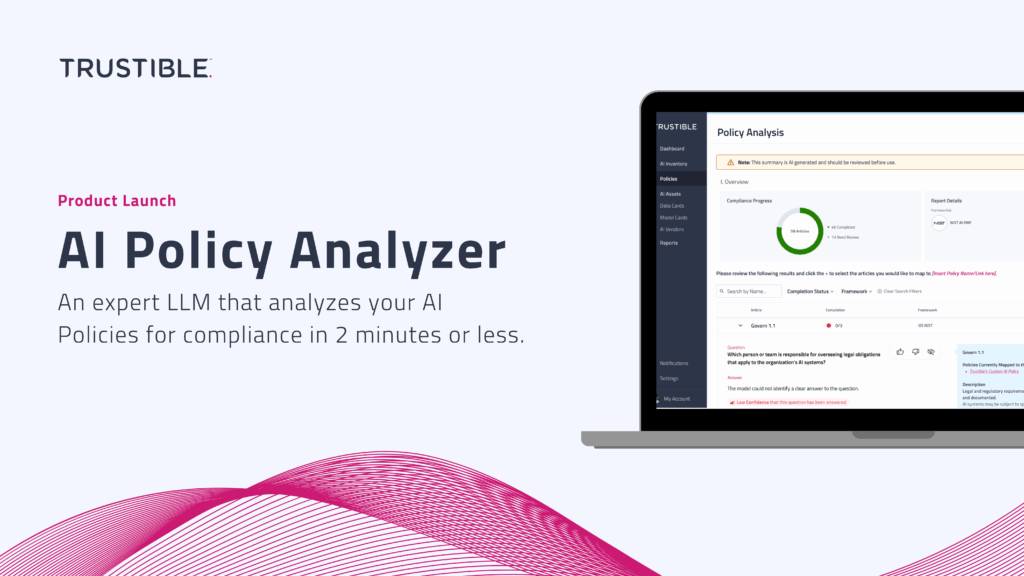

Product Launch: Trustible’s AI Policy Analyzer

For enterprise AI leaders and governance experts, developing policies to guide the appropriate use and risk mitigation of AI can be a daunting task. Moreover, understanding whether those policies comply with AI regulations and standards can be costly, time-consuming, and overwhelming. Trustible’s AI Policy Analyzer is an expert AI system designed to simplify this process, providing an automated analysis of your existing AI policies in just minutes.