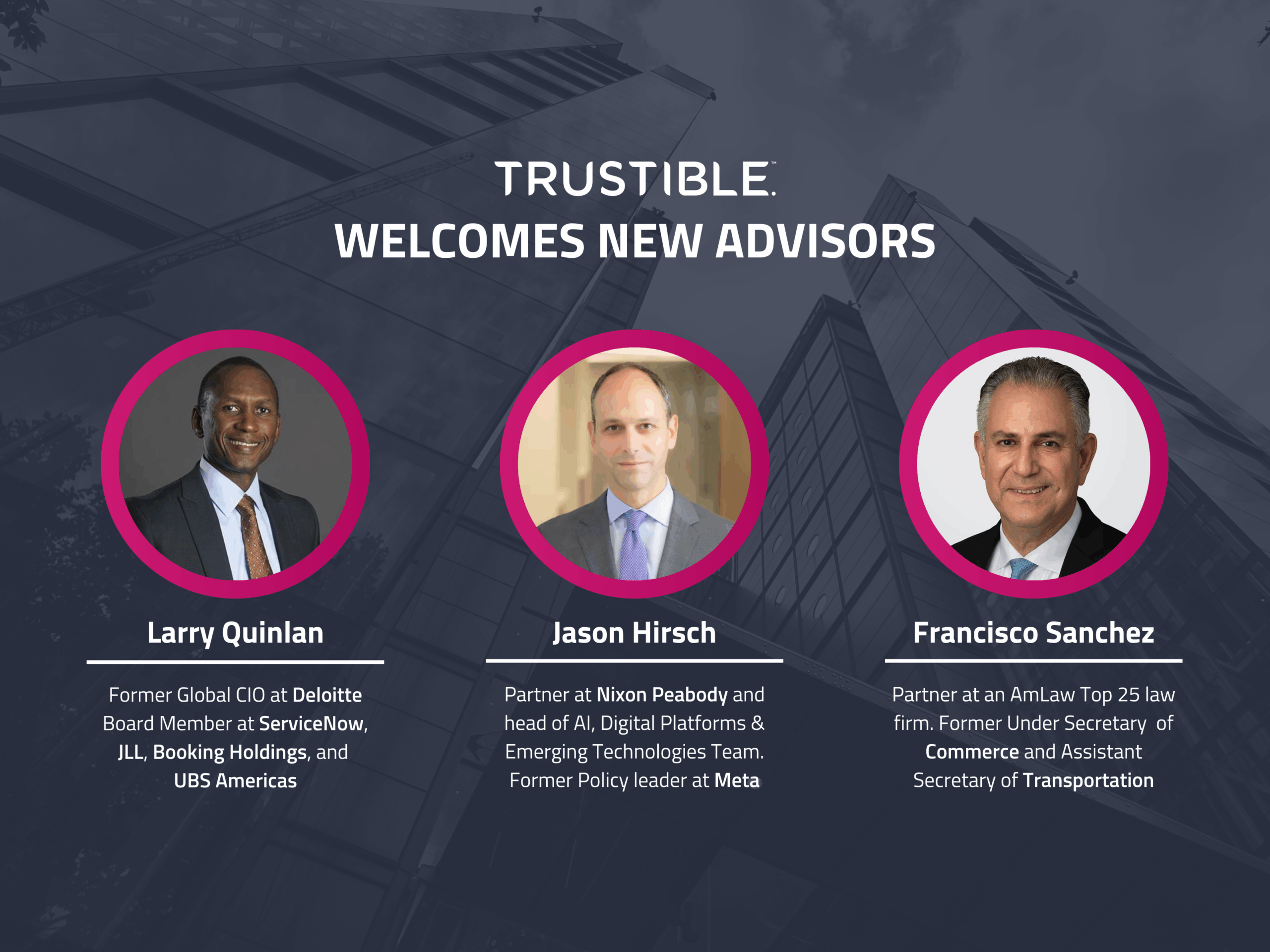

We are thrilled to announce the addition of three new members to the Trustible Advisory Board: Larry Quinlan, Jason D. Hirsch, and Francisco Sánchez. Their deep expertise in AI, enterprise technology, regulatory strategy, and product counseling will guide Trustible customers and leadership on global challenges at the intersection of technology, law, and government policy.

Everything you need to know about Colorado SB 205

On May 17, 2024, Colorado Governor Jared Polis signed the Consumer Protections for Artificial Intelligence Act (SB 205) into law, making it the first comprehensive state AI law to impose rules on certain high-risk AI systems. Under the law, AI used to support ‘consequential decisions’ in certain use cases is treated as ‘high-risk’ and is subject to a range of risk management and reporting requirements. The new rules take effect on February 1, 2026.

Enhancing the Effectiveness of AI Governance Committees

Organizations are increasingly deploying artificial intelligence (AI) systems to drive innovation and gain competitive advantages. Effective AI governance is crucial for ensuring these technologies are used ethically, comply with regulations, and align with organizational values and goals. However, as AI use and AI regulation become more pervasive, managing these technologies responsibly becomes more complex.

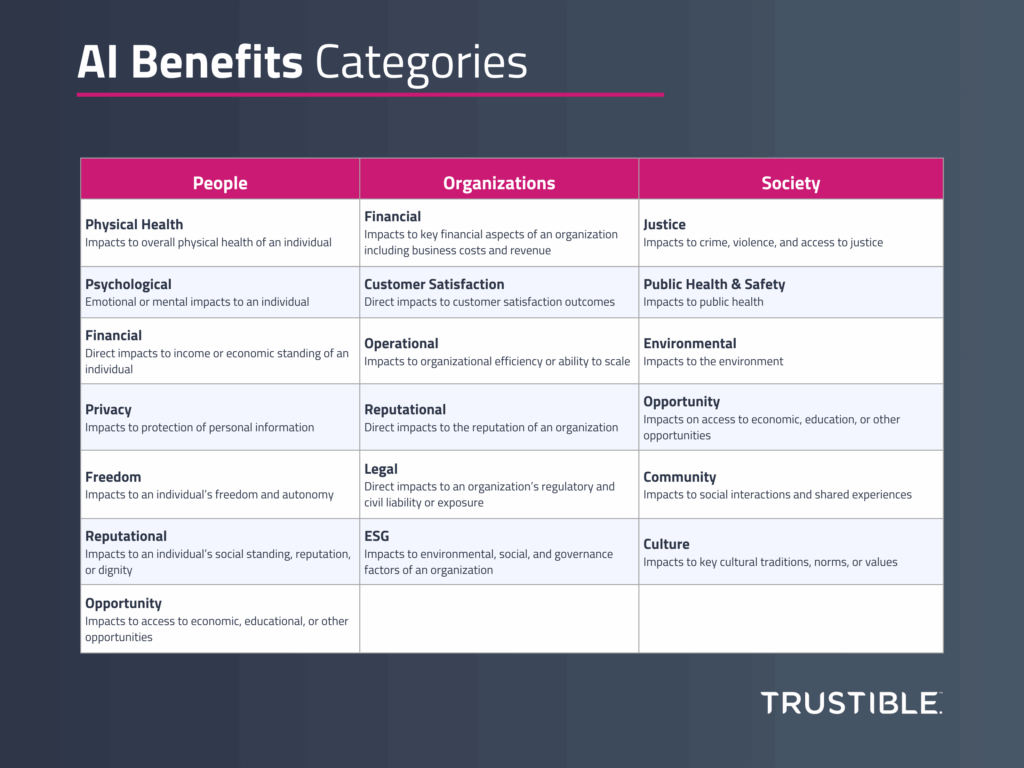

A Framework for Measuring the Benefits of AI

Significant research has been invested in studying AI risks, a response to the rapid pace of deployment of highly capable AI models across a wide variety of use cases. Over the last year, governments around the world have established AI Safety institutes tasked with developing methodologies to assess the impact and probability of various […]

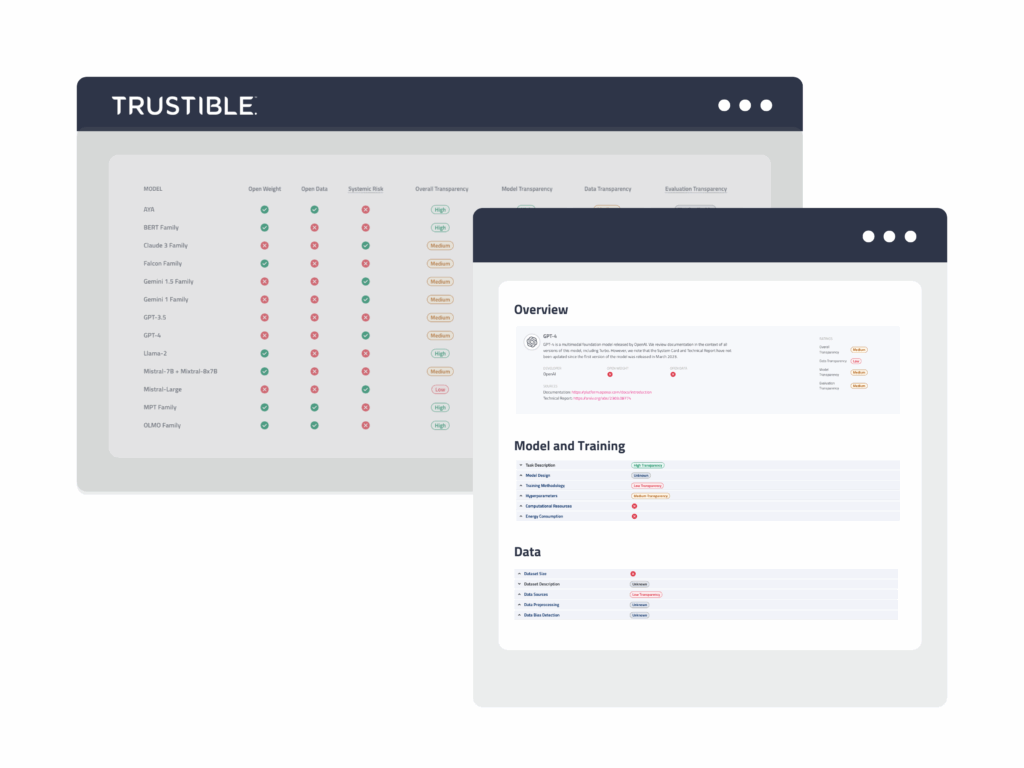

Trustible Announces New Model Transparency Ratings to Enhance AI Model Risk Evaluation

Organizational leaders want to better understand which AI models are the best fit for a given use case. However, limited public transparency about these systems makes this evaluation difficult.

In response to the rapid development and deployment of general-purpose AI (GPAI) models, Trustible is proud to introduce its research on Model Transparency Ratings, a comprehensive assessment of the transparency disclosures of the top 21 Large Language Models (LLMs).

Inside Trustible’s Methodology for Model Transparency Ratings

The speed at which new general-purpose AI (GPAI) models are being developed makes it difficult for organizations to choose which model to use for a given AI use case. Model selection typically rests on benchmark performance, deployment model, and cost, but other factors, including a model’s data sources, ethical design decisions, and regulatory risks, must be accounted for as well. These considerations cannot be inferred from a model’s benchmark performance, yet they are necessary to determine whether a specific model is appropriate for a given task or legal to use within a jurisdiction.

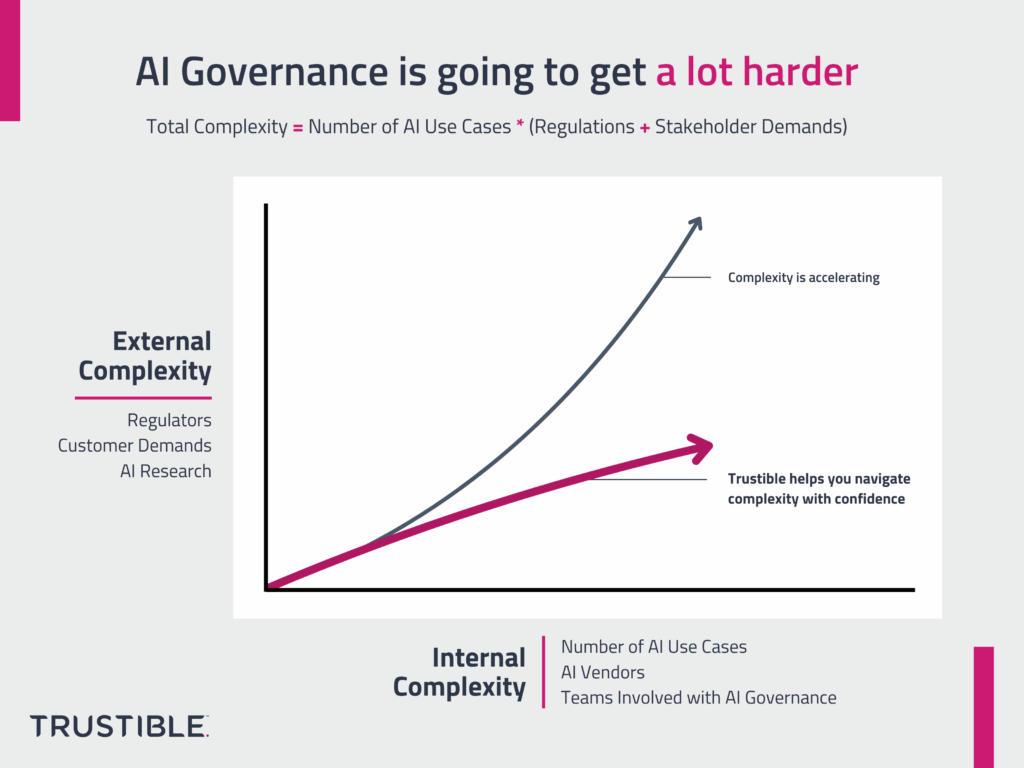

Why AI Governance is going to get a lot harder

AI governance is hard because it involves collaboration across multiple teams and an understanding of a highly complex technology and its supply chains. It’s about to get even harder. The complexity of AI governance is growing along two dimensions at the same time, and both are poised to accelerate in the coming […]

Analysis – How Trustible Helps Organizations Comply With The EU AI Act

The EU AI Act sets a global precedent in AI regulation, emphasizing human rights in the development and deployment of AI systems. While the law applies directly in EU member states, its extraterritorial reach will affect global businesses in profound ways. Global businesses whose AI-related applications or services either affect EU citizens or are supplied to EU-based companies will be responsible for complying with the EU AI Act. Failure to comply can result in fines of up to €35m or 7% of global annual turnover, whichever is higher, for the most serious violations, with lower penalties for SMEs and startups.

Analysis – Mapping the Requirements of NIST AI RMF, ISO 42001, and the EU AI Act

Navigating the complex and evolving landscape of AI governance requirements can be a real challenge for organizations. Trustible previously created a comprehensive cheat sheet comparing three important compliance frameworks: the NIST AI Risk Management Framework, ISO 42001, and the EU AI Act. This easy-to-understand visual maps the similarities and differences between these frameworks, […]

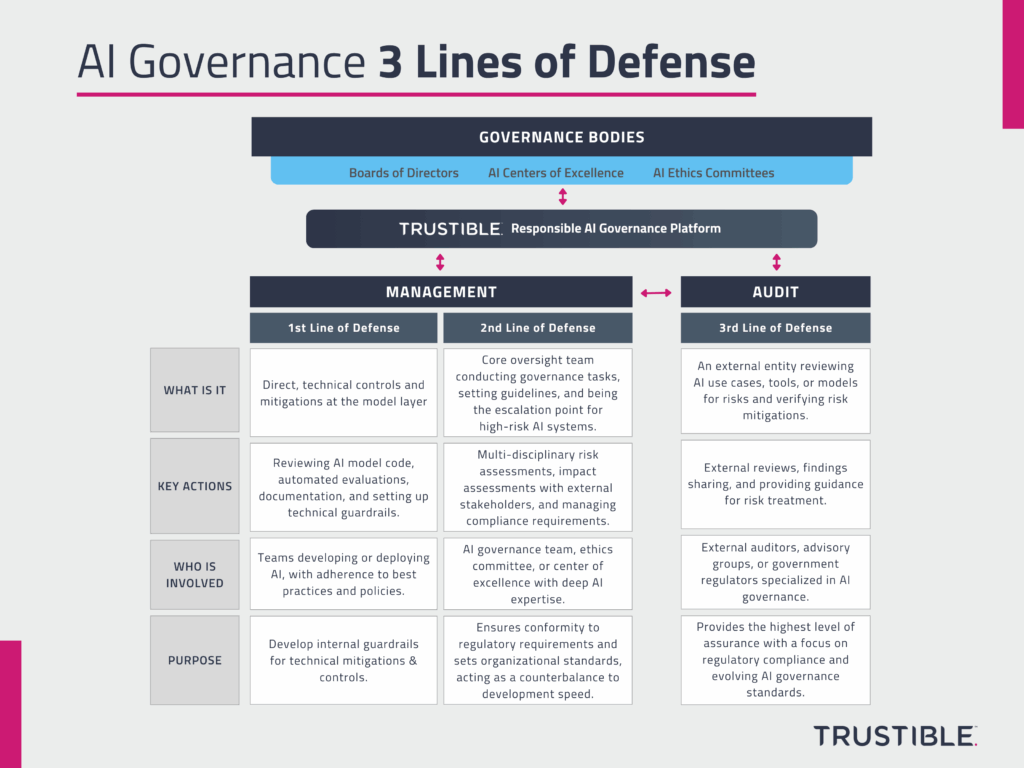

3 Lines of Defense for AI Governance

AI governance is complex because it involves multiple teams across an organization working to understand and evaluate the risks of dozens of AI use cases, and managing highly complex models with deep supply chains. On top of this organizational and technical complexity, AI can be used for a wide range of purposes, some relatively safe (e.g., an email spam filter) and others posing serious risks (e.g., a medical recommendation system). Organizations want to use AI responsibly, but struggle to balance innovation and AI adoption for low-risk uses with oversight and risk management for high-risk uses. To manage this, organizations need a multi-tiered governance approach that lets development teams experiment easily and safely, with clear escalation points for riskier uses.